No-code was initially promised as the democratization of software development, often likened to “building with Legos”: using visual blocks and drag-and-drop interfaces to bring ideas to life. For years, this allowed small business owners and creative entrepreneurs to build apps without spending months learning to code.

However, as the landscape shifts toward 2025, a significant “no-code wall” has emerged: traditional tools are often excellent for simple internal utilities or basic websites, but they frequently lack the sophistication, scalability, and security required for production-grade applications.

Launch Your App Today

Ready to launch? Skip the tech stress. Describe, Build, Launch in three simple steps.

Today, a new generation of platforms is emerging, moving beyond the “black box” of visual builders and into the era of Software Development Engineer (SDE)-level engineering standards. By leveraging advanced artificial intelligence, founders can now supercharge their apps and add AI features without coding, achieving a level of technical depth that previously required a full team of developers. This guide explores how to transition from mere prototyping to building high-performance, AI-driven software ecosystems with Imagine.bo, a platform designed for those who demand professional-grade results without the traditional technical debt.

The Limitations of Traditional No-Code for Production

Before moving forward, it is essential to understand why traditional no-code tools often fall short when a business scales. These tools typically offer a “what you see is what you get” interface that simplifies the building process but often hides the underlying logic.

- The “Black Box” and Data Silos: Many traditional platforms treat the backend as a black box. They might require external services like Airtable or Google Sheets for data storage, which can create fragmented ecosystems that are difficult to manage, secure, and scale. While connecting a spreadsheet to a web app is a fast way to build a CRM, it often lacks the robust relational logic and data persistence found in production-ready databases.

- Complexity and “The Wall”: As applications grow in complexity, founders often find themselves needing custom logic that the visual builder cannot provide. This often leads to a throwaway prototype: a tool that works for a few users but breaks under high traffic or complex data requests. In contrast, next-generation AI platforms aim to ensure that generated code isn’t throwaway; it follows the same rules and best practices a human developer would follow, which keeps it maintainable.

- The Integration Nightmare: Traditional tools often rely heavily on “glue code” platforms like Zapier to connect different services. While useful, excessive reliance on third-party connectors can introduce latency and security vulnerabilities. SDE-level platforms prefer direct API integrations, allowing you to connect your app to CRM systems and payment gateways natively.
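To make the “relational logic” point concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The table names, columns, and the `try_insert_order` helper are hypothetical and chosen only for illustration; this is not a description of how Imagine.bo or any other platform stores data. It shows the kind of rule a relational database can enforce on its own and a spreadsheet typically cannot: an order must point at a customer that actually exists.

```python
import sqlite3


def build_crm(conn: sqlite3.Connection) -> None:
    """Create a tiny, hypothetical CRM schema with an enforced relationship."""
    # SQLite only enforces foreign keys when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        "CREATE TABLE customers ("
        " id INTEGER PRIMARY KEY,"
        " email TEXT NOT NULL UNIQUE)"
    )
    conn.execute(
        "CREATE TABLE orders ("
        " id INTEGER PRIMARY KEY,"
        " customer_id INTEGER NOT NULL REFERENCES customers(id),"
        " total_cents INTEGER NOT NULL CHECK (total_cents >= 0))"
    )


def try_insert_order(conn: sqlite3.Connection, customer_id: int, total_cents: int) -> bool:
    """Return True if the order was stored, False if the database rejected it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO orders (customer_id, total_cents) VALUES (?, ?)",
                (customer_id, total_cents),
            )
        return True
    except sqlite3.IntegrityError:
        return False


conn = sqlite3.connect(":memory:")
build_crm(conn)
with conn:
    conn.execute("INSERT INTO customers (id, email) VALUES (1, 'ada@example.com')")

valid_order = try_insert_order(conn, customer_id=1, total_cents=4999)
orphan_order = try_insert_order(conn, customer_id=42, total_cents=1000)  # no such customer
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

In a spreadsheet, the second row would simply be saved and the broken reference would surface later, if at all. Here the database refuses it at write time.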

Positioning Imagine.bo: Next-Generation SDE-Level Engineering

Imagine.bo is built on the philosophy that non-technical founders should have access to the same engineering standards as Silicon Valley tech giants. Unlike older builders, this platform utilizes a prompt-first workflow and vibe-coding principles to generate complete, production-ready applications from natural language descriptions.

What is “SDE-Level” No-Code?

SDE-level engineering refers to software that includes a built-in database, robust authentication, secure hosting, and clean, standardized code. It means that when you describe an app idea, the AI doesn’t just “draw” a UI; it architecturally plans the frontend, the backend logic, and the relational database structures automatically.

The Imagine.bo Advantage: End-to-End Ownership

For a production-grade app, you need a full-stack ecosystem that works in harmony. Imagine.bo provides end-to-end ownership over every layer:

- Frontend Precision: Platforms now allow you to upload design files (from tools like Figma) or describe a design style like “claymorphism”. The AI then generates a pixel-perfect, responsive UI matching those standards. Understanding frontend development basics helps, but the AI handles the heavy lifting of CSS grids and responsiveness.

- Production-Ready Backend: Instead of just simple triggers, these platforms build sophisticated server-side logic and visual workflows that define how your app reacts to complex user actions. This includes handling loops, conditionals, and complex data transformations.

- Integrated Relational Databases: High-end no-code builders eliminate the need for external database services. They provide built-in relational databases where individual user data persists across sessions, maintaining personal info, progress tracking, and custom settings securely.

- Security and Compliance: Production apps require user authentication, such as Google sign-in, and rigorous privacy controls. Imagine.bo builds these into the foundation, so that you follow app security best practices and individual user datasets remain private. This is critical for meeting GDPR compliance guidelines.

- Deployment and Scaling: One-click publishing to secure URLs allows for instant deployment. Production-grade platforms provide high uptime (averaging >99%) and handle the underlying infrastructure so you can focus on growth.
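The idea that “individual user datasets remain private” comes down to an ownership check on every read. The sketch below is a simplified illustration with an in-memory store; the `UserDataStore` class and its method names are hypothetical, and a real platform would enforce this at the database and authentication layers rather than in a Python dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class UserDataStore:
    """Hypothetical per-user store: each user can only read their own records."""

    _records: dict[str, dict[str, str]] = field(default_factory=dict)

    def save(self, user_id: str, key: str, value: str) -> None:
        # Data is always written under the authenticated user's own ID.
        self._records.setdefault(user_id, {})[key] = value

    def read(self, requester_id: str, owner_id: str, key: str) -> str:
        # Reject any request where the requester is not the owner of the data.
        if requester_id != owner_id:
            raise PermissionError("users may only read their own data")
        return self._records[owner_id][key]


store = UserDataStore()
store.save("alice", "progress", "lesson-3")

own_value = store.read("alice", "alice", "progress")

try:
    store.read("bob", "alice", "progress")
    cross_user_blocked = False
except PermissionError:
    cross_user_blocked = True
```

The point of the sketch is the shape of the rule, not the storage: the check runs on every read, so no screen or workflow can skip it by accident.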

The Art of “Vibe Coding”: Prompt Engineering for Apps

The most significant shift in 2025 is the move from “click-based” building to “prompt-based” building. This is often called vibe coding, where the developer captures the feeling and intent of the software in natural language, and the AI translates that into executable code.

However, talking to an AI architect requires a specific skill set. It isn’t just about asking; it’s about asking correctly.

- Context is King: A prompt like “Build a CRM” will result in a generic tool. A prompt like “Build a custom CRM for retail associates that tracks customer clothing sizes and purchase history” provides the AI with the necessary data schema constraints.

- Iterative Refinement: You rarely get a perfect app in one shot. The workflow involves generating a base, testing it, and then using prompt engineering tips to refine specific elements. For example, “Change the dashboard to show a heatmap of sales data instead of a list.”

- Chain of Thought: When asking for complex logic, break it down. Tell the AI: “First, when the user clicks ‘Submit’, validate the email. Second, check if the email exists in the database. Third, if it does not exist, create a new record.” This step-by-step instruction reduces logic errors.
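The three-step instruction in the “Chain of Thought” example maps almost line for line onto code. The sketch below is one plausible reading of what an AI builder might generate from that prompt; the function name, the return values, and the deliberately simple email pattern are all assumptions made for illustration, and a plain dictionary stands in for the database.

```python
import re

# Intentionally simple pattern: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def handle_submit(email: str, database: dict[str, dict[str, str]]) -> str:
    """Run the three steps from the prompt, in order, and report the outcome."""
    normalized = email.strip().lower()

    # First: validate the email.
    if not EMAIL_PATTERN.match(normalized):
        return "invalid_email"

    # Second: check whether the email already exists in the database.
    if normalized in database:
        return "already_exists"

    # Third: it does not exist, so create a new record.
    database[normalized] = {"email": normalized}
    return "created"


database: dict[str, dict[str, str]] = {}
first_result = handle_submit("Ada@Example.com", database)
second_result = handle_submit("ada@example.com", database)
bad_result = handle_submit("not-an-email", database)
```

Because each step in the prompt became one clearly ordered check, there is little room for the AI to guess at the sequence, which is why step-by-step prompts tend to produce fewer logic errors.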

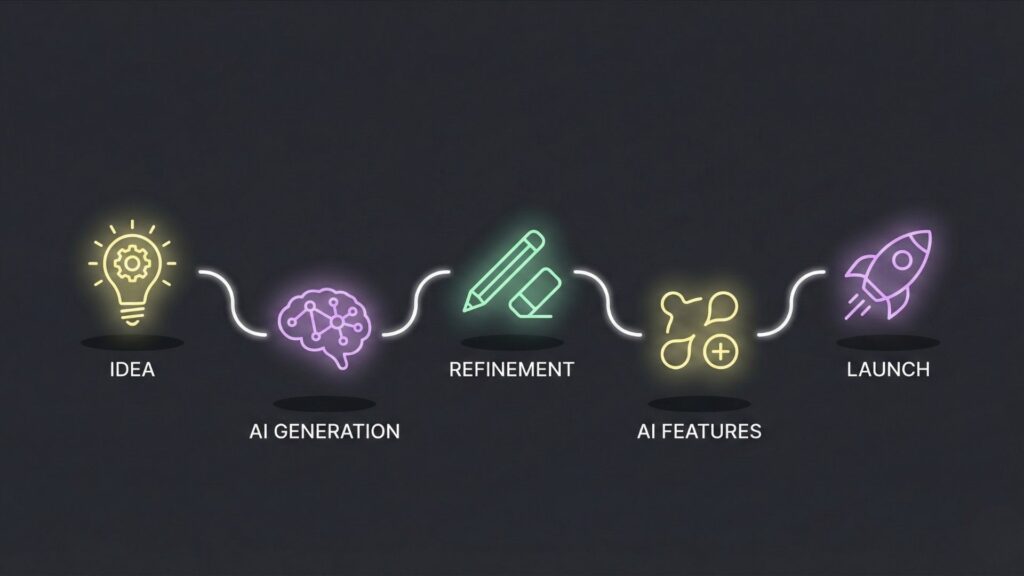

How to Supercharge Your App: The 5-Step Workflow

Building an AI-powered app is no longer about learning syntax; it is about learning “the choreography” of working with AI tools. To move from an idea to a live launch, follow this structured 5-step workflow:

Step 1: Clarity (Defining Your Objective)

Every successful app begins with an absolute understanding of the pain point it addresses. Before prompting the AI, you must define the target audience and the primary problem. Failing to ask the right questions as a founder often leads to “cool” tools that don’t drive business results. You must decide if you are building a Minimum Viable Product (MVP) to test the waters or a full-scale enterprise tool.

Step 2: Prompting and AI Generation

Once you have clarity, you “vibe-code” the initial application. You describe your app idea in plain English, including the required data structure and user access controls. A production-grade prompt should include user types (admin vs. basic), access controls (public/private), and core feature articulation.
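One way to make sure a prompt covers user types, access controls, and core features is to fill in a small checklist first and then render it as text. The sketch below is only an illustration of that habit: the `AppPromptSpec` class, its field names, and the output format are hypothetical and not an Imagine.bo input format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AppPromptSpec:
    """Hypothetical checklist for the pieces a production-grade prompt should name."""

    summary: str
    user_types: tuple[str, ...]
    access_rules: dict[str, str]  # area of the app -> "public" or "private"
    core_features: tuple[str, ...]

    def render(self) -> str:
        """Turn the checklist into a plain-English prompt."""
        lines = [f"Build {self.summary}."]
        lines.append("User types: " + ", ".join(self.user_types) + ".")
        lines.append("Access controls:")
        lines += [f"- {area}: {visibility}" for area, visibility in self.access_rules.items()]
        lines.append("Core features:")
        lines += [f"- {feature}" for feature in self.core_features]
        return "\n".join(lines)


spec = AppPromptSpec(
    summary=(
        "a custom CRM for retail associates that tracks "
        "customer clothing sizes and purchase history"
    ),
    user_types=("admin", "basic"),
    access_rules={"landing page": "public", "customer records": "private"},
    core_features=("customer search", "purchase history timeline"),
)
prompt = spec.render()
```

If any field is hard to fill in, that is usually a sign the idea needs more work in Step 1 before any generation starts.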

Step 3: Iterative Refinement and Debugging

AI generation happens quickly, often within 15 to 20 minutes for a basic scaffold. However, the “last 10% polish” requires iterative refinement and debugging. This is where you apply color theory to enhance aesthetics and ensure the user experience is fluid. If a button doesn’t work, you don’t dig into code; you tell the AI, “The submit button is not clearing the form after data entry. Please fix the workflow.”

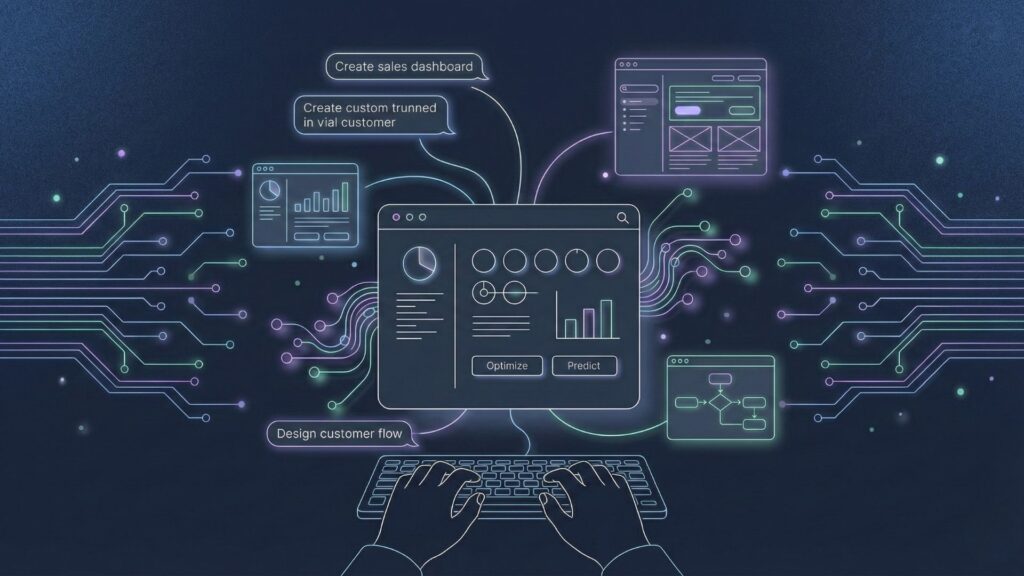

Step 4: Add AI Features Without Coding

To truly supercharge your app, you must integrate advanced AI models (like OpenAI’s GPT-4o, Claude 3.5 Sonnet, or Gemini) directly into your workflows. You can add AI to your app without coding to handle tasks like:

- Automated Summarization: Summarize meeting notes or long documents.

- Voice Output: Use AI text-to-speech tools for accessibility.

- Predictive Analytics: Use historical data to forecast trends.
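“Predictive analytics” can sound opaque, so here is a deliberately small sketch of the underlying idea: fit a straight line through past values and extend it one period forward. The `forecast_next` function is hypothetical, the sales figures are made-up sample data, and a production feature would likely use a richer model; this only shows what “use historical data to forecast trends” means in its simplest form.

```python
def forecast_next(values: list[float]) -> float:
    """Fit a least-squares line through equally spaced values and extend it one step."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two historical values to fit a trend")

    # Time periods are numbered 0, 1, ..., n - 1.
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n

    # Ordinary least-squares slope and intercept.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    variance = sum((x - mean_x) ** 2 for x in range(n))
    slope = covariance / variance
    intercept = mean_y - slope * mean_x

    # The next period has index n.
    return intercept + slope * n


monthly_sales = [100.0, 120.0, 140.0, 160.0]  # made-up sample data
next_month = forecast_next(monthly_sales)
```

With the sample data above, sales rise by 20 each month, so the fitted line projects 180 for the following month.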

Step 5: Validation, Security, and Launch

Before going live, thorough testing is crucial. Check for cross-browser compatibility issues and ensure your database permissions are secure. Once confident, use zero-downtime deployment to launch. Post-launch, you can monitor performance and iterate based on user feedback.

Deep Dive: Industry-Specific Use Cases

The power of SDE-level no-code is best understood through specific applications. Here is how different industries are utilizing Imagine.bo:

1. SaaS and Micro-SaaS

The barrier to entry for software entrepreneurs has never been lower. Founders are now building subscription-based apps that solve niche problems.

- Example: A micro-SaaS built in 48 hours that helps freelance writers generate SEO-optimized outlines. The app includes a payment gateway (Stripe), a user dashboard, and an AI generation engine.

- Strategy: Use generative SEO strategies to drive organic traffic to your new tool.

2. E-Commerce and Retail

Retailers are moving beyond standard Shopify templates to build custom operations tools.

- Example: A custom supply chain tracker that alerts store managers when stock is low.

- Example: An online store built with AI that uses computer vision to recommend products based on photos uploaded by customers.
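The low-stock alert in the supply chain example is, at its core, a threshold rule. The sketch below shows that rule in isolation; the `StockItem` fields, the reorder points, and the message format are hypothetical, and a real tracker would read inventory from the app's database and send the alerts through a notification channel.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StockItem:
    """Hypothetical inventory row."""

    sku: str
    on_hand: int
    reorder_point: int


def low_stock_alerts(items: list[StockItem]) -> list[str]:
    """Return one alert message for every item at or below its reorder point."""
    return [
        f"{item.sku}: {item.on_hand} left (reorder at {item.reorder_point})"
        for item in items
        if item.on_hand <= item.reorder_point
    ]


inventory = [
    StockItem(sku="TSHIRT-M", on_hand=3, reorder_point=10),
    StockItem(sku="JEANS-32", on_hand=25, reorder_point=10),
]
alerts = low_stock_alerts(inventory)
```

Describing the rule this precisely in a prompt (“alert the store manager when stock on hand is at or below the reorder point”) leaves the AI far less room to guess than “alert me when stock is low.”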

3. Internal Business Tools

Companies are replacing disjointed spreadsheets with unified AI-powered internal tools.

- Example: A logistics company built a driver safety app where drivers log incidents via voice, and the AI automatically categorizes the severity and notifies HR.

- Example: Transforming manual data entry into an automated workflow that scrapes leads and populates a CRM.

4. Education and Training

Educators are becoming “citizen developers,” launching Learning Management Systems (LMS) tailored to their specific curriculum.

- Example: An app that generates custom quizzes and lesson plans based on the student’s previous test performance, adapting in real-time.

Strategic Outcomes: Speed, Scalability, and Cost Savings

Leveraging an SDE-level AI platform offers founders a competitive edge that traditional development cannot match.

- Unprecedented Speed to Market: AI-powered no-code tools reduce development time by up to 45% and the time needed to document functionality by 50%. This allows founders to move from concept to a functional MVP in a matter of days or weeks.

- Massive Cost Savings: Hiring specialized developers or data scientists is expensive. Custom code vs. no-code analysis shows that AI enables small teams to build sophisticated applications for a fraction of the cost, allocating resources toward marketing and customer acquisition rather than technical overhead.

- Reliability and Enterprise Readiness: Because next-generation platforms follow professional engineering standards, the resulting apps are often enterprise-ready. They offer built-in role-based access, data governance, and compliance with standards like SOC 2 or GDPR.

Monetization and Growth: From Build to Business

Building the app is only half the battle; the other half is growth. SDE-level platforms often come with built-in SEO tools and marketing integrations.

- SEO Optimization: You can use AI to ensure your app’s landing pages are optimized for search engines. Read more about SEO strategies for AI-built products.

- Marketing Automation: Integrate your app with email marketing tools to nurture leads. You can even build AI agents for marketing automation that automatically send personalized follow-ups based on user behavior within the app.

- Subscription Models: Implementation of payment gateways like Stripe is seamless, allowing you to monetize your AI SaaS tool with tiered subscription plans immediately upon launch.

Conclusion: Engineering Your Future

The era of technical intimidation is over. The “no-code revolution” has matured from simple visual building into SDE-level engineering. Founders no longer need to compromise between ease of use and professional quality. By adopting high-authority platforms like Imagine.bo, you can supercharge your app and add AI features without coding, building a scalable, secure, and professional-grade empire in record time.

The rise of the citizen developer means that creativity and problem-solving skills are now more valuable than rote memorization of syntax. The technology now handles the complex coding, but success still requires the “Five Cs”: Clarity of objective, the Courage to start, Confidence built through practice, human Creativity, and Critical Thinking when troubleshooting.

Are you ready to build something you can be proud of? The future of work is not about who can write the best code, but who can best direct the AI to build it. Start your journey with Imagine.bo today and turn your million-dollar idea into a production-ready reality.

Frequently Asked Questions (FAQs)

Q: What is “vibe-coding”?
A: Vibe-coding is a modern approach to development where you describe the “vibe,” functionality, and style of your application in natural language (plain English), and the AI generates the corresponding code and interface for you. You can learn more about building apps with vibe coding here.

Q: Can I really scale an app built on Imagine.bo?
A: Yes. Unlike early no-code tools that were essentially “wrappers” for spreadsheets, Imagine.bo builds apps on SDE-level engineering standards with robust scalable SaaS architecture, allowing them to handle high traffic and complex data just like a traditionally coded app.

Q: Do I own the code and data generated by the platform?
A: Absolutely. One of the key advantages of SDE-level no-code platforms is end-to-end ownership. You are not locked into a “black box”; the platform generates standard code structures that secure your data and intellectual property.

Q: Is Imagine.bo suitable for people with zero technical experience?
A: Yes. The workflow is designed for non-technical founders. If you can clearly define your idea, target audience, and desired outcome, the AI app builder handles the syntax, architecture, and deployment.

Q: How does security work on these platforms?
A: Security is baked into the foundation. Features like user authentication (Google login, etc.), data encryption, and privacy controls are automatically integrated when the AI architects your application, ensuring you meet compliance standards immediately.

Q: Can I build mobile apps with this technology?
A: Yes, many modern SDE-level platforms support mobile-first development, allowing you to deploy PWA (Progressive Web Apps) or native applications for iOS and Android directly from your project.

Q: What happens if I need a feature the AI doesn’t understand?
A: This is where the “SDE-level” aspect shines. These platforms usually allow for “low-code” intervention, meaning you or a developer can inject snippets of custom code if absolutely necessary, preventing you from hitting the “no-code wall.” You can read more about no-code vs low-code differences here.
