Securing AI-generated web apps requires moving beyond traditional AppSec to a layered, secure-by-design strategy. AI accelerates development, but it often introduces risks such as insecure defaults and hallucinated logic. To build robustly, developers must enforce authentication at the infrastructure level, validate all AI outputs, and embed automated security testing into CI/CD pipelines. This guide lays out a roadmap for capturing AI’s productivity gains while maintaining enterprise-grade protection and regulatory compliance.

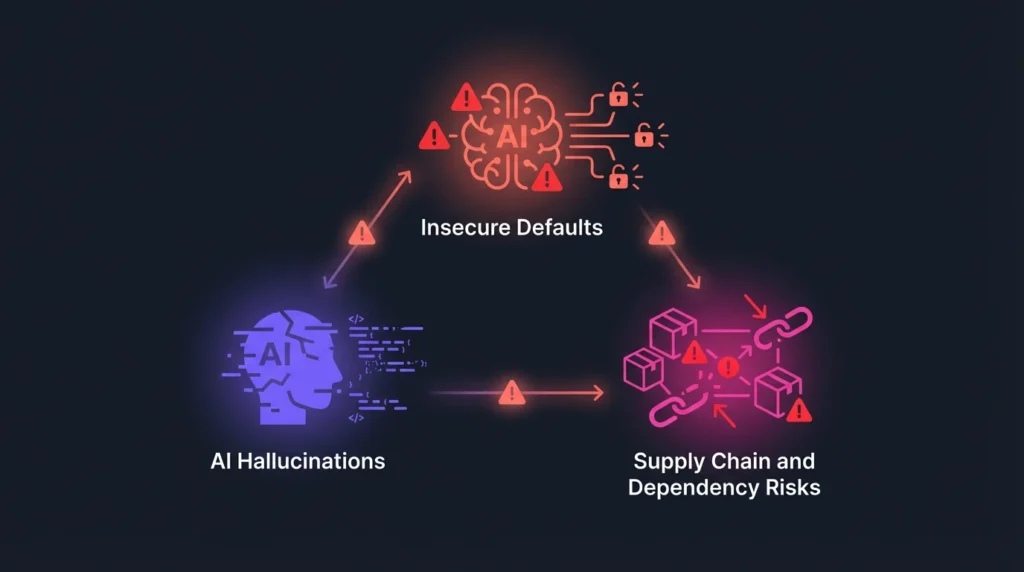

Unique Security Risks of AI-Generated and No-Code Web Apps

While AI assists in generating the routine building blocks of applications like identity management and database wrappers, it also introduces systemic security gaps that traditional AppSec models often miss.

The Prevalence of Insecure Defaults and Functionality Over Safety

AI models, trained on vast public datasets, frequently reproduce insecure practices such as outdated cryptographic functions or SQL queries without proper parameterization. This results in code that is optimized for functionality rather than security. Research shows that more than 40% of AI-generated applications inadvertently expose sensitive user data to the public internet, revealing a systemic issue across platforms.

In no-code and low-code platforms, this risk is amplified when tools prioritize rapid deployment over data protection, often defaulting to accessible, insecure configurations. For instance, a generated database table might be publicly accessible for both reading and writing, exposing PII, financial data, or authentication details.

The Danger of AI Hallucinations and Anti-Pattern Replication

A core technical challenge is that AI-generated code might suggest working functions while ignoring security best practices, or replicate common anti-patterns across codebases at scale. If AI hallucinates and removes one crucial line of code, such as an access control check, an entire system can be exposed to public crawlers. Furthermore, relying heavily on AI can create a knowledge gap, making it harder for developers to identify and fix problems later because they may lack familiarity with the underlying frameworks.

Supply Chain and Dependency Risks

AI assistants often suggest external libraries and packages based on their prevalence in training data, not based on security track records or maintenance status. This lack of deliberate evaluation increases the risk of including vulnerable or malicious dependencies. Attackers may even manipulate AI models by flooding the internet with content promoting malicious libraries, causing the AI to recommend them. Tools like OpenText™ Core Software Composition Analysis (SCA) are vital for mitigating open-source and model supply chain risk by scanning for outdated dependencies and tracking AI model risks.
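The deliberate evaluation described above can be partly automated before a dedicated SCA tool runs. The following is a minimal sketch in Python (standard library only), assuming a hypothetical `REVIEWED_PACKAGES` allowlist and a simplified `name==version` requirement format. It flags AI-suggested dependencies that are unpinned or that nobody has reviewed; it does not detect known vulnerabilities.

```python
import re

# Hypothetical allowlist of packages a human has already reviewed.
REVIEWED_PACKAGES = {"requests", "flask"}

# Matches exact pins such as "name==1.2.3" (a simplification of real requirement syntax).
PIN_PATTERN = re.compile(r"([A-Za-z0-9_.-]+)==([A-Za-z0-9_.!+-]+)")


def vet_requirements(lines):
    """Return (requirement, reason) pairs that need human review."""
    findings = []
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = PIN_PATTERN.fullmatch(line)
        if match is None:
            findings.append((line, "not pinned to an exact version"))
            continue
        name = match.group(1).lower()
        if name not in REVIEWED_PACKAGES:
            findings.append((line, "package has not been reviewed"))
    return findings
```

A check like this could run in CI so that a new dependency suggested by an assistant fails the build until someone reviews it and adds it to the allowlist.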

Threat Models Specific to AI-Driven Architectures

Securing modern web applications requires addressing threats specific to the integration of Large Language Models (LLMs) and AI components.

Adversarial LLM Attacks

When applications call out to an AI service, that area often contains less mature logic and less rigorous validation than the rest of the system, making it a hotspot for vulnerabilities. Critical risks associated with LLMs include:

- Prompt Injection: Attackers manipulate input to influence the model’s behavior, potentially stealing sensitive data or manipulating code suggestions shown to other users.

- Training Data Poisoning: Malicious data corrupts the training set, leading to unpredictable or biased LLM behavior. Mitigation requires using trusted data sources and reviewing model outputs for discrepancies.

- Insecure Output Handling: Improper sanitization of LLM-generated output can introduce vulnerabilities into the downstream application code.

- Excessive Privileges: Granting LLMs too much autonomy or overly permissive APIs can result in significant security risks if they are exploited or misused.
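Two of the risks above, insecure output handling and excessive privileges, can be reduced by treating model output as untrusted input. The sketch below uses only the Python standard library and assumes the model is asked to reply with a JSON object of the form `{"action": ..., "args": {...}}`. The action names in `ALLOWED_ACTIONS` are hypothetical.

```python
import html
import json

# Hypothetical allowlist: the only actions the model may request,
# mapped to the exact argument names each action accepts.
ALLOWED_ACTIONS = {
    "search_docs": {"query"},
    "get_order_status": {"order_id"},
}


def parse_model_action(raw_output):
    """Parse model output as JSON and reject anything outside the allowlist."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None  # not valid JSON: refuse rather than guess
    if not isinstance(data, dict):
        return None
    action = data.get("action")
    args = data.get("args")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return None
    if not isinstance(args, dict) or set(args) != ALLOWED_ACTIONS[action]:
        return None
    if not all(isinstance(v, str) and len(v) <= 200 for v in args.values()):
        return None
    return {"action": action, "args": args}


def render_model_text(raw_output):
    """Escape model text before placing it in an HTML page."""
    return html.escape(raw_output, quote=True)
```

Because the allowlist is the only path to a side effect, a successful prompt injection can at most trigger an action the application already permits.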

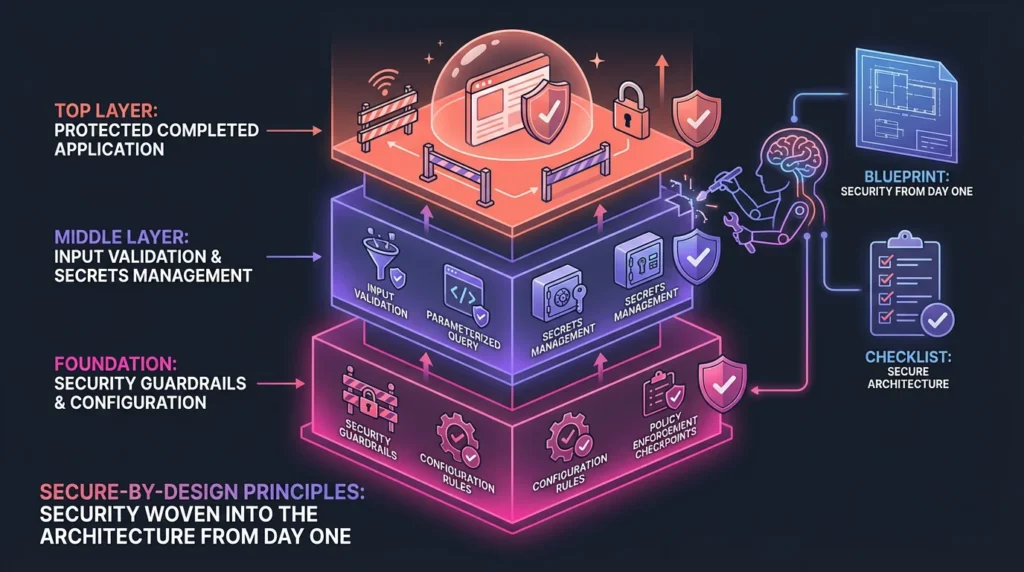

Secure-by-Design Principles for AI App Generation

The solution is to establish security guardrails that guide the AI toward secure implementations from the initial generation phase, rather than relying on late-stage human review.

Configuring AI Tools for Security-First Development

Developers must configure AI tools with universal rules and context so that security guardrails apply by default. Instead of relying on manual reminders in each prompt, a rules configuration builds the security requirements into the project itself.

Key implementation details for configuration rules include:

- Input Validation: Always implement proper input validation and sanitization for all user inputs.

- Database Queries: Always use parameterized queries and never string concatenation for database queries.

- Secrets Management: Never hardcode secrets, API keys, or passwords in source code.

- Error Handling: Implement fail-secure patterns (deny by default) and log security events appropriately without exposing sensitive data.
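To make the database and error-handling rules concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `users` table and the function names are assumptions made for illustration. The query uses a `?` placeholder so the driver binds the value, and the access check denies by default when anything goes wrong.

```python
import sqlite3


def find_user(conn, email):
    """Look up a user with a parameterized query; the driver binds the value."""
    cur = conn.execute("SELECT id, email FROM users WHERE email = ?", (email,))
    return cur.fetchone()


def can_view_report(conn, email):
    """Fail secure: any database error or missing record results in denial."""
    try:
        return find_user(conn, email) is not None
    except sqlite3.Error:
        return False  # deny by default; log the event without echoing the input


# Demo database held in memory.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.execute("INSERT INTO users (email) VALUES (?)", ("ada@example.com",))
```

With string concatenation, an input such as `' OR '1'='1` would change the meaning of the query. With a bound parameter it is compared as a literal string and matches nothing.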

For applications dealing with highly regulated data, these rules must be highly specific. For Healthcare/HIPAA Applications, rules should specify encrypting all Protected Health Information (PHI) at rest and in transit using AES-256 and TLS 1.2+, and logging all data access for auditing. For Financial Services Applications, rules should mandate adherence to PCI-DSS requirements for payment data and require multi-factor authentication using standards like FIDO2/WebAuthn.
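The TLS 1.2+ requirement mentioned above can be enforced in code as well as in a rules file. A minimal sketch for outbound connections, using Python's standard `ssl` module (the function name is hypothetical):

```python
import ssl


def make_tls_context():
    """Client-side context that verifies certificates and refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context()  # certificate and hostname checks are on by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The returned context can be passed to standard-library clients that accept an `ssl.SSLContext`. Encryption at rest with AES-256 needs a vetted cryptography library or a managed storage feature and is not shown here.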

Writing Specific, Security-Focused Prompts

The specificity of prompts directly impacts the security of the generated code. Instead of vague requests like “Create a login function,” use detailed, security-conscious prompts like, “Create a secure login function with proper password hashing, rate limiting, and session management following OWASP guidelines”.

Example of a defensive prompt: when auditing existing AI-generated logic, phrase the request defensively, for example: “Audit our entire Node.js codebase to verify that every database query is implemented using parameterized queries or an ORM, thereby preventing SQL injection. Identify instances where queries are built by concatenating user input and provide a detailed report with recommendations for remediating those vulnerabilities”.

Imagine.bo: Architecting Security by Default

Modern platforms, such as Imagine.bo, exemplify how to implement secure-by-design principles at the architectural level without inhibiting speed. By leveraging AI-generated architecture, Imagine.bo can ensure that security requirements—like utilizing parameterized queries or establishing appropriate encryption layers—are woven into the application’s foundational blueprints before the first line of functional code is written. This prevents the insecure defaults that plague many other AI and no-code tools.

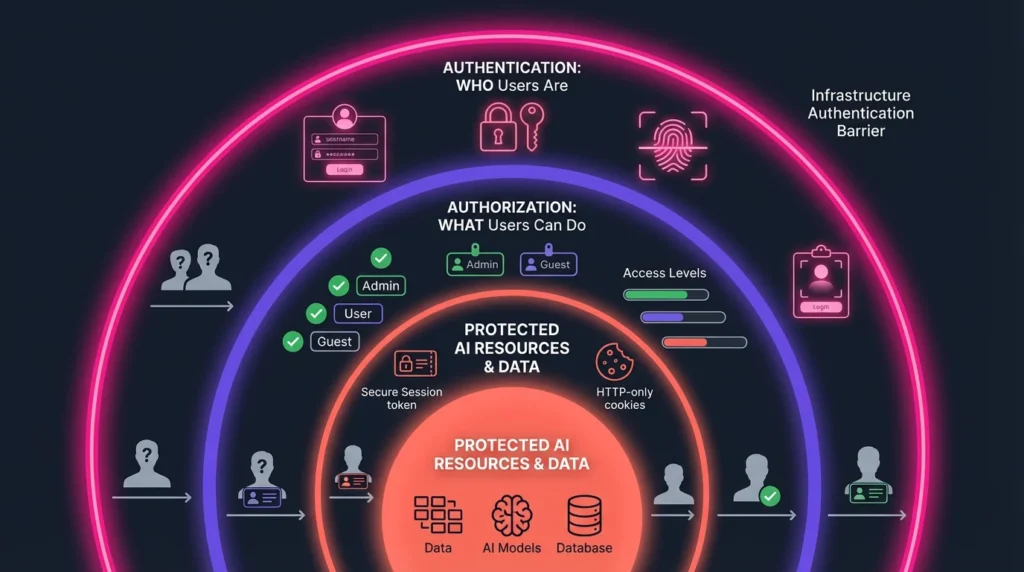

Authentication, Authorization, and Role-Based Access Control

A secure application must reliably confirm who users are (Authentication) and what they are allowed to do (Authorization).

Enforcing Authentication at the Infrastructure Layer

In AI-generated applications, where code review of high volumes of generated code is impractical, a core strategy is to isolate the codebase from non-authenticated users entirely. This means enforcing authentication on the infrastructure layer (e.g., using a reverse proxy like NGINX) to ensure that a non-authenticated request cannot trigger even a single line of AI-generated code. This architectural approach ensures authentication works regardless of what the AI generates or hallucinates within the application codebase.
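In production this gate would normally live in a reverse proxy such as NGINX, outside the application process. The sketch below shows the same idea in-process as a Python WSGI wrapper, purely to illustrate the control flow: the generated code is never called unless the request is authenticated. `VALID_TOKENS` and `generated_app` are hypothetical stand-ins, and a real gateway would verify a session or token with the identity provider.

```python
import hmac

# Hypothetical token store for the demo only.
VALID_TOKENS = {"s3cr3t-demo-token"}


def generated_app(environ, start_response):
    """Stands in for AI-generated application code."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"sensitive dashboard"]


def require_auth(app):
    """Wrap a WSGI app so unauthenticated requests never reach it."""
    def gate(environ, start_response):
        header = environ.get("HTTP_AUTHORIZATION", "")
        token = header[len("Bearer "):] if header.startswith("Bearer ") else ""
        # Constant-time comparison on bytes avoids leaking token contents via timing.
        ok = any(
            hmac.compare_digest(token.encode("utf-8"), valid.encode("utf-8"))
            for valid in VALID_TOKENS
        )
        if not ok:
            start_response("401 Unauthorized", [("Content-Type", "text/plain")])
            return [b"authentication required"]
        return app(environ, start_response)
    return gate


def call_app(app, environ):
    """Tiny helper: invoke a WSGI app and return (status, body)."""
    captured = []
    body = app(environ, lambda status, headers: captured.append(status))
    return captured[0], b"".join(body)


protected_app = require_auth(generated_app)
```

Whatever the wrapped application does internally, a request without a valid token receives the 401 response from the gate and nothing else.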

Implementing Secure Access Controls

It is best practice to delegate authentication to an identity platform such as Auth0 rather than writing security boilerplate from scratch, which frees developers to focus on core features.

- Role-Based Access Control (RBAC): The backend must enforce proper authorization by checking user roles and permissions on every protected route. This prevents critical data leakage, such as one user gaining access to sensitive data (like paychecks in an HR app) that they shouldn’t see.

- Session Protection: Session tokens should be stored in HTTP-only, Secure cookies rather than localStorage, using proper cookie attributes like SameSite=Lax or Strict.

- Password Management: Passwords must be hashed with strong algorithms like bcrypt or Argon2, and never stored in plaintext.
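The three controls above can be sketched with the Python standard library alone. Two assumptions are worth stating plainly: the role and permission names are hypothetical, and PBKDF2-HMAC-SHA256 stands in for bcrypt or Argon2, which require third-party packages. The iteration count is an assumption and should follow current guidance.

```python
import hashlib
import hmac
import os
from http.cookies import SimpleCookie

# Hypothetical role-to-permission map; anything not listed is denied.
ROLE_PERMISSIONS = {
    "employee": {"paycheck:read_own"},
    "hr_admin": {"paycheck:read_own", "paycheck:read_any"},
}


def is_allowed(role, permission):
    """Deny by default: unknown roles and unlisted permissions return False."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def can_read_paycheck(user_id, role, owner_id):
    """Owners need read_own; everyone else needs read_any."""
    if user_id == owner_id:
        return is_allowed(role, "paycheck:read_own")
    return is_allowed(role, "paycheck:read_any")


def hash_password(password, salt=None, iterations=600_000):
    """Return (salt, digest) using PBKDF2-HMAC-SHA256 with a random 16-byte salt."""
    salt = salt if salt is not None else os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, digest


def verify_password(password, salt, expected, iterations=600_000):
    """Recompute the digest and compare in constant time."""
    _, digest = hash_password(password, salt, iterations)
    return hmac.compare_digest(digest, expected)


def session_cookie_header(session_id):
    """Build a Set-Cookie value with HttpOnly, Secure and SameSite=Lax."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["httponly"] = True
    cookie["session"]["secure"] = True
    cookie["session"]["samesite"] = "Lax"
    cookie["session"]["path"] = "/"
    return cookie["session"].OutputString()
```

The authorization check belongs on the server for every protected route; hiding a button in the UI does not count as access control.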

API Security, Data Validation, and Abuse Prevention

APIs are the lifelines of AI apps and are frequently targeted by attackers. Robust API security is essential, particularly for AI-driven components that might involve passing user-controlled data to models.

Server-Side Input Validation and Sanitization

Never trust user input, whether it comes from a form, an API, or an AI model output. Validation must occur on the server side.

- Implementation: Use libraries like Zod or express-validator to validate data types, lengths, and formats, and actively sanitize inputs to prevent injection attacks.

- SQL Injection Prevention: Use parameterized database queries or an Object-Relational Mapper (ORM) to avoid constructing queries by concatenating user input.

- XSS Prevention: In frontends like React, rely on the framework’s default output escaping mechanisms and avoid using dangerouslySetInnerHTML with unsanitized content. If raw HTML must be rendered, sanitize it first with a library like DOMPurify.
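Libraries such as Zod or express-validator express these checks declaratively. The underlying logic looks roughly like the following standard-library Python sketch, where the signup fields and limits are assumptions chosen for illustration. Only allowlisted fields survive, so an unexpected key such as `is_admin` is silently dropped.

```python
import re

# Deliberately simple format check; real email validation is looser than any regex.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def validate_signup(payload):
    """Return (clean_data, errors) for a hypothetical signup request body."""
    if not isinstance(payload, dict):
        return {}, {"body": "must be a JSON object"}
    clean, errors = {}, {}

    email = payload.get("email")
    if isinstance(email, str) and len(email) <= 254 and EMAIL_RE.fullmatch(email):
        clean["email"] = email.lower()
    else:
        errors["email"] = "must be a valid email address"

    name = payload.get("display_name")
    if isinstance(name, str) and 1 <= len(name.strip()) <= 50:
        clean["display_name"] = name.strip()
    else:
        errors["display_name"] = "must be 1 to 50 characters"

    age = payload.get("age")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(age, int) and not isinstance(age, bool) and 13 <= age <= 120:
        clean["age"] = age
    else:
        errors["age"] = "must be an integer between 13 and 120"

    return clean, errors
```

The handler should proceed only when `errors` is empty and should pass `clean_data`, never the raw payload, to the rest of the application.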

Abuse Prevention and Hardening Endpoints

Key controls are needed to mitigate denial-of-service (DoS and DDoS) and brute-force attacks.

- Rate Limiting: Implement rate limiting on API endpoints (e.g., using express-rate-limit or services like Cloudflare) to prevent high-velocity attacks and excessive traffic from single IPs.

- Secure Headers: Set HTTP secure headers to protect against common web attacks. This includes Content-Security-Policy (CSP) to restrict content sources, X-Frame-Options to protect against clickjacking, and X-Content-Type-Options (set to nosniff) to stop browsers from MIME-sniffing content.
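Packages such as express-rate-limit or an edge service handle this in practice. As an illustration of the mechanism, here is a minimal in-memory fixed-window limiter and a header helper in standard-library Python. The class name, the limits, and the specific header values (for example `default-src 'self'`) are assumptions, and a multi-instance deployment would need a shared store rather than a local dictionary.

```python
import time


class FixedWindowRateLimiter:
    """Allow at most `limit` requests per client in each `window_seconds` window."""

    def __init__(self, limit, window_seconds, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._windows = {}  # client key -> (window start, request count)

    def allow(self, client_key):
        now = self.clock()
        start, count = self._windows.get(client_key, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0  # window expired: start a new one
        if count >= self.limit:
            self._windows[client_key] = (start, count)
            return False
        self._windows[client_key] = (start, count + 1)
        return True


# Example values only; a real Content-Security-Policy must match the app's assets.
SECURITY_HEADERS = {
    "Content-Security-Policy": "default-src 'self'",
    "X-Frame-Options": "DENY",
    "X-Content-Type-Options": "nosniff",
}


def with_security_headers(headers):
    """Return a copy of the response headers with the security headers applied."""
    merged = dict(headers)
    merged.update(SECURITY_HEADERS)
    return merged
```

A rejected request would typically receive an HTTP 429 response. Stricter limits on login and password-reset endpoints help against brute-force attempts.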

Data Privacy, Encryption, and Secure Cloud Deployment

AI-generated applications often handle large volumes of sensitive data, making robust Data Privacy and encryption a core security requirement.

Encryption and Data Minimization

Encryption must be implemented for data both in transit and at rest. In regulated sectors this is a compliance requirement, typically met in healthcare by encrypting PHI with AES-256 at rest and TLS 1.2+ in transit. Logging all data access for auditing purposes is also essential, and it supports the “minimum necessary” principle for data access.

- HTTPS: Enforce HTTPS and configure HTTP Strict Transport Security (HSTS) to prevent downgrade attacks and ensure secure communication.

- Data Exposure Mitigation: When using server-side rendering (SSR) or similar methods, ensure that only non-sensitive data is sent to the client. Sensitive data, such as private identifiers or security tokens, should be stripped or masked before rendering responses.
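One way to apply the data exposure rule is to serialize records through an allowlist rather than deleting known-sensitive keys, so a newly added column stays private until someone opts it in. A minimal sketch in Python, with hypothetical field names:

```python
# Hypothetical allowlist of fields that are safe to send to the browser.
PUBLIC_USER_FIELDS = ("id", "display_name", "plan")


def to_public_user(record):
    """Copy only allowlisted fields; everything else stays on the server."""
    return {field: record[field] for field in PUBLIC_USER_FIELDS if field in record}


def mask_email(email):
    """Mask an email for display, keeping the first character and the domain."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"
    return local[0] + "***@" + domain
```

The same function can sit in front of server-side rendered props and JSON API responses so both paths share one definition of what is public.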

Secrets Management and Secure Deployment

The accidental exposure of secrets (e.g., API keys or database credentials) can run up massive bills or compromise entire systems.

- Prevent Hardcoding: Keep all secrets out of code by using environment variables, and ensure .env files are in .gitignore to prevent accidental version control commits.

- Secure Storage: Use the deployment platform’s secret management features for storing sensitive data securely, such as Vercel’s Environment Variables. If a hardcoded secret is ever processed by a third-party AI tool, it must be immediately revoked or rotated.

- Secure Cloud Deployment: Use secure deployment mechanisms provided by platforms and ensure all custom domains are secured with HTTPS and proper TLS certificates.
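Reading secrets from the environment is simple, but it helps to fail fast when one is missing instead of falling back to a default baked into the code. A minimal Python sketch follows; the function name and the variable name `PAYMENT_API_KEY` are hypothetical.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised at startup when a required secret is not configured."""


def require_secret(name, environ=None):
    """Return the secret stored under `name`, or raise if it is absent or empty."""
    env = os.environ if environ is None else environ
    value = env.get(name, "")
    if not value:
        # Name the variable, never its value, in error messages and logs.
        raise MissingSecretError(f"required secret {name} is not set")
    return value
```

Calling `require_secret("PAYMENT_API_KEY")` once at startup surfaces a misconfigured deployment immediately, rather than at the first request that needs the key.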

Compliance Readiness (GDPR, SOC 2) Explained Simply but Accurately

Compliance is achieved not through a single checklist, but through rigorous implementation of technical controls that meet regulatory standards.

General Data Protection Regulation (GDPR)

GDPR focuses heavily on data privacy and the protection of personal data, often referred to as Personally Identifiable Information (PII).

- Key Controls: GDPR readiness calls for encrypting data (especially PII) both at rest and in transit. It mandates transparency, ensuring users know what data is stored and giving them control over their information. Logging all data access for auditing is crucial for demonstrating compliance with the data minimization principle.

System and Organization Controls 2 (SOC 2)

SOC 2 focuses on internal controls related to the Trust Services Criteria: Security, Availability, Processing Integrity, Confidentiality, and Privacy.

- Key Controls: Achieving SOC 2 readiness is intrinsically linked to the underlying technical security practices deployed. Requirements such as continuous monitoring (Security), robust access controls (Security/Confidentiality), incident response plans (Security/Availability), and structured logging that tracks security events (Security/Processing Integrity) directly support SOC 2 criteria.

Platforms like Imagine.bo assist builders in achieving compliance readiness by incorporating these necessary technical controls into the generated application architecture and deployment pipeline from day one, rather than requiring complex, post-generation refactoring.

Continuous Monitoring, Logging, and Human-in-the-Loop Safeguards

Since AI enables code to ship faster, monitoring must be robust enough to detect issues as quickly as they are introduced.

Automated Security Testing in the Pipeline

Automated security testing must run as fast as the AI generates code. Dynamic Application Security Testing (DAST) complements AI-generated code by testing actual application behavior and catching vulnerabilities that only appear when code is running.

- Shift-Left Integration: Integrate security scanning into Continuous Integration/Continuous Delivery (CI/CD) pipelines, running automated scans on every pull request or deployment. Solutions like StackHawk provide DAST that can be run locally during active development for immediate feedback. OpenText also offers integrated solutions, including Static Application Security Testing (SAST) and DAST, designed to operate at DevOps speed.

- Real-time Feedback: Tools that expose a Model Context Protocol (MCP) server, such as the StackHawk MCP server, allow AI assistants to access live security data, validate configurations, retrieve vulnerability data, and suggest fixes without manual tool switching.

Structured Logging and Incident Response

AI-generated applications benefit from implementing structured logging for security-relevant events, enabling established platforms (like Datadog, Splunk, or Elastic Stack) to automatically parse and alert on anomalous patterns.
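A structured security event is simply one machine-parseable record per line with consistent field names. The sketch below uses Python's standard `json` and `logging` modules. The event name, the field names, and the `SENSITIVE_KEYS` list are assumptions chosen for illustration.

```python
import json
import logging
from datetime import datetime, timezone

# Hypothetical list of keys whose values must never reach the logs.
SENSITIVE_KEYS = {"password", "token", "authorization", "ssn"}

logger = logging.getLogger("security")


def security_event(event, **fields):
    """Build one JSON log line, redacting values stored under sensitive keys."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event": event,
    }
    for key, value in fields.items():
        record[key] = "[REDACTED]" if key.lower() in SENSITIVE_KEYS else value
    return json.dumps(record, sort_keys=True, default=str)


def log_security_event(event, **fields):
    """Emit the event through the standard logging pipeline."""
    logger.warning(security_event(event, **fields))
```

For example, `log_security_event("login_failed", user_id="u1", ip="203.0.113.7")` produces a line that a log platform can index by `event` and alert on when failures from one address spike.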

When incidents occur, the investigation must determine if the vulnerability was in AI-generated or human-written code, check for similar patterns across the codebase, and assess if the problem originated from a flawed AI configuration. Immediate containment actions include isolating affected systems and updating AI assistant configurations to prevent similar issues in the future.

The Human-in-the-Loop Safeguard

Despite automation, human oversight remains critical. Certain complex areas require human judgment:

- Security Architecture Decisions: Architects must select the right patterns for their specific context.

- Threat Modeling: Understanding how attackers might target specific business logic is a task for human expertise.

- Compliance Interpretation: Mapping regulatory requirements to technical controls often requires domain expertise.

This human-in-the-loop requirement is supported by modern platforms like Imagine.bo, which integrate human developer fallback capabilities. Technical leaders can review and sign off on deployed tools by visualizing API endpoints and database queries, without having to check every line of AI-generated code.

Conclusion

Securing AI-generated web apps is achieved through a layered defense strategy that addresses unique risks introduced by generative code. By deliberately configuring AI tools for security-first development, integrating automated testing like DAST into CI/CD pipelines, enforcing robust infrastructure-level security (e.g., authentication isolation), and maintaining high developer security skills, organizations can harness AI’s productivity benefits without compromising security.
