We are living through a quiet revolution. It used to be that if you wanted to build software capable of making complex decisions, such as predicting user behavior, automating financial approvals, or generating marketing assets, you needed a dedicated team of engineers, a massive budget, and months of development time.

Today? You just need a laptop, a strong internet connection, and a weekend.

No-code platforms have fundamentally changed who gets to build the future. Founders, marketers, interior designers, and operators are now launching AI-powered products without writing a single line of code. That accessibility is incredibly powerful. It democratizes innovation in a way we haven’t seen since the early days of the internet. The power to build software is shifting from a technical elite to a creative majority.

Ethical AI isn’t just a concern for Google, OpenAI, or massive research labs. It matters even more in the no-code space, because abstraction, the very thing that makes no-code so fast, can hide serious risks.

If you are building AI-powered workflows, apps, or decision systems, ethical design cannot be an afterthought. It has to be the foundation. This guide breaks down how to build ethical AI into your no-code projects. No high-level theory: just practical steps to spot risks, design for trust, and build systems that are fair, transparent, and secure.

What “Ethical AI” Actually Means for No-Code Builders

When we hear “Ethical AI,” it’s easy to zone out. It sounds like a compliance seminar or a legal hurdle. But for a no-code builder, ethical AI is really about product quality. An unethical product is a bad product: one that users won’t trust, one that creates legal liabilities, and one that eventually fails in the market.

In practice, ethical AI usually boils down to four non-negotiable pillars:

Fairness: The “Who Does This Hurt?” Test

Fairness is about avoiding unjust or discriminatory outcomes. Does your app work as well for a user in rural India as it does for a user in downtown San Francisco? Does your automated loan approval workflow reject people based on proxies for race or gender? In no-code, where we often plug in pre-made models, fairness means ensuring the tool doesn’t carry invisible baggage that hurts specific groups of people.

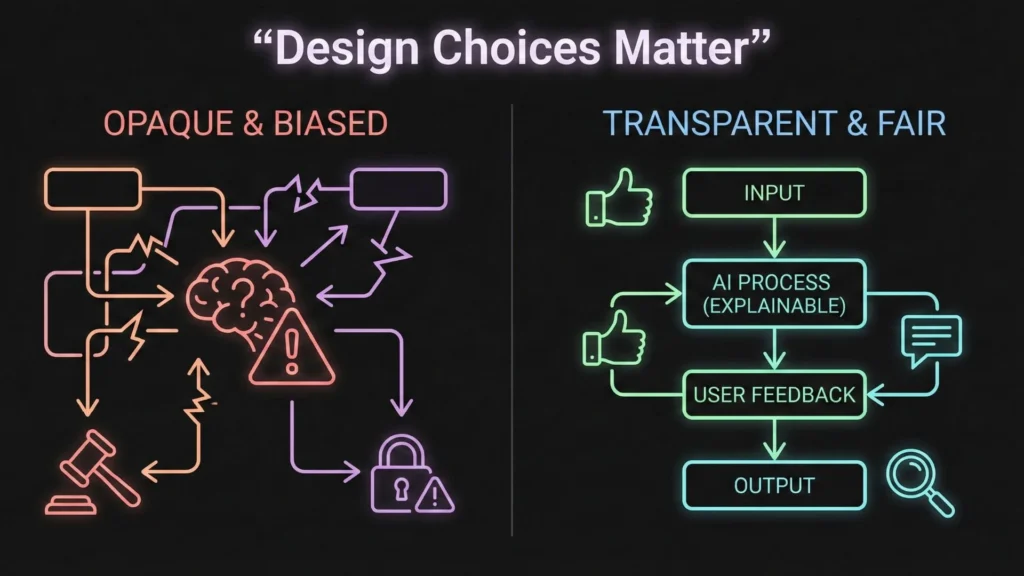

Transparency: The “Glass Box” Principle

Can you explain why the AI did what it did? Transparency doesn’t mean showing users the raw code (which doesn’t exist in no-code anyway). It means making the decision-making process understandable. If your app recommends a product, does it tell the user why? If it rejects an application, is the reason clear?

Accountability: The “Buck Stops Here” Rule

Who is responsible when the AI makes a mistake? (And it will.) In a no-code environment, you cannot blame the platform provider. If you build the workflow, you own the outcome. This is why governance matters on citizen-developer platforms: even non-technical builders need protocols in place to catch and correct errors.

Privacy: The “Data Respect” Standard

This goes beyond encryption. It’s about consent. Are you collecting data because you need it, or just because you can? Are you preventing user data from being fed into third-party models without permission? Privacy is the currency of trust.

Why No-Code Changes the Risk Profile

Here is the uncomfortable truth: no-code platforms can make unintentional harm more likely.

This isn’t because no-code builders are malicious; it’s because the tools are designed to remove friction. But sometimes, friction is good. Friction forces us to slow down and ask, “Wait, should we actually do this?”

When you write code from scratch, you see the data-processing pipeline and every branch of the logic. In no-code, you drag in a block labeled “Analyze Sentiment” or “Generate Image.” You don’t see the training data behind that block, and you don’t see how the model weighs its inputs.

The “Black Box” Problem

Many no-code AI systems function as total black boxes. Inputs go in, magic happens, and outputs come out. This is fine for generating a funny poem, but it’s dangerous for medical advice, hiring decisions, or financial scoring. If you can’t trace the logic, you can’t fix it when it breaks. If a user asks, “Why was my account flagged?” and your only answer is “The AI said so,” you have lost that customer.

Blind Trust in Pre-Trained Models

Most no-code tools rely on APIs from major providers (such as OpenAI, Anthropic, or Stability AI, the company behind Stable Diffusion). These models are powerful, but they are not neutral. They inherit the biases of their training data, much of which was scraped from the internet.

For example, an image-generation model trained mostly on Western art history might struggle to depict non-Western festivals accurately. If you are building a global travel app, that is a product failure, not just an ethical one. Catching gaps like this requires rigorous testing before launch, even if you never write a line of code.

Unique Ethical Challenges You Will Face

Let’s get specific. If you are building an app today, here are the three traps you are most likely to fall into.

Trap #1: Bias Amplification

Bias isn’t just about racism or sexism (though those are huge issues); it’s also about economic and cultural bias.

Imagine you build a no-code tool that helps realtors write property descriptions. The AI model you use might have been trained on luxury listing data. As a result, it might describe a modest apartment in over-the-top, grandiose language that feels fake or misleading.

- The Fix: Test your model on “edge cases” (inputs that fall outside the profile of your “average” user) to see whether the AI hallucinates or falls back on stereotypes.

Trap #2: The “Set It and Forget It” Mentality

No-code creates a feeling of “done.” Once a workflow is live, we tend to move on to the next feature. But AI behavior drifts: providers update their models, and user behavior changes. A system that was fair yesterday might be exploited tomorrow.

- The Fix: Monitor continuously. Review a sample of your AI’s outputs every week to make sure quality hasn’t degraded.
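A weekly review can start as a random sample of recent outputs pulled into a spreadsheet. The sketch below assumes you can export your AI outputs as a list of records with hypothetical `created_at` and `text` fields; it picks up to `sample_size` outputs from the last seven days, so the review isn’t biased toward whatever happened most recently.

```python
import random
from datetime import datetime, timedelta


def weekly_review_sample(outputs, now, sample_size=20, seed=None):
    """Return a random sample of outputs created in the 7 days before `now`.

    `outputs` is assumed to be a list of dicts with a hypothetical
    `created_at` datetime field, e.g. exported from your app's log table.
    """
    cutoff = now - timedelta(days=7)
    recent = [o for o in outputs if o["created_at"] >= cutoff]
    if len(recent) <= sample_size:
        return recent  # Quiet week: review everything.
    rng = random.Random(seed)  # A fixed seed makes a review batch reproducible.
    return rng.sample(recent, sample_size)


# Usage with made-up log entries, one per day for 30 days.
now = datetime(2024, 6, 10)
log = [{"created_at": now - timedelta(days=d), "text": f"output {d}"} for d in range(30)]
batch = weekly_review_sample(log, now, sample_size=5, seed=42)
for item in batch:
    print(item["created_at"].date(), item["text"])
```

Random sampling matters here: reviewing only the latest or the most-complained-about outputs can hide slow, quiet drift.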

Trap #3: Misuse at Scale

Because no-code lowers the barrier to entry, it also lowers the barrier to misuse. You might build a tool for generating marketing copy, but users might repurpose it to generate spam or misinformation.

- The Fix: Build guardrails. Following no-code app security best practices, such as rate limiting and content filters, lets you limit how your tool can be used and makes abuse harder.
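Most no-code platforms expose rate limits and filters as settings, but the logic behind them is simple. Here is a minimal sketch of both guardrails, assuming each request carries a user ID. The `BLOCKED_TERMS` list is a hypothetical placeholder; a keyword check is crude, and a maintained moderation service will catch far more than this.

```python
import time
from collections import defaultdict, deque

# Hypothetical placeholder list; replace with your own policy or a moderation service.
BLOCKED_TERMS = ("buy followers", "guaranteed cure")


class RateLimiter:
    """Allow at most `max_requests` per user in any rolling `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.history = defaultdict(deque)  # user_id -> timestamps of allowed requests

    def allow(self, user_id, now=None):
        now = time.monotonic() if now is None else now
        stamps = self.history[user_id]
        # Drop timestamps that have left the rolling window.
        while stamps and now - stamps[0] >= self.window_seconds:
            stamps.popleft()
        if len(stamps) >= self.max_requests:
            return False
        stamps.append(now)
        return True


def passes_content_filter(text):
    """Crude keyword check on the user's request before it reaches the AI."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


limiter = RateLimiter(max_requests=3, window_seconds=60)
print([limiter.allow("user-1", now=t) for t in (0, 1, 2, 3)])  # [True, True, True, False]
print(limiter.allow("user-1", now=61))  # True: the requests at t=0 and t=1 have expired
print(passes_content_filter("Write a product launch email"))  # True
```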

How AI Impacts Your Stakeholders

When we build, we often think about the “Admin” (us) and the “User.” But the ecosystem is bigger than that.

The Builders (You)

No-code gives you leverage. A single person can now do the work of a ten-person agency. But this means you also take on the ethical liability of a ten-person agency. You are no longer just a “designer” or “founder”; you are an AI ethics officer. You have to verify the tools you use.

The End Users

Users crave convenience, but they fear the unknown. They are increasingly savvy about data rights. If your app feels “creepy,” as if it knows too much about them without asking, they will leave. Conversely, clear communication about how you use AI can be a competitive advantage, because it shows you respect them.

Society at Large

This sounds grand, but it’s true. Does your tool reinforce inequality? For example, if you build a no-code recruiting app that automatically filters resumes based on “cultural fit” keywords, you might be accidentally filtering out diverse candidates who use different terminology.

When building Minimum Viable Products, it is tempting to cut corners on these considerations to launch faster. However, ignoring societal impact at the MVP stage often bakes these problems into the foundation of your company.

Practical Guide: Bias Detection and Mitigation

Okay, enough theory. How do you actually do this in a no-code environment?

Step 1: Audit Your Data (Even if You Didn’t Collect It)

If you are fine-tuning a model or using a database to feed context to an AI (as in Retrieval-Augmented Generation, or RAG), look closely at your data source.

- Ask: Who is missing?

- Example: If you build a customer support bot and feed it chat logs only from your US customers, it will likely fail your European or Asian customers. It might even misread their politeness norms as “confusion.”

- Action: Actively seek out diverse data to “teach” your no-code system.
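Answering “Who is missing?” starts with counting. This minimal sketch assumes you can export your source data (chat logs, documents, form responses) as records with a field you want to audit, here a hypothetical `region` column. It reports each group’s share and any group that is entirely absent. Note that you define the expected groups, so the code can only find gaps you think to look for.

```python
from collections import Counter


def representation_report(records, field, expected_groups):
    """Share of records per group, plus the groups with no records at all.

    `records` is a list of dicts; `field` names the attribute to audit.
    Records missing the field are counted under "unknown".
    """
    counts = Counter(r.get(field, "unknown") for r in records)
    total = sum(counts.values())
    shares = {g: (counts[g] / total if total else 0.0) for g in expected_groups}
    missing = [g for g in expected_groups if counts[g] == 0]
    return shares, missing


# Usage: a hypothetical export of 10 support chats.
chat_logs = [{"region": "US"}] * 8 + [{"region": "EU"}] * 2
shares, missing = representation_report(chat_logs, "region", ["US", "EU", "APAC"])
print(shares)   # {'US': 0.8, 'EU': 0.2, 'APAC': 0.0}
print(missing)  # ['APAC']
```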

Step 2: The “Counterfactual” Test

Before launching, run tests where you change only one variable (like gender, location, or name) and see if the AI’s output changes drastically.

- If changing a name from “John” to “Priya” changes the AI’s tone from professional to helpful-but-condescending, you have a bias problem in your prompt or model.

- Mitigation: Adjust your system instructions (system prompts) to explicitly forbid these biases. For example: “Maintain a neutral, professional executive tone regardless of user demographics.”
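The counterfactual test can be automated once you have more than a handful of cases. The sketch below runs the same prompt with only the name swapped and flags outputs that diverge from the first (baseline) name. `fake_generate` is a hypothetical stand-in for your real AI call, deliberately biased so the check has something to catch. Character-level similarity via Python’s `difflib` is a crude proxy for tone, and the 0.8 threshold is an arbitrary assumption; real model outputs also vary between runs, so compare several runs per name and have a person read anything flagged.

```python
from difflib import SequenceMatcher


def counterfactual_check(template, names, generate, threshold=0.8):
    """Run the same prompt with only the name changed and flag divergent outputs.

    `generate` is any function that takes a prompt and returns text; in
    practice it would call your AI provider. The first name is the baseline.
    """
    outputs = {}
    for name in names:
        text = generate(template.format(name=name))
        # Mask the name itself so it doesn't count as a difference.
        outputs[name] = text.replace(name, "<NAME>")
    baseline, *others = names
    flagged = []
    for name in others:
        similarity = SequenceMatcher(None, outputs[baseline], outputs[name]).ratio()
        if similarity < threshold:
            flagged.append((name, round(similarity, 2)))
    return flagged


def fake_generate(prompt):
    """Hypothetical stand-in for a real model call, biased on purpose for the demo."""
    if "Priya" in prompt:
        return "No worries! Let me walk you through this very slowly, step by step."
    name = prompt.rsplit("from ", 1)[-1].rstrip(".")
    return f"Thank you, {name}. Your refund request has been received and is under review."


template = "Write a reply to a refund request from {name}."
print(counterfactual_check(template, ["John", "Maria", "Priya"], fake_generate))
```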

Step 3: Human-in-the-Loop for High Stakes

Never let a no-code automation make a high-stakes decision (hiring, firing, lending, medical) without a human review step.

You can implement this with workflow automation tools that pause a sequence for manual approval. Design the workflow so the AI assigns a “score” or writes a “draft,” but a human must click the final “Send” or “Approve” action.
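In a no-code tool this is usually an “approval” or “wait for input” step. The same logic, sketched in code: the AI fills in a score and a draft, high-stakes categories stay parked in a pending state, and the final send action refuses to run until a named person has approved. The status values, field names, and category list are hypothetical, chosen to mirror the examples above.

```python
from dataclasses import dataclass
from typing import Optional

# Categories that must never be finalized without a person.
HIGH_STAKES = {"hiring", "firing", "lending", "medical"}


@dataclass
class Decision:
    subject_id: str
    category: str
    ai_score: float
    ai_draft: str
    status: str = "pending_review"
    reviewer: Optional[str] = None


def route(decision):
    """Let low-stakes items through; park high-stakes ones for a human."""
    if decision.category not in HIGH_STAKES:
        decision.status = "auto_approved"
    return decision


def approve(decision, reviewer):
    """Record the human who clicked 'Approve'."""
    if not reviewer:
        raise ValueError("A named human reviewer is required")
    decision.status = "approved"
    decision.reviewer = reviewer
    return decision


def send(decision):
    """The final action refuses to run until the decision has been approved."""
    if decision.status not in ("approved", "auto_approved"):
        raise PermissionError(f"{decision.subject_id} is still awaiting human review")
    return f"Sent: {decision.ai_draft}"


loan = route(Decision("applicant-17", "lending", 0.42, "We are unable to approve..."))
print(loan.status)  # pending_review
approve(loan, reviewer="dana@yourcompany.example")
print(send(loan))   # Sent: We are unable to approve...
```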

Data Privacy and Security: The Boring Stuff That Saves You

You might think, “I’m using a no-code tool, so they handle security, right?” Wrong.

The platform handles infrastructure security. You handle data logic security. Regulations like GDPR and CCPA apply to you, the data controller.

Understanding Your Obligations

- Data Minimization: Don’t collect data you don’t need. If you don’t need a user’s phone number for the AI to work, don’t ask for it.

- Right to be Forgotten: If a user asks you to delete their data, can you? In many no-code setups, data is scattered across Airtable, Zapier, and OpenAI. You need a central map of where user data lives so you can delete it if asked.
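The “central map” can start as a simple table that lists each system, what personal data it holds, and how to remove it. The sketch below turns such a map into a checklist for one deletion request. The three entries are illustrative placeholders, not verified deletion procedures for those services; check each vendor’s own documentation for how deletion and data retention actually work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataLocation:
    system: str         # where the data lives
    contents: str       # what personal data is stored there
    deletion_step: str  # how you remove it (manual step or automation)


# Illustrative entries only; replace with your own systems and procedures.
DATA_MAP = [
    DataLocation("Airtable", "Users table: name, email", "Delete the row matching the email"),
    DataLocation("Zapier", "Task history with form payloads",
                 "Remove task-history entries that contain the user's data"),
    DataLocation("OpenAI", "Prompts sent via the API", "Check the provider's data-retention terms"),
]


def deletion_checklist(user_email, data_map=DATA_MAP):
    """Turn the data map into a to-do list for a single deletion request."""
    return [
        f"[ ] {loc.system}: {loc.deletion_step} ({loc.contents}) for {user_email}"
        for loc in data_map
    ]


for line in deletion_checklist("ana@example.com"):
    print(line)
```

Keeping the map in one place means a new tool in your stack only becomes “done” once it has a row here.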

Anonymization is Key

Before you send data to an AI API (like OpenAI via Make or Zapier), strip the Personally Identifiable Information (PII).

- Action: Use a no-code formatter step to replace names with IDs (e.g., “User 1234”) before sending the text to the AI for analysis. Re-attach the name only after the data comes back. This keeps your customers’ real names out of the text the AI provider receives.
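Here is what that formatter step does, written out as a minimal sketch. It assumes you already know the customer’s name from your own records (the `known_names` list) and uses a rough email regex; it will not catch free-text PII such as street addresses or phone numbers, which need more patterns or a dedicated PII-detection tool. The token format (`[PERSON_1]`, `[EMAIL_1]`) is an arbitrary choice; the closing bracket keeps `[PERSON_1]` from matching inside `[PERSON_10]` when re-attaching.

```python
import re

# Rough email pattern; it will not match every valid address.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def pseudonymize(text, known_names):
    """Swap emails and known customer names for placeholder tokens.

    Returns the cleaned text plus a token -> original mapping that stays in
    your system. Emails are replaced first so a name inside an address
    isn't partially replaced.
    """
    mapping = {}
    # Longest first, so one address that contains another isn't split.
    for email in sorted(set(EMAIL_RE.findall(text)), key=len, reverse=True):
        token = f"[EMAIL_{len(mapping) + 1}]"
        mapping[token] = email
        text = text.replace(email, token)
    for name in known_names:
        pattern = re.compile(rf"\b{re.escape(name)}\b")
        if pattern.search(text):
            token = f"[PERSON_{len(mapping) + 1}]"
            mapping[token] = name
            text = pattern.sub(token, text)
    return text, mapping


def reidentify(text, mapping):
    """Put the real values back after the AI response returns."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


safe, mapping = pseudonymize("Maria Lopez (maria@example.com) says the invoice is wrong.", ["Maria Lopez"])
print(safe)  # [PERSON_2] ([EMAIL_1]) says the invoice is wrong.
# Pretend this came back from the AI provider:
print(reidentify("Summary: [PERSON_2] disputes the invoice.", mapping))
```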

Navigating this legal landscape can be tricky for non-lawyers, so consult a dedicated guide to GDPR compliance in no-code tools to make sure you aren’t accidentally breaking the law while building your app.

Transparency: How to Build Trust

Transparency is the difference between a “creepy” app and a “magical” app.

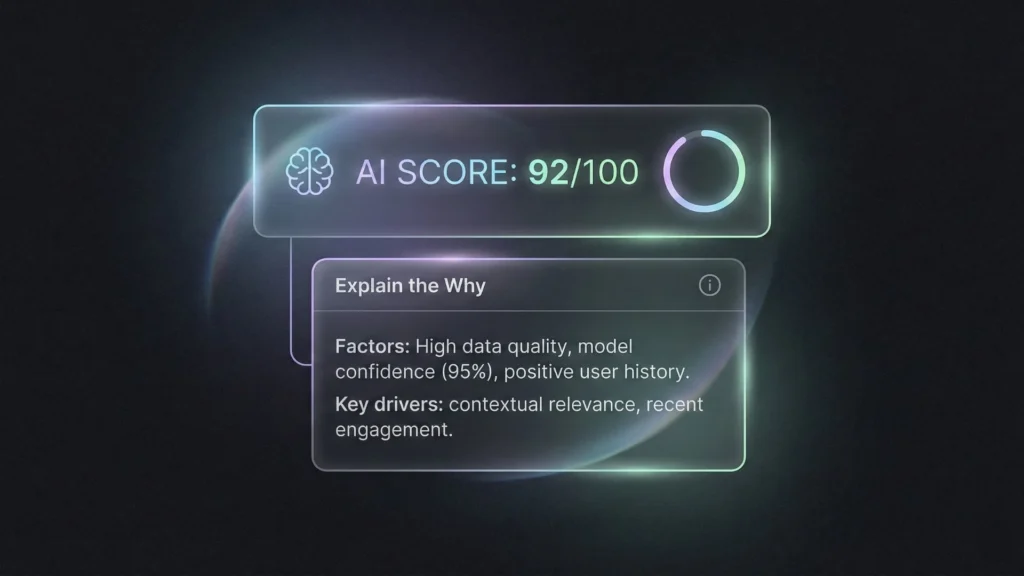

Explainability

Users trust systems they understand.

- Bad UX: App shows a score of 45/100.

- Good UX: App shows a score of 45/100. “We scored this low because the document was missing a clear introduction and the tone was inconsistent.”

- How to do it: Ask the AI to generate the reasoning alongside the result, and display that reasoning to the user.
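One way to do this is to ask for structured output and refuse to display a score that arrives without a reason. This sketch assumes you append instructions like `SCORING_INSTRUCTIONS` (hypothetical wording) to your prompt and that the model replies with JSON. Keep in mind that the generated reasoning is the model’s own explanation of its answer and may not faithfully reflect how the score was produced, so spot-check it as part of your regular review.

```python
import json

# Hypothetical wording appended to your prompt so score and reasoning arrive together.
SCORING_INSTRUCTIONS = (
    "Score the document from 0 to 100. Respond only with JSON like "
    '{"score": 45, "reasoning": "one or two sentences explaining the score"}.'
)


def parse_scored_response(raw):
    """Validate the model's JSON so you never show a score without a reason."""
    data = json.loads(raw)
    score = data.get("score")
    reasoning = str(data.get("reasoning", "")).strip()
    # bool is a subclass of int in Python, so rule it out explicitly.
    if isinstance(score, bool) or not isinstance(score, int) or not 0 <= score <= 100:
        raise ValueError(f"Invalid score: {score!r}")
    if not reasoning:
        raise ValueError("Model returned a score without reasoning")
    return score, reasoning


def render(score, reasoning):
    return f"Score: {score}/100\nWhy we scored it this way: {reasoning}"


raw = ('{"score": 45, "reasoning": "The document was missing a clear introduction '
       'and the tone was inconsistent."}')
print(render(*parse_scored_response(raw)))
```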

Communicate Limitations

AI is probabilistic: it plays the odds. It is not a calculator.

- Be Honest: Put a disclaimer near your AI features: “This content is AI-generated and may contain errors. Please verify important details.”

- This doesn’t scare users away; it makes them trust you more because you aren’t over-promising.

This is especially critical in customer service. If you are weighing an AI chatbot against human support, the ethical choice is often a hybrid: the bot explicitly identifies itself as AI and offers an easy off-ramp to a human agent.
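The hybrid approach needs two behaviors: disclose on the first turn, and hand off whenever the user asks for a person. A minimal sketch follows; `bot_reply` stands in for whatever produces your AI answer, and the handoff keywords are a hypothetical starting list. Where a “human” route actually goes (a helpdesk ticket, a live-chat queue) depends on your stack.

```python
import re

DISCLOSURE = 'You are chatting with an AI assistant. Type "human" at any time to reach a person.'
# Hypothetical trigger phrases; extend with what your users actually type.
HANDOFF_RE = re.compile(r"\b(human|agent|real person|representative)\b", re.IGNORECASE)


def handle_message(message, is_first_message, bot_reply):
    """Route to a person on request; otherwise answer and disclose AI on the first turn."""
    if HANDOFF_RE.search(message):
        return {"route": "human", "reply": "Connecting you with a member of our team now."}
    reply = bot_reply(message)
    if is_first_message:
        reply = f"{DISCLOSURE}\n\n{reply}"
    return {"route": "bot", "reply": reply}


print(handle_message("Where is my order?", True, lambda m: "It shipped yesterday."))
print(handle_message("Can I talk to a real person?", False, lambda m: ""))
```

Checking for the handoff request before calling the model means a frustrated user never has to argue with the bot to reach someone.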

Responsible AI Practices for Your Team

Even if you are a team of one, you need a protocol.

1. Define Boundaries Early

Write down what your AI will not do.

- “We will not use AI to generate legal contracts without lawyer review.”

- “We will not use AI to mimic specific public figures.”

Having these “Thou Shalt Nots” written down makes decision-making easier later.

2. Version Control for Prompts

Treat your prompts like code. If you change the system prompt to make the AI “more creative,” and suddenly it starts hallucinating facts, you need to be able to roll back to the previous version. Keep a log of your prompt changes.
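A plain text file in git already gives you this. If your prompts live inside an app instead, the idea reduces to an append-only history with one active version. The `PromptLog` class below is a hypothetical sketch, not a feature of any particular platform: every change is recorded with a note explaining why, and a rollback re-activates an old version without erasing the newer ones.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class PromptVersion:
    number: int
    text: str
    note: str  # why you changed it
    created_at: datetime


class PromptLog:
    """Append-only history of a system prompt with one active version."""

    def __init__(self):
        self.versions = []
        self.active = None

    def commit(self, text, note):
        version = PromptVersion(len(self.versions) + 1, text, note, datetime.now(timezone.utc))
        self.versions.append(version)
        self.active = version
        return version

    def rollback(self, number):
        """Re-activate an earlier version without deleting later ones."""
        for version in self.versions:
            if version.number == number:
                self.active = version
                return version
        raise KeyError(f"No prompt version {number}")


log = PromptLog()
log.commit("Answer factually. Say 'I don't know' when unsure.", "initial")
log.commit("Be more creative and playful!", "marketing asked for more flair")
log.rollback(1)  # version 2 started inventing facts
print(log.active.number, log.active.text)
```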

3. Feedback Loops

Build a “Thumbs Up / Thumbs Down” mechanism into your user interface. If a user flags an output as bad, that data should go straight to you. This is your early warning system for ethical drift.
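Turning votes into an early warning only takes a rolling count. The sketch below keeps the most recent votes, computes the share of thumbs-down, and signals an alert when that share crosses a threshold. The window size, 20% alert rate, and minimum vote count are arbitrary assumptions to tune for your traffic; the same check is also the kind of automated monitoring that keeps working once manual review stops scaling.

```python
from collections import deque


class FeedbackMonitor:
    """Track recent thumbs-up/down votes and raise an alert when negatives spike."""

    def __init__(self, window=100, alert_rate=0.2, min_votes=20):
        self.votes = deque(maxlen=window)  # oldest votes fall off automatically
        self.alert_rate = alert_rate
        self.min_votes = min_votes

    def record(self, output_id, thumbs_up):
        self.votes.append((output_id, thumbs_up))

    def negative_rate(self):
        if not self.votes:
            return 0.0
        return sum(1 for _, up in self.votes if not up) / len(self.votes)

    def should_alert(self):
        # Require a minimum number of votes so one early thumbs-down doesn't page you.
        return len(self.votes) >= self.min_votes and self.negative_rate() > self.alert_rate

    def flagged_outputs(self):
        """IDs of outputs users disliked, for manual review."""
        return [output_id for output_id, up in self.votes if not up]


monitor = FeedbackMonitor(window=50, alert_rate=0.2, min_votes=10)
for i in range(12):
    monitor.record(f"out-{i}", thumbs_up=(i % 3 != 0))  # every third output is disliked
print(monitor.negative_rate(), monitor.should_alert(), monitor.flagged_outputs())
```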

If your app succeeds, you will eventually face the challenge of growth. As you scale a no-code AI app to production, your ethical protocols must scale with it. A manual review process that works for 10 users will break at 10,000, so plan for automated monitoring early.

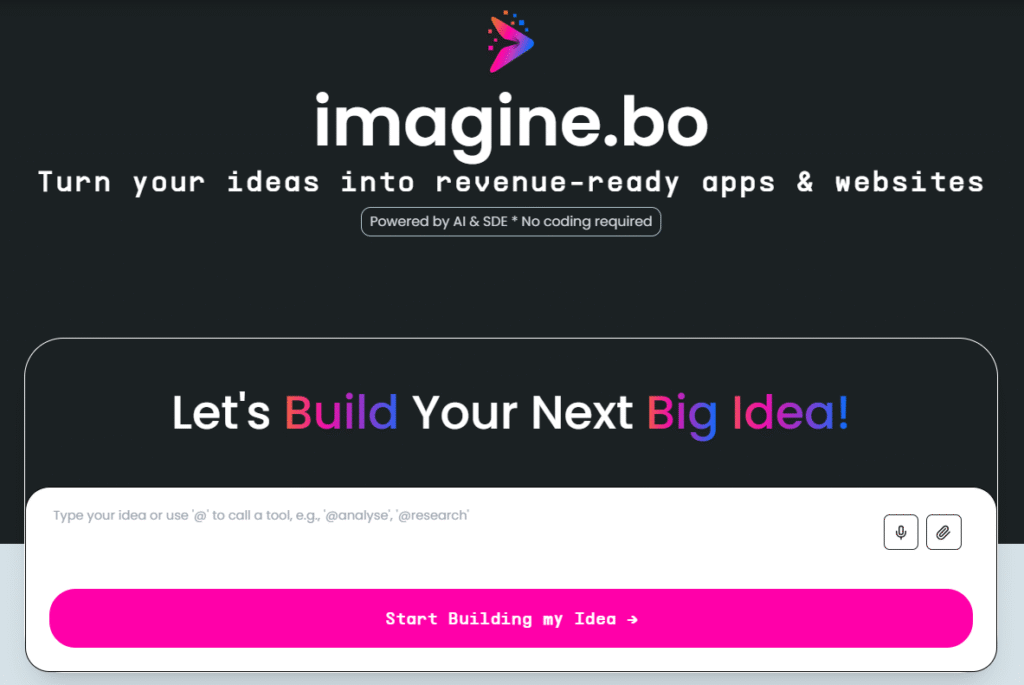

The Role of the Platform: Why Imagine.bo Matters

This brings us to a critical point: The tools you choose dictate the ethics you can enforce.

In the early days of no-code, we hacked things together using fragile connections between disparate apps. That fragmentation made governance a nightmare. You couldn’t guarantee security when data was bouncing between five different cheap tools.

This is where modern, production-grade platforms like Imagine.bo are shifting the paradigm.

Imagine.bo isn’t just about “building without code”; it’s about building responsibly with architecture. It approaches no-code development with the standards usually reserved for high-end engineering teams.

Why Imagine.bo Fits the Ethical Builder

- Unified Architecture: Instead of stitching together random APIs, Imagine.bo handles backend logic, data flows, and AI integration within a single, secure environment. This reduces the “surface area” for data leaks.

- Audit-Ready Infrastructure: When you build on Imagine.bo, you aren’t just scripting; you are building on a structure that supports logging and traceability. If you need to know why a decision was made or where data went, the platform supports that visibility.

- Security First: Because Imagine.bo handles data security and user roles natively, founders can focus on the product without worrying that they accidentally left a database port open to the public.

Choosing Imagine.bo goes beyond comparing feature lists; it’s about choosing a partner that handles the heavy lifting of security and compliance so you can focus on ethical creativity. You can start building your secure app on Imagine.bo today to see how built-in governance makes ethical development effortless.

When ethical considerations like security protocols and data structuring are embedded into the platform itself rather than bolted on later, no-code becomes safer. It allows you to move at the speed of AI, but with the safety belt of enterprise engineering.

Case Studies: The Good, The Bad, and The Ugly

To make this real, let’s look at two hypothetical scenarios.

The Failure: The “Blind” Hiring Bot

- The Setup: A startup builds a no-code workflow to scan PDF resumes and rank candidates. They use a standard GPT model and prompt it to “Find the best candidates.”

- The Mistake: They didn’t realize the model has a bias toward “prestige” universities because of its training data. It starts down-ranking incredible candidates from state schools or bootcamps.

- The Fallout: Six months later, the company realizes that its hiring pipeline has become completely homogeneous. It faces reputational damage and has to scrap the system.

- The Lesson: Lack of testing and “blind trust” in the model’s definition of “best” caused real harm.

The Success: The “Transparent” Interior Design Helper

- The Setup: An interior design firm (perhaps utilizing a customized AI workflow) builds a tool that suggests furniture layouts based on user photos.

- The Ethical Design: They know AI hallucinates measurements. So, they build the UI to explicitly say: “These are creative suggestions, not construction blueprints. Please measure before buying.”

- The Data: They ensure the training images include homes of all sizes, not just mansions, so the tool is useful for apartment dwellers too.

- The Result: Users love the tool because it manages expectations. They use it for inspiration (its intended purpose) rather than accuracy (its weakness).

- The Lesson: Clear communication and understanding limitations builds trust.

The Future of Ethical AI in No-Code

We are moving past the “Wild West” era of no-code AI. The next phase is about maturity.

We can expect greater regulatory attention on no-code apps. If you build tools that affect people’s lives, you will eventually face the same scrutiny as traditional software companies. But this isn’t something to fear; it’s an opportunity.

Platforms that bake ethics into their defaults, like Imagine.bo, will lead this next wave. We will likely see more “fairness tools” built right into no-code editors, flagging potential bias before you even hit publish.

Final Thoughts

No-code AI is not inherently ethical or unethical. It is a mirror. It reflects the choices of the people who use it and the platforms that shape it.

If you are a builder, you have a choice. You can build fast and break things (including user trust), or you can build intentionally. By understanding the risks, designing for transparency, and choosing robust tools that prioritize structure and security, you can build products that are not only innovative but sustainable.

Ethical AI isn’t a blocker to your speed. It’s the only way to make sure that what you build actually lasts.
