Building and modernizing AI applications is no longer just a technical upgrade. It is a strategic decision that shapes how products scale, adapt, and stay competitive. This guide breaks down how building AI applications are designed today, how legacy systems can be modernized without starting from scratch, and what truly matters when moving from experimentation to real-world deployment. You will learn practical approaches to architecture, workflows, scalability, and security, with a clear focus on building systems that are reliable, flexible, and ready for growth rather than just impressive demos.

Understanding the Modern AI Application Landscape

Key Strategies for Building AI Applications

The first step in building or modernizing applications is understanding how the current era of intelligent software differs from traditional development.

Launch Your App Today

Ready to launch? Skip the tech stress. Describe, Build, Launch in three simple steps.

BuildDefining Modern AI Applications vs. Traditional Software

Traditional software development focused on migrating static business processes to digital systems, often resulting in monolithic architectures. These systems perform predefined, task-centric work.

Modern AI applications are fundamentally different. They are defined by their ability to integrate intelligence, scale rapidly, and adapt autonomously.

| Feature | Traditional Software (Legacy) | Modern AI Applications (AI-First) |

| Architecture | Monolithic, tightly coupled, and difficult to update | Cloud-native, microservices-based, enabling independent scaling |

| Core Function | Executing repetitive, rule-based tasks | Driving intelligent automation, personalization, and predictive decision-making |

| Data Reliance | Data silos, batch processing, limited real-time insights | Unified, cloud-based data platforms supporting real-time data flows |

| Key Enabler | Manual processes and structured rules | Agentic AI systems that interpret goals, make decisions, and adapt to feedback |

The shift is from digitalization to the era of intelligent automation, demanding speed, scalability, and consistent innovation.

The Dual Imperative: Why Modernization Is Required for AI

Many organizations are eager to embed AI, but their outdated IT landscapes pose significant barriers. Legacy systems often feel like anchors, holding businesses back due to hidden dependencies, outdated code, poor documentation, and high maintenance costs.

This creates a dual imperative for leaders:

- Use AI to modernize faster: AI significantly accelerates the difficult, manual tasks of transformation. Generative AI can accelerate timelines by 40% to 50% and cut technical debt–related costs by about 40%.

- Modernize to leverage AI effectively: Agentic AI cannot thrive on legacy foundations. Modernizing to flexible, cloud-ready architectures is essential to support real-time, AI-driven workflows.

By moving critical systems into modern, scalable environments, organizations address mounting technical debt and position themselves for long-term growth and competitiveness.

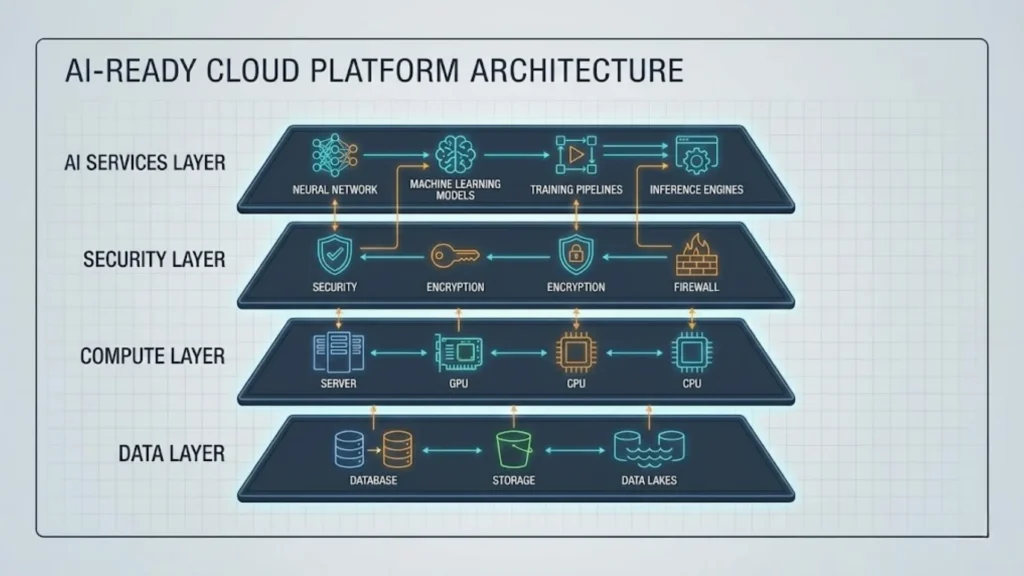

Architecture and Foundation: The AI-Ready Cloud Platform

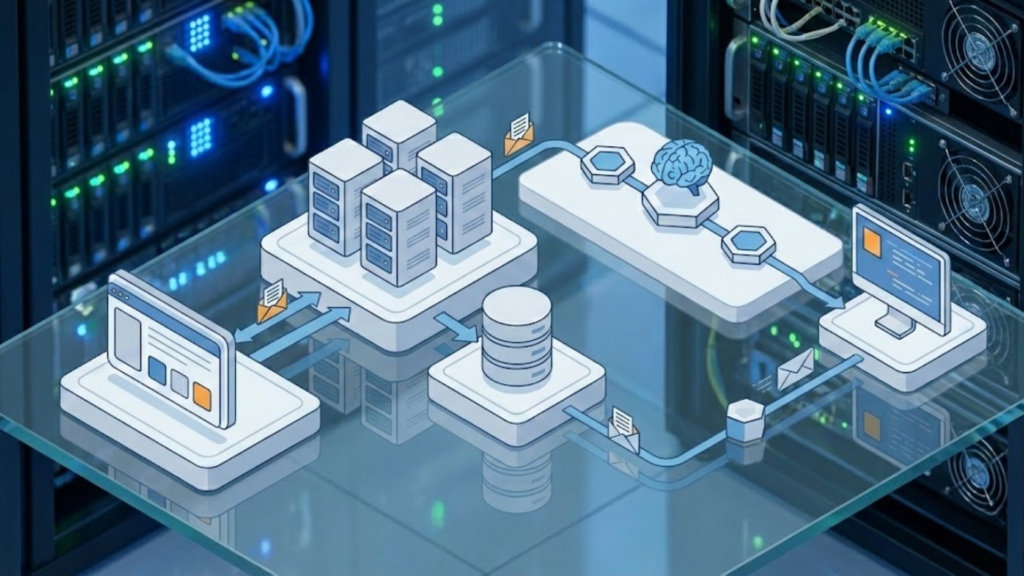

The foundation for any modern AI application must be built on cloud-native principles to achieve the necessary speed, scalability, and security. A modern, serverless SaaS architecture needs three core pillars: an agile architecture, unified data infrastructure, and robust cloud deployment.

Embracing Cloud-Native Architecture

Modern applications rely on architectures that support rapid integration and adaptable behavior envelopes.

- Microservices and Containers: By leveraging microservices and containers, organizations break free from the constraints of monolithic systems. This allows each part of the system to be updated, replaced, or scaled independently without affecting the others.

- Agility and Speed: A microservices architecture enables agility and flexibility, allowing new features (like an LLM-powered recommendation engine) to be deployed faster and at a lower cost. Implementing DevOps and automated CI/CD pipelines further accelerates time-to-market.

Modernizing Data Workloads and Pipelines for Insight

Data is the crucial building block that drives the reliability of AI systems. Legacy data systems often hold data in silos or older databases, preventing AI tools from accessing necessary information at the required speed and scale.

Modernizing data workloads involves:

- Migrating to Cloud-Based Data Platforms: This ensures data is accessible, secure, and ready for advanced data visualization and analytics.

- Unifying Data: Establishing a secure, open foundation for enterprise AI allows for a unified view of customer data and ensures data is easily accessible to feed AI models.

- Governance: Investing in high-quality, well-governed data is fundamental for practical model training and trustworthy insights.

Deploying on a Secure, Agile Cloud Foundation

A secure, scalable cloud environment is non-negotiable for AI-powered growth.

- Scalability and Cost Efficiency: Migrating core workloads to a scalable cloud foundation reduces cost and complexity. Studies show significant ROI when services are moved to modern cloud infrastructure.

- Security Built for the AI Era: Security must be embedded at every stage of modernization. As AI adoption grows, new risks like prompt injection require proactive governance. Read more about securing AI-generated web apps to stay ahead of these threats.

- Performance: Hybrid cloud environments and specific infrastructure solutions enhance integration and performance, optimizing IT operations.

The Modernization Framework: Building on Brownfield Systems

Modernizing a legacy application involves moving from a slow, manual, and risky process to a structured, AI-accelerated journey. Successful AI-powered modernization follows a staged, strategic approach.

Step 1: Architectural Discovery and Analysis (Conception)

The initial phase is discovery, where the current system is assessed to understand business vision, existing processes, and technical debt. Since many older systems lack detailed documentation, this is where AI provides immense value.

- Analyzing Legacy Code: AI agents rapidly scan existing codebases and data stores, extracting business requirements, surfacing hidden dependencies, and identifying improvement opportunities.

- Mapping Dependencies: AI algorithms combine static analysis with dynamic runtime insights to provide detailed views of the operational behavior. This is crucial for identifying architectural drift before changes are made.

- Proposing Roadmaps: Based on the analysis, AI can propose a modernization roadmap and architectural scaffolding for the next-gen system. You can even use these tools to validate startup ideas before fully committing to the migration.

Step 2: AI-Augmented Refactoring and Code Translation

This phase focuses on lean, incremental development, often using patterns like Strangler to gradually replace legacy systems. AI tools radically accelerate the execution of this work.

- Automated Code Translation and Generation: Generative AI can automatically rewrite legacy code from older languages like COBOL or Visual Basic into modern tech stacks.

- Context-Aware Refactoring: Generic AI coding tools excel at writing code snippets but often lack the architectural context needed for complex brownfield environments. This differs from risky vibe coding, as advanced systems provide architectural intelligence, supplying AI assistants with precise, contextual prompts about which dependencies must be preserved.

- Automated Testing: AI automates quality assurance by generating regression and end-to-end test cases, increasing test coverage while dramatically reducing manual effort.

Step 3: Continuous Evolution and Strategic Decision Making

Modernization is an ongoing process, not a destination.

- Performance Monitoring: AI algorithms analyze system behaviors to identify bottlenecks, boost performance, and uncover fixes for critical bugs.

- Strategic Prioritization: AI helps human experts by identifying high-risk areas and providing data-driven recommendations on how to prioritize complex transformations.

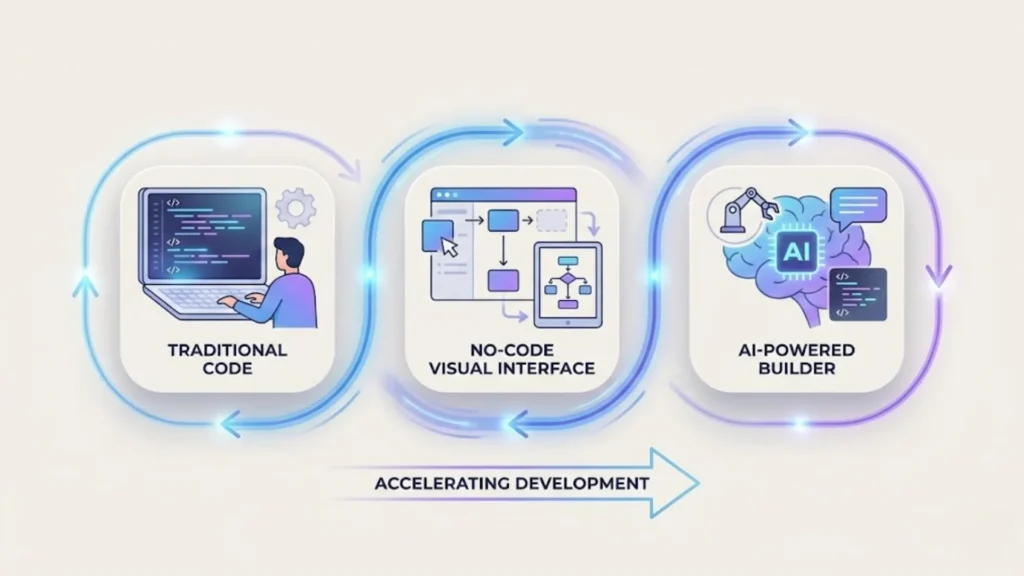

Accelerating Development: Traditional, No-Code, and AI-Powered Builders

For founders and product managers, choosing the right development approach is crucial for maximizing speed and minimizing costs.

The Limits of Manual Development and Generic AI Tools

Traditionally, software development was a slow, resource-heavy process requiring deep technical expertise. While generic AI coding tools (like large language models) have boosted productivity, they are not a complete solution for complex application creation or modernization.

A critical pitfall in brownfield modernization is that generic AI tools often operate without architectural context. They might optimize a single component but inadvertently destabilize the broader system.

The Rise of AI-First, No-Code Application Builders

The most current evolution in the software development lifecycle (SDLC) is the rise of platforms that fully automate the technical and architectural complexity that traditionally stalled projects. These AI app builders provide the architectural intelligence necessary to build or modernize scalable AI applications without deep coding expertise.

Imagine.bo is an example of a modern AI no-code app builder designed specifically for this new paradigm. It elevates the role of the product manager or builder from technician to conductor by handling complexity autonomously.

With a platform like Imagine.bo, users can define and deploy complex, scalable AI applications by:

- Describing application requirements in plain English: Users articulate their needs and business logic in natural language. You can build an app by describing it, automating the requirement analysis phase.

- Automatically generating architecture: The platform leverages AI to propose and generate the underlying microservices architecture and data pipelines.

- Customizing visually: Teams can refine and customize the generated application interface using visual tools.

- Deploying securely: The generated applications are deployed securely onto robust cloud environments.

- Relying on real human support: Recognizing that AI is a partner, not a replacement, the platform ensures that human experts can step in to manage complex, domain-specific challenges.

Addressing Real-World Constraints, Security, and Compliance

While AI accelerates development, success requires navigating real-world constraints, particularly concerning security, compliance, and the human element.

Security and Compliance in AI Applications

In regulated industries, compliance with standards such as HIPAA or SOX is non-negotiable.

- Compliance by Design: Compliance knowledge must be built into the project from the beginning. Learn more about compliance and protection to ensure your AI apps meet industry standards.

- Protecting Sensitive Data: Solutions handling sensitive data require strong protection mechanisms against leaks and misuse.

Performance and Scalability: The New ROI Equation

The modern ROI of application development values time, talent, and transformation equally.

- Scalable Productivity: Modern architecture and AI-augmented tools allow teams to achieve scalable productivity without increasing headcount.

- Faster Execution: AI-assisted code generation and intelligent testing drastically shorten the lead time from conception to solution delivery, reducing project costs.

The Human in the Loop

Despite the power of AI, human involvement remains vital. Humans must review the accuracy of AI-generated code for business-critical functions and manage the nuances of organizational challenges. Successfully adopting AI requires fostering a culture of experimentation and continuous learning.

Conclusion

The complexity of migrating legacy systems often feels like trying to repair a running airplane mid-flight while reading blueprints written in a forgotten language. AI-powered modernization provides the equivalent of a digital partner that can instantly analyze the plane’s every part, generate new, functional components in real-time, and ensure those components integrate perfectly, allowing the human crew to focus solely on setting the new flight path.

By adopting AI-powered platforms and focusing on architectural integrity, organizations can transform their legacy systems into platforms ready for continuous innovation and sustainable business growth.

Launch Your App Today

Ready to launch? Skip the tech stress. Describe, Build, Launch in three simple steps.

Build