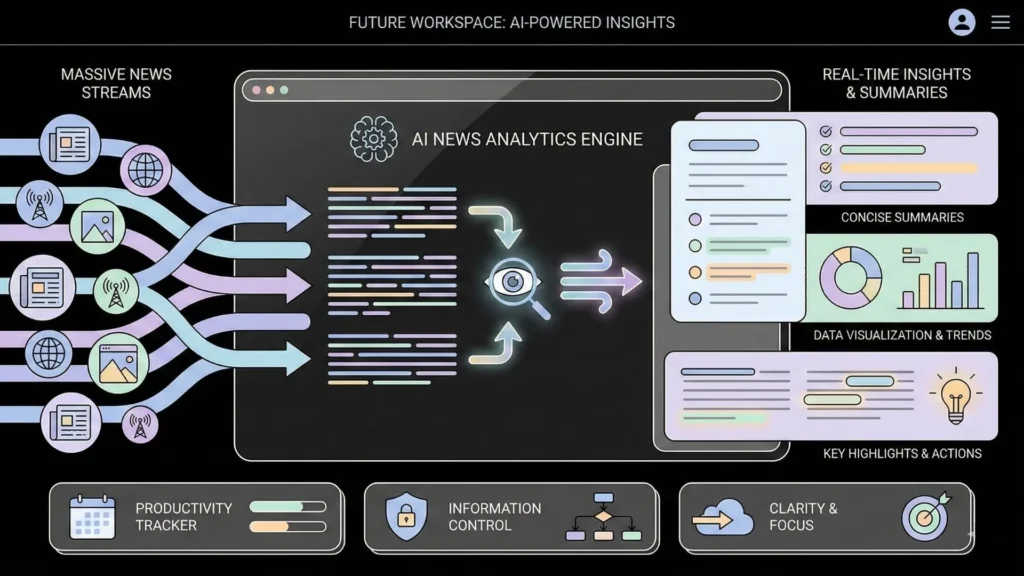

In our data-driven era, information overload is a major bottleneck for productivity. To stay ahead, you need to turn overwhelming feeds into actionable insights. This no-code tutorial walks you through building your own news summarizer, one that automates the extraction of “gold” from thousands of daily articles. By leveraging Imagine.bo’s SDE-level reasoning, you can move from a simple vision to a revenue-ready, high-performance application in days, bypassing traditional technical hurdles and reclaiming your most valuable asset: time.

Understanding the Power of AI-Driven News Summarization

Before we dive into the “how,” let’s look at the “why.” Automated news summarization isn’t just a convenience; it’s a competitive advantage.

The Benefits of Automation

In our experience, the most significant advantage of AI summarization is efficiency. Imagine a journalist or a market analyst who usually spends four hours a day reading. AI can condense those four hours into fifteen minutes of high-value reading. This frees up human talent for what they do best: deep analysis, creative strategy, and investigative reporting.

Objectivity and Accuracy

Humans are brilliant, but we are also biased. Even the best human summarizers can accidentally leave out crucial facts or inject their own perspective. A well-trained AI model focuses on extracting the most salient information. It reduces subjective interpretation, which helps the “essence” of the news remain intact. This is vital for maintaining credibility in news dissemination.

Democratizing Access

Lengthy, jargon-heavy articles can be a barrier to entry for many. By condensing complex topics into manageable summaries, AI makes information accessible to people with limited time or those who find dense content challenging to navigate. It fosters a more informed society where everyone can participate in public discourse.

Why No-Code is the Perfect Starting Point

The traditional path to building an AI app used to look like this: learn Python, master Machine Learning (ML), understand Natural Language Processing (NLP), and manage complex cloud servers. For most founders, that’s a year of learning before even building a prototype.

Breaking the Technical Barrier

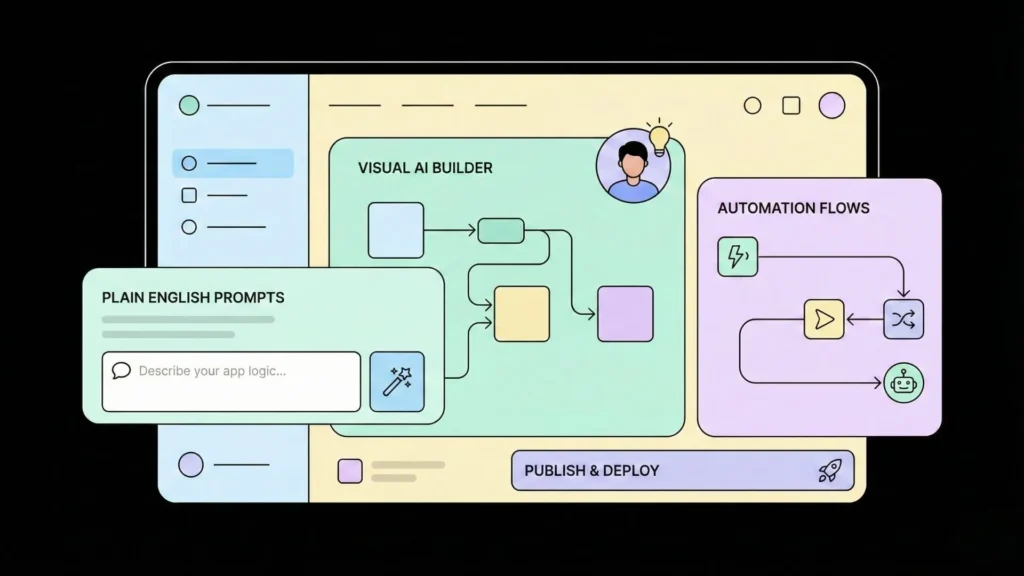

No-code platforms have changed the game. They abstract away the “scary” parts of development. Instead of wrestling with syntax and semicolons, you interact with intuitive interfaces.

However, there is a common misconception that “no-code” means “low power.” That couldn’t be further from the truth, especially with a platform like Imagine.bo. While traditional no-code tools might just “glue” different apps together, Imagine.bo uses AI reasoning to build apps to SDE-level (Software Development Engineer) standards. This means the backend is clean, the architecture is scalable, and the product is ready for real-world traffic from day one.

Immediate Gratification and Iteration

The biggest enemy of a new project is lost momentum. When you can build a functional summarizer in a few hours rather than weeks, your enthusiasm stays high. You can test your idea, get feedback, and refine it instantly. This “speed to market” is why many entrepreneurs are now choosing AI-first, no-code workflows.

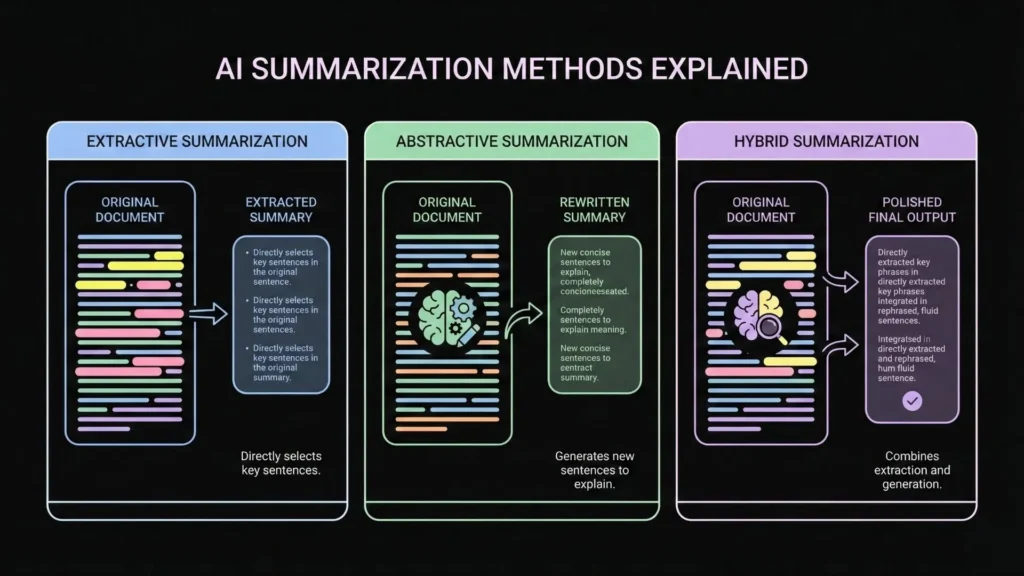

Exploring AI Summarization Techniques (The Simple Version)

To build a great tool, you need to understand the “brain” behind it. There are three main ways AI summarizes text:

A. Extractive Summarization

Think of this as a “highlighter.” The AI identifies the most important sentences already present in the text and pulls them out.

- Pros: It’s fast and factually grounded (it only uses words already there).

- Cons: It can feel a bit “choppy” because it lacks a flow between the pulled sentences.
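To make the “highlighter” idea concrete, here is a minimal sketch of extractive summarization in Python. It assumes a simple word-frequency score and a naive sentence splitter; the function names and the tiny stop-word list are illustrative choices, not how Imagine.bo or any particular model works internally.

```python
import re
from collections import Counter

# A tiny stop-word list for illustration; a real system would use a fuller one.
STOP_WORDS = {
    "the", "a", "an", "and", "or", "of", "to", "in", "on", "is", "are",
    "was", "it", "that", "this", "for", "with", "as", "by", "at",
}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(text: str) -> list[str]:
    """Lowercase words with stop words removed."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def extractive_summary(text: str, max_sentences: int = 2) -> list[str]:
    """Return the highest-scoring sentences, kept in their original order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        # Average frequency, so long sentences are not favoured automatically.
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return [sentences[i] for i in keep]


article = (
    "Solar power grew fast this year. Analysts say solar power costs fell again. "
    "The weather was mild. Solar power now leads new energy projects."
)
summary = extractive_summary(article, max_sentences=2)
```

Because every returned sentence is copied verbatim from the article, the result cannot introduce new facts, which is exactly the trade-off described above: grounded, but sometimes choppy.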

B. Abstractive Summarization

This is more like a “human writer.” The AI reads the whole article, understands the context, and writes a completely new, concise summary in its own words.

- Pros: The summaries are fluent, professional, and easy to read.

- Cons: If the model isn’t high-quality, it might “hallucinate” (make up facts). This is why using a robust system is critical.

C. The Hybrid Approach

This is often the “sweet spot.” The system extracts the core facts first and then uses an abstractive model to polish and rephrase them. This ensures the summary is both accurate and engaging.
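A rough sketch of the hybrid flow might look like the following. The extractive stage picks the key sentences, and the abstractive stage is represented by a `rewrite` callable that you would back with whatever language model you use. Everything here (`top_sentences`, `build_rewrite_prompt`, `hybrid_summary`, the prompt wording) is a hypothetical illustration, not a specific platform API.

```python
import re
from collections import Counter
from typing import Callable


def top_sentences(text: str, k: int = 2) -> list[str]:
    """Extractive stage: keep the k sentences richest in frequent words."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    # Crude filter: only count words of four or more letters.
    freq = Counter(re.findall(r"[a-z]{4,}", text.lower()))

    def score(sentence: str) -> float:
        tokens = re.findall(r"[a-z]{4,}", sentence.lower())
        return sum(freq[t] for t in tokens) / len(tokens) if tokens else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:k])]


def build_rewrite_prompt(facts: list[str], max_words: int) -> str:
    """Abstractive stage input: ask the model to rephrase only the extracted facts."""
    bullet_list = "\n".join(f"- {fact}" for fact in facts)
    return (
        f"Rewrite the facts below as one fluent summary of at most {max_words} words. "
        "Use only these facts and add nothing new.\n" + bullet_list
    )


def hybrid_summary(text: str, rewrite: Callable[[str], str], k: int = 2, max_words: int = 60) -> str:
    """Extract first, then hand the extracted facts to a rewriting model."""
    facts = top_sentences(text, k)
    return rewrite(build_rewrite_prompt(facts, max_words))


# Stand-in for a real model call, used here only to show the data flow.
captured_prompts: list[str] = []


def fake_rewrite(prompt: str) -> str:
    captured_prompts.append(prompt)
    return "ok"


text = (
    "Chip maker Acme reported record chip sales. It rained in the city. "
    "Acme expects chip demand to keep rising."
)
result = hybrid_summary(text, fake_rewrite)
```

Restricting the rewriting model to the extracted sentences is one way to limit hallucination: the model polishes wording but is never shown, or asked about, anything outside the selected facts.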

Choosing the Right Platform: Why Imagine.bo?

When you’re ready to build, you’ll find many tools. You could use simple automation tools to link an RSS feed to a text box, but if you want to build a real product, something you could potentially turn into a business, you need a more professional foundation.

This is where Imagine.bo shines. Unlike simple drag-and-drop builders, Imagine.bo is an AI-driven App Builder that thinks like an engineer.

What Makes Imagine.bo Different?

- AI Reasoning Engine: You don’t just “drag a button.” You describe your product idea in plain English. The AI understands the business logic, the user flow, and the technical requirements.

- SDE-Level Architecture: Most no-code tools produce “spaghetti code” that breaks when you get 1,000 users. Imagine.bo generates clean, secure, and scalable code that follows industry best practices.

- End-to-End Ownership: It handles everything: the frontend (what users see), the backend (the “brain”), the databases, and the deployment (hosting it on the web).

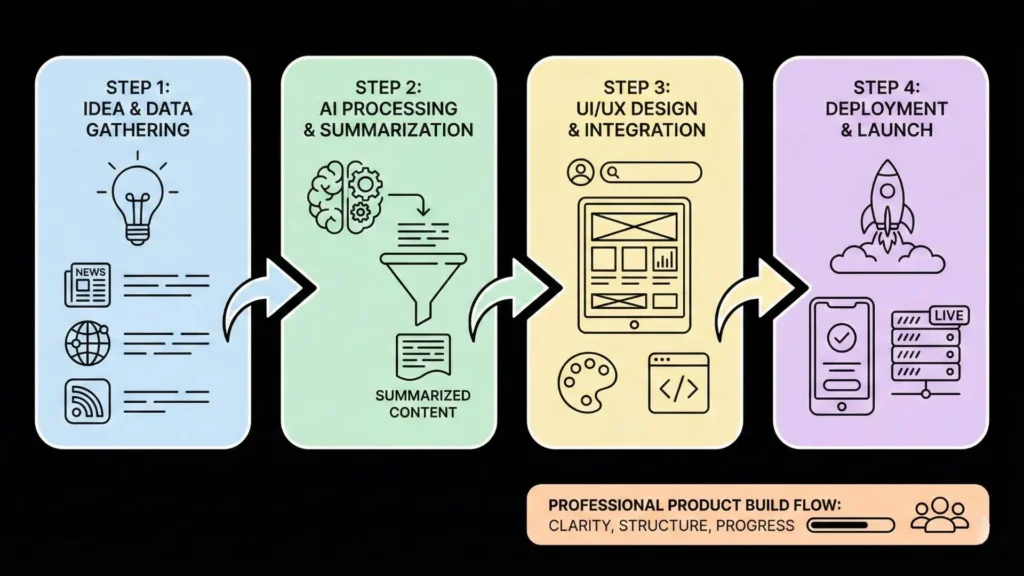

Step-by-Step Guide: Building Your AI News Summarizer

Ready to build? Let’s walk through the process using the professional workflow offered by Imagine.bo.

Step 1: Define Your Vision

Start by defining your vision and describing what your summarizer should do.

- Example: “I want to build a web app that pulls the top 5 tech news stories from TechCrunch every morning, summarizes them into 3 bullet points each, and sends a notification to my Slack channel.”

- The Imagine.bo Edge: The system takes this plain-English description and begins mapping out the data flows and tech stack for you.

Step 2: Connect Your Data Sources

Your summarizer needs “fuel,” which is news data.

- RSS Feeds: Many news sites and blogs (like The Verge or The Wall Street Journal) publish RSS feeds. They are simple to connect and provide structured updates.

- News APIs: For a more professional tool, you can connect to APIs like the Associated Press or Reuters.

- Imagine.bo Integration: The platform makes it easy to “plug in” these data sources without needing to write complex API authentication scripts.
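If you are curious what “connecting an RSS feed” involves under the hood, the sketch below parses an RSS 2.0 document with Python’s standard library. The sample feed, the `parse_rss` name, and the example.com links are made up for illustration; in practice you would download the XML from the publisher’s feed URL first.

```python
import xml.etree.ElementTree as ET

# A made-up RSS 2.0 feed standing in for a real download.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Example Tech News</title>
    <item>
      <title>First story</title>
      <link>https://example.com/1</link>
      <description>Short teaser one.</description>
    </item>
    <item>
      <title>Second story</title>
      <link>https://example.com/2</link>
      <description>Short teaser two.</description>
    </item>
    <item>
      <title>Third story</title>
      <link>https://example.com/3</link>
      <description>Short teaser three.</description>
    </item>
  </channel>
</rss>"""


def parse_rss(xml_text: str, limit: int = 5) -> list[dict]:
    """Return up to `limit` stories as dicts with title, link and description."""
    root = ET.fromstring(xml_text)
    stories = []
    for item in root.iter("item"):
        stories.append(
            {
                "title": (item.findtext("title") or "").strip(),
                "link": (item.findtext("link") or "").strip(),
                "description": (item.findtext("description") or "").strip(),
            }
        )
        if len(stories) == limit:
            break
    return stories


stories = parse_rss(SAMPLE_RSS, limit=2)
```

The `limit` argument mirrors the “top 5 stories” idea from Step 1: the feed may carry dozens of items, but the summarizer only needs the first few.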

Step 3: Configure the AI Summarization Logic

This is where you tell the AI how to behave.

- Do you want a 50-word summary or a 300-word deep dive?

- Do you want a “casual” tone or a “professional” one?

- Prompt Tuning: In traditional ML development, you’d adjust hyperparameters such as learning rates. In Imagine.bo, you refine the prompt instead, using prompt engineering techniques to control how it condenses the news.
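The length and tone choices above boil down to a handful of settings that get turned into instructions for the model. Here is a minimal sketch of that idea; `SummaryStyle`, `build_prompt`, and the prompt wording are assumptions for illustration rather than Imagine.bo’s actual configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SummaryStyle:
    """User-facing knobs for the summarizer (hypothetical names)."""
    max_words: int = 50
    tone: str = "professional"
    bullet_points: int = 0  # 0 means a single paragraph


def build_prompt(article: str, style: SummaryStyle) -> str:
    """Turn the style settings into plain-English instructions for a model."""
    if style.max_words <= 0:
        raise ValueError("max_words must be positive")
    shape = f"{style.bullet_points} bullet points" if style.bullet_points else "a single paragraph"
    return (
        f"Summarize the article below in {shape}, using at most {style.max_words} words "
        f"and a {style.tone} tone. Do not add facts that are not in the article.\n\n"
        f"ARTICLE:\n{article}"
    )


quick_brief = build_prompt("Text.", SummaryStyle(max_words=50, tone="professional", bullet_points=3))
deep_dive = build_prompt("Text.", SummaryStyle(max_words=300, tone="casual"))
```

Keeping the settings in one small object makes it easy to offer presets, such as a 50-word brief and a 300-word deep dive, without rewriting the prompt by hand each time.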

Step 4: Design and Build

Once the logic is set, the system generates the frontend and backend.

- You’ll get a clean, mobile-responsive interface.

- The backend will be cloud-native (ready for AWS or Google Cloud).

- Quality Assurance: The system performs automated checks to make sure everything works before you go live.

Step 5: Launch and Support

With one click, your app is live. But building is only the start.

- Continuous Growth: As your users grow, you can add new features just by describing them.

- Human + AI Support: If you ever need custom logic that’s highly specific, Imagine.bo offers human developer support to work alongside the AI.

Advanced Techniques to Make Your App Stand Out

If you want to move beyond a “basic” summarizer, consider these advanced features:

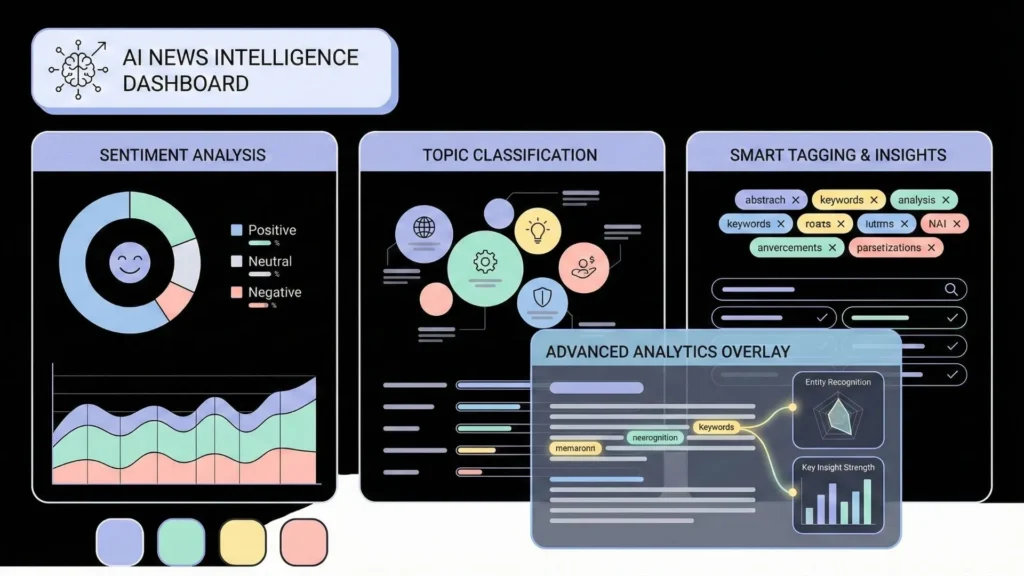

Sentiment Analysis

Don’t just summarize what happened; tell the user how it feels. Sentiment analysis can tag a story as “Positive,” “Negative,” or “Neutral.” This is incredibly useful for financial news where the “tone” of a report can move markets.
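As a rough illustration of the tagging step, here is a tiny lexicon-based sentiment tagger. The word lists and the `tag_sentiment` name are invented for this example; production systems typically rely on a trained model, but the input and output look the same.

```python
import re

# Illustrative word lists only; a real lexicon would be far larger.
POSITIVE = {"gain", "gains", "growth", "record", "surge", "beat", "strong", "profit"}
NEGATIVE = {"loss", "losses", "drop", "decline", "lawsuit", "weak", "miss", "layoffs"}


def tag_sentiment(text: str) -> str:
    """Label text Positive, Negative or Neutral by counting lexicon hits."""
    words = re.findall(r"[a-z]+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "Positive"
    if score < 0:
        return "Negative"
    return "Neutral"


labels = [
    tag_sentiment("Shares surge after record profit"),
    tag_sentiment("Company reports losses and layoffs"),
    tag_sentiment("The meeting is on Tuesday"),
]
```

A counting approach like this misses negation and sarcasm (“not a strong quarter”), which is one reason to treat the tag as a hint for readers rather than a verdict.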

Topic Extraction

Use AI to automatically categorize news. Instead of just a list of stories, your app could have sections like “Economy,” “AI Breakthroughs,” or “Policy Changes” without you ever having to manually sort them.
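To show the shape of automatic categorization, the sketch below sorts headlines into the sections mentioned above using keyword overlap. The keyword sets and function names are assumptions for illustration; an AI-based classifier would replace `assign_topic` while the grouping logic stays the same.

```python
import re

# Hypothetical keyword sets for each section.
TOPIC_KEYWORDS = {
    "Economy": {"inflation", "rates", "gdp", "jobs", "market"},
    "AI Breakthroughs": {"ai", "model", "neural", "chatbot", "training"},
    "Policy Changes": {"law", "regulation", "bill", "senate", "policy"},
}


def assign_topic(headline: str, default: str = "Other") -> str:
    """Pick the topic whose keywords overlap most with the headline."""
    words = set(re.findall(r"[a-z]+", headline.lower()))
    best_topic, best_hits = default, 0
    for topic, keywords in TOPIC_KEYWORDS.items():
        hits = len(words & keywords)
        if hits > best_hits:
            best_topic, best_hits = topic, hits
    return best_topic


def group_by_topic(headlines: list[str]) -> dict[str, list[str]]:
    """Build the app's sections: topic name mapped to its headlines."""
    sections: dict[str, list[str]] = {}
    for headline in headlines:
        sections.setdefault(assign_topic(headline), []).append(headline)
    return sections


sections = group_by_topic(
    [
        "Central bank holds rates as inflation cools",
        "New AI model beats benchmark",
        "Senate passes privacy bill",
        "Local team wins final",
    ]
)
```

Headlines that match no keywords fall into an “Other” bucket, so nothing silently disappears from the feed.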

Integration with Workflow Tools

A summarizer is most powerful where people already work. You can deliver summaries straight into the tools your team already uses:

- Slack/Discord: Send daily digests to your team’s channel.

- Email Newsletters: Automatically generate a “Morning Briefing” email for your subscribers.

- Imagine.bo’s Webhooks: These allow your app to “talk” to thousands of other tools seamlessly.
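For a sense of what a webhook delivery looks like, here is a minimal sketch that formats a daily digest and posts it as JSON. It assumes a Slack-style incoming webhook, which accepts a JSON body with a `text` field; the function names and digest layout are illustrative, and the webhook URL is something you would create in your own workspace.

```python
import json
import urllib.request


def build_digest_payload(stories: list[dict]) -> dict:
    """Format summarized stories as a single message body."""
    lines = ["*Morning Briefing*"]
    for story in stories:
        lines.append(f"- {story['title']}: {story['summary']}")
    return {"text": "\n".join(lines)}


def send_to_webhook(webhook_url: str, payload: dict, timeout: float = 10.0) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


payload = build_digest_payload(
    [
        {"title": "Chip sales hit record", "summary": "Acme reported its best quarter."},
        {"title": "New privacy bill", "summary": "The Senate passed the measure."},
    ]
)
# send_to_webhook("<your webhook URL>", payload)  # uncomment with a real URL
```

Discord webhooks work the same way but expect the message under a `content` key instead of `text`, so only `build_digest_payload` would need to change.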

Real-World Use Cases: Who is this for?

For Founders & Entrepreneurs

Validate your business ideas faster. If you want to create a niche news service or AI SaaS, you can build a revenue-ready MVP (Minimum Viable Product) in days. Because Imagine.bo is enterprise-grade, you don’t have to rebuild it when you get your first 10,000 users.

For Small Businesses

Stay ahead of your competitors. Track every mention of your clients across the web and provide daily “reputation reports” automatically.

For Researchers & Journalists

Cut your “reading time” by 40%. Journalists can use these tools to synthesize reports from multiple international sources simultaneously, ensuring they never miss a crucial angle on a breaking story.

Best Practices for Success

Accuracy is Key

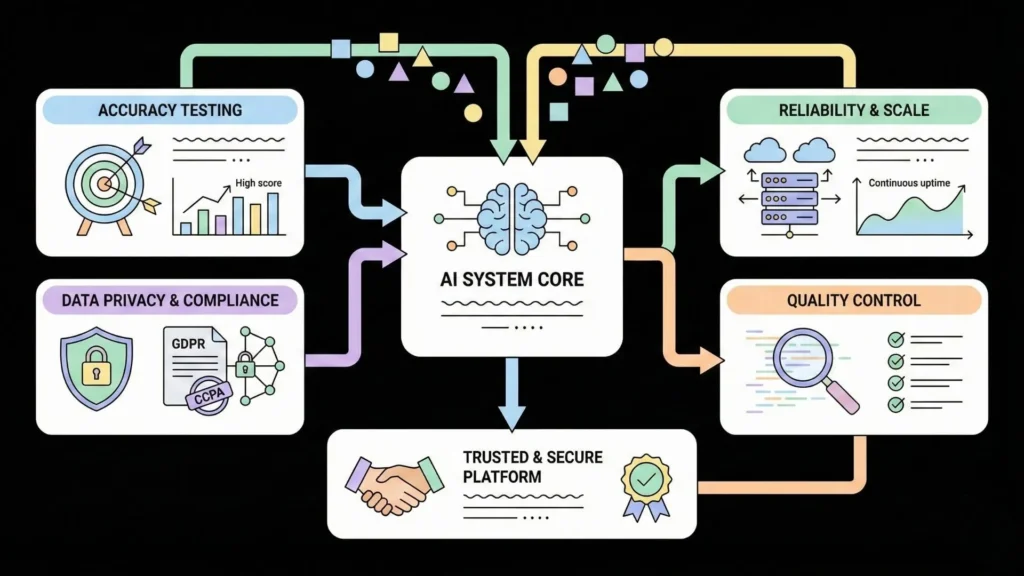

Always test your summarizer against the original text. Look for “hallucinations” and adjust your prompts. Remember, a summarizer that gives wrong information is worse than no summarizer at all.
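One cheap way to test against the original text is to flag any number or capitalized name in the summary that never appears in the source. The sketch below does exactly that; `unsupported_details` is a hypothetical helper, and the check is a heuristic that catches only the most obvious hallucinations, not paraphrased errors.

```python
import re

NUMBER_PATTERN = r"\d+(?:[.,]\d+)*"


def unsupported_details(source: str, summary: str) -> list[str]:
    """Return numbers and capitalized words in the summary that the source lacks."""
    source_numbers = set(re.findall(NUMBER_PATTERN, source))
    source_words = {w.lower() for w in re.findall(r"[A-Za-z]+", source)}

    flagged = []
    for number in re.findall(NUMBER_PATTERN, summary):
        if number not in source_numbers:
            flagged.append(number)
    for name in re.findall(r"\b[A-Z][a-z]+\b", summary):
        if name.lower() not in source_words:
            flagged.append(name)
    return flagged


source = "Acme shipped 1,200 units in March, up 8% from February."
good = unsupported_details(source, "Acme shipped 1,200 units in March.")
bad = unsupported_details(source, "Acme shipped 2,000 units in April.")
```

An empty list means the summary introduced no new numbers or names; anything flagged is a cue to reread the article and tighten the prompt.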

Privacy and Security

If you are building an app for others, data privacy is non-negotiable. Ensure your platform is GDPR-compliant. This is one of the core benefits of Imagine.bo: it builds security into the architecture by default, protecting your users’ data.

Start Small, Scale Fast

Don’t try to summarize “the whole internet” on day one. Start with one niche (e.g., “Renewable Energy News”). Perfect the summaries for that topic, and then use Imagine.bo’s scalability to expand into other categories.

The Future: AI as Your Co-Developer

The evolution of no-code tools is moving toward a future where “ideas” are the only currency. We are entering an era where you don’t need to speak “code” to build software; you just need to speak “vision.”

Platforms like Imagine.bo are leading this charge. By combining the ease of no-code with the rigor of SDE-level engineering, they are empowering a new generation of “Citizen Developers.”

Conclusion

Building an AI News Summarizer is the perfect project to start your AI journey. It’s practical, high-value, and demonstrates exactly how powerful modern AI can be.

Are you ready to stop consuming information and start building the tools that manage it?

Start your journey today. Describe your vision, and let the AI build the future for you.
