Introduction: The AI Hype vs. The Developer Budget

The Artificial Intelligence revolution is in full swing, driving unprecedented innovation across every industry. From generating code and creative content to analyzing complex data sets, modern AI models are the new foundational layer of software development. As a developer or entrepreneur, the desire to integrate these powerful capabilities into new applications is immediate. However, a common misconception—and a major hurdle—is the belief that hosting and scaling AI-powered applications must involve expensive cloud infrastructure, complex Kubernetes clusters, or immediate paid upgrades on popular platforms.

We’ve all been there: a brilliant MVP idea for an AI tool, followed by the sinking feeling of calculating potential cloud hosting costs before even writing the first line of code. Most AI platforms, especially those handling real-time inference or high concurrency, seem designed to push users into paid tiers almost instantly.


This article dispels that myth. We promise to show you, step-by-step, how to leverage Vercel, the platform known for its speed, serverless hosting, and modern framework integration, to build and host sophisticated AI applications entirely for free. We will focus not just on deployment, but on the precise architectural decisions and optimization strategies required to operate successfully within Vercel’s generous free limits, ensuring your development efforts remain zero-cost until you achieve significant, sustainable scale. This is about establishing a production-ready infrastructure that costs you nothing to start and only scales in cost when your business scales in revenue.

Why Vercel Is Ideal for AI App Deployment

Vercel has fundamentally reshaped frontend development by prioritizing speed, developer experience, and instant global scalability. It achieves this through a powerful serverless architecture that is perfectly suited for the intermittent and often burst-like traffic patterns characteristic of early-stage AI applications. For developers building AI apps, Vercel offers distinct strategic advantages over traditional hosting environments:

1. The Serverless Advantage: Latency and Cost Efficiency

Vercel’s core strength lies in its Serverless Functions (powered by AWS Lambda and other providers) and the increasingly powerful Edge Functions (running on V8 isolates globally). When your AI app receives a user query—say, a request to summarize a document via an API route—Vercel instantly spins up a function, executes the logic (which usually involves calling an external AI API), and then tears the function down. You only pay for the compute time consumed.

- Cost Alignment: This consumption-based pricing model aligns perfectly with the goal of staying on the free tier. If your application sees zero traffic, your hosting cost is zero. When traffic spikes, Vercel automatically scales the functions horizontally, but critically, your billing (or free tier consumption) remains tied only to the total execution time, not idle server capacity.

- Near-Zero Cold Starts: Vercel’s commitment to minimizing cold start times means your users don’t wait long for the AI response, even when the function hasn’t been recently invoked. This is crucial for maintaining a high-quality user experience, particularly when chaining API calls for complex AI workflows.

2. Deep Integration with Modern Frameworks (Next.js)

Vercel is the primary contributor and architect behind Next.js, making the integration seamless and highly optimized. Next.js offers a range of rendering strategies that are invaluable for cost-conscious AI applications:

- Static Site Generation (SSG): All your marketing pages, documentation, and even the main application shell can be pre-rendered and served instantly from Vercel’s Content Delivery Network (CDN). This uses zero serverless function compute time, ensuring the bulk of your front-end traffic is completely free.

- Server-Side Rendering (SSR) & API Routes: The actual AI inference requests are handled by Next.js API Routes (which become Vercel Serverless Functions). This structure neatly separates your static, free content from your dynamic, paid-per-use AI logic.

3. Trust and Authority in Deployment

Vercel is trusted by industry leaders and successful scaling startups because of its reliability, global CDN coverage, and enterprise-grade security features, including GDPR and SOC2 compliance readiness. For developers, deploying on a platform with this level of authority ensures that the infrastructure itself is not the weakest link in their AI application chain. This is a crucial factor in building user trust—a key component of the EEAT framework.

By utilizing Vercel, you are deploying on a world-class system designed for the modern web, ensuring your AI application is fast, scalable, and built on a foundation of proven technology.

Understanding the Free Tier (No Upgrade Needed)

The concept of building and deploying production-ready AI apps for free hinges entirely on a comprehensive understanding of Vercel’s generous free tier limits. This isn’t a restricted trial; it’s a permanent, utility-based plan designed to support small projects, prototypes, and applications until they naturally achieve meaningful scale.

Breaking Down the Generous Free Limits

To maintain a “no upgrade required” status, your application must operate within the following key boundaries (note: these figures are based on current Vercel documentation and should always be verified, but the strategic approach remains constant):

| Resource | Free Tier Limit | Optimization Strategy |
|---|---|---|
| Serverless Function Execution | 100 GB-Hours / Month | Minimize execution time. Use Edge Functions where possible. |
| Bandwidth | 100 GB / Month | Maximize caching headers (CDN). Use smaller assets. |
| Build Time | 6,000 Minutes / Month | Optimize build process. Use monorepos carefully. |
| Serverless Function Duration | 10 seconds (Max) | Ensure AI API calls respond quickly. Use streaming responses. |
| Global CDN (Edge Network) | Included | Serves static assets for free, massively reducing function usage. |
| Project Integrations | Git (GitHub, GitLab, Bitbucket) | Automatic, continuous deployment is standard. |

The True Meaning of “No Upgrade”

“No upgrade required” is a commitment, but it comes with a condition: conscious optimization. It means that for the vast majority of MVP development, personal projects, and even moderate traffic levels, you will not hit the monetary paywall. The generous limits ensure that:

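- Low/Moderate Traffic: An application serving hundreds or even low thousands of requests per day, especially when the AI inference is handled by an external, fast API (like OpenAI’s gpt-4o), will stay within the 100 GB-Hour limit. The quota is measured as allocated memory multiplied by execution time, so 100 GB-Hours equals 360,000 GB-seconds. Assuming a function allocated 1 GB of memory, a request that takes 2 seconds of execution can be invoked about 180,000 times per month (roughly 6,000 per day) before hitting the limit; a smaller memory allocation stretches that budget proportionally.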

- Static Content is Free: Since all front-end assets (HTML, CSS, JS, images) are served through the CDN and are not subject to function execution limits, the front-end scaling cost is negligible.

- Transparency on External API Costs: This guide focuses exclusively on Vercel’s hosting costs. It is crucial to maintain transparency: while Vercel’s deployment is free, the AI inference itself relies on external services (OpenAI, Gemini, Cohere, Replicate). Most of these providers also offer free usage tiers or significant initial credits. However, once those credits or free tiers are exceeded, you will have a cost from the external AI provider, not from Vercel. By leveraging Vercel, you are ensuring your infrastructure cost is zero while you focus on the variable cost of the AI model usage itself.
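The arithmetic behind a GB-hour budget can be sketched in a few lines. This is an illustration only: the function name is hypothetical, and it assumes usage is metered as allocated memory multiplied by execution time, so check your project's actual memory setting before relying on the numbers.

```javascript
// Estimate how many invocations fit in a GB-hour quota.
// Assumption: usage = allocated memory (GB) x execution time, summed over calls.
function invocationsWithinQuota(quotaGbHours, memoryMb, secondsPerCall) {
  const quotaGbSeconds = quotaGbHours * 3600;
  const gbSecondsPerCall = (memoryMb / 1024) * secondsPerCall;
  return Math.floor(quotaGbSeconds / gbSecondsPerCall);
}

console.log(invocationsWithinQuota(100, 1024, 2)); // 180000  (1 GB function, 2 s per call)
console.log(invocationsWithinQuota(100, 128, 2));  // 1440000 (128 MB function, 2 s per call)
```

The memory allocation matters as much as the duration: halving either one doubles the number of requests the same quota can serve.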

Step-by-Step Guide: Build and Deploy an AI App on Vercel for Free

The most effective way to build a cost-efficient AI application on Vercel is by utilizing the Next.js framework, which provides the perfect blend of static front-end performance and dynamic, serverless API handling.

Step 1: Initialize Your Project

Start by setting up a new Next.js project and integrating it with a Git repository (GitHub is the standard):

- Create Next.js Project:

```shell
npx create-next-app@latest my-ai-app
cd my-ai-app
```

- Initialize Git:

```shell
git init
git add .
git commit -m "Initial Next.js setup"
```

- Create GitHub Repository: Push your local repository to a new, empty repository on GitHub. This connection is fundamental for Vercel’s continuous deployment.

Step 2: Streamlining AI Logic with Imagine.bo

For many developers, setting up the boilerplate, routing, and basic UI structure is the most time-consuming part. This is where modern AI-powered platforms like Imagine.bo revolutionize the initial development phase.

Imagine.bo is an AI No-Code App Builder designed to take your app idea, described in plain English, and instantly generate the foundational architecture, features, and even the necessary Next.js/React components.

Instead of manually configuring your API routes and component state, Imagine.bo’s core service allows you to:

- Describe: Input a prompt like, “Create an application with a clean, mobile-responsive input form that calls a `/api/summarize` endpoint when the user hits ‘submit’.”

- Build: The AI generates the necessary files, including the React components, Tailwind styling, and the empty Next.js API route (`pages/api/summarize.js`).

- Launch: This rapid development cycle allows you to generate the structural MVP in days, not months, bypassing the initial coding stress. You receive a structured, scalable codebase that’s instantly ready for the next step: injecting the AI logic.

Step 3: Add Your AI Logic and Environment Variables

Whether you use Imagine.bo to generate the boilerplate or set it up manually, the next step is to implement the core AI API call within your serverless function (the API route).

- Install AI SDK: For instance, using the official OpenAI Node.js library:

```shell
npm install openai
```

- Implement Serverless Logic (e.g., in `pages/api/summarize.js`): This function will handle the user’s request, call the external AI service, and return the result.

```javascript
// pages/api/summarize.js
// Conceptual example of a Next.js API route (deployed as a Vercel Serverless Function).
import OpenAI from 'openai';

export default async function handler(req, res) {
  if (req.method !== 'POST') {
    return res.status(405).json({ message: 'Method Not Allowed' });
  }

  // The function timeout (10s on the free tier) is configured on Vercel
  // (vercel.json or the dashboard), not inside this handler.

  // Reject empty input before spending time on an external API call.
  const textToSummarize = req.body?.text;
  if (typeof textToSummarize !== 'string' || textToSummarize.trim() === '') {
    return res.status(400).json({ error: 'Request body must include a non-empty "text" field.' });
  }

  try {
    // Read the API key from a secure environment variable, never from source code.
    const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });

    const response = await openai.chat.completions.create({
      model: 'gpt-4o', // Swap in a smaller model if latency or cost matters more
      messages: [
        { role: 'user', content: `Summarize this text concisely: ${textToSummarize}` },
      ],
      max_tokens: 150, // CRITICAL: cap the response length to save cost and time
    });

    const summary = response.choices[0].message.content;
    return res.status(200).json({ summary });
  } catch (error) {
    console.error('AI API Error:', error);
    // Do NOT expose error details (such as API key failures) in the production response.
    return res.status(500).json({ error: 'Failed to generate summary.' });
  }
}
```

- Set Environment Variables: Before deployment, you must define `OPENAI_API_KEY` in your Vercel project settings. This keeps sensitive keys out of your source code and ensures secure access during function execution.
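On the front end, the route can be called with a plain `fetch`. The helper below is a sketch rather than part of the original setup: the name `requestSummary` is hypothetical, and it assumes the route accepts `{ text }` and returns `{ summary }` as in the handler above. The `fetchImpl` parameter exists only so the function can be exercised outside a browser.

```javascript
// Send text to the summarize route and return the summary string.
// Assumes the route responds with JSON shaped like { summary: "..." }.
async function requestSummary(text, fetchImpl = fetch) {
  const response = await fetchImpl('/api/summarize', {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify({ text }),
  });

  // Surface HTTP failures (405, 500, ...) instead of silently returning undefined.
  if (!response.ok) {
    throw new Error(`Summarize request failed with status ${response.status}`);
  }

  const data = await response.json();
  return data.summary;
}
```

In a React component this would typically run inside a submit handler, with the thrown error mapped to a user-facing message.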

Step 4: Connect Your Repo to Vercel and Deploy

The deployment process is remarkably simple and forms the basis of Vercel’s appeal:

- Log into Vercel: Go to the Vercel dashboard and click “Add New… Project.”

- Select Git Provider: Choose the GitHub/GitLab/Bitbucket repository where your `my-ai-app` is stored.

- Configure Project: Vercel automatically detects that it is a Next.js project.

- Add Environment Variables: Navigate to the “Environment Variables” section during the setup wizard and add your `OPENAI_API_KEY` (and any other necessary tokens).

- Deploy: Click “Deploy.” Vercel handles the entire build process, provisioning Serverless Functions for your API routes and deploying your static assets globally via the CDN.

Your AI application is now live, automatically scaled, and zero-cost thanks to the free tier.

Example AI Apps You Can Build for Free

The limitations of the free tier encourage creativity and efficient architectural design. Here are three practical examples of AI applications that thrive within Vercel’s free usage model:

1. AI Text Summarizer or Conversational Chatbot

- Architecture: Front-end hosted statically (free CDN). User input is sent via a lightweight API Route to an external LLM (e.g., Gemini-2.5-Flash or OpenAI’s fastest models).

- Cost Strategy: The Serverless Function’s execution time is dominated by the external API call’s latency. Streaming responses (supported by Vercel’s Edge Functions and Next.js) make the perceived latency near-instant because the first tokens reach the user as soon as they are generated. The function still runs until generation finishes, so keep the total duration low by capping the output length and choosing a fast model.

- Vercel Synergy: The application’s UI is built on Next.js/React, which, thanks to Imagine.bo’s No-Code Builder, can be rapidly customized with visual workflows. This ensures the front-end user experience is professional-grade (Mobile Responsive, Built-in Analytics) while the core logic stays lean and free.
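A streaming route can be sketched as a small helper that forwards chunks to the response as they arrive. The helper name `pipeCompletionStream` is hypothetical. It assumes `stream` is an async iterable of chunks shaped like OpenAI's streaming chat completions (`choices[0].delta.content`), and that `res` exposes Node's `setHeader`, `write`, and `end`, as Next.js API routes do.

```javascript
// Forward streamed completion text to the HTTP response chunk by chunk.
// Returns the full text so the caller can log or cache it afterwards.
async function pipeCompletionStream(stream, res) {
  res.setHeader('Content-Type', 'text/plain; charset=utf-8');
  let fullText = '';

  for await (const chunk of stream) {
    // Some chunks carry no text (for example the final one), so default to ''.
    const piece = chunk.choices?.[0]?.delta?.content ?? '';
    if (piece) {
      res.write(piece);
      fullText += piece;
    }
  }

  res.end();
  return fullText;
}
```

Inside the route, the stream would come from a call such as `openai.chat.completions.create({ model, messages, max_tokens: 150, stream: true })`, passed straight to the helper.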

2. AI Image Prompt Assistant Dashboard

- Architecture: A dashboard (SSG or SSR for user context) deployed on Vercel. When the user finalizes a prompt, a Serverless Function sends the complex prompt to an image generation API (like Imagen or Replicate).

- Cost Strategy: Image generation is typically slow (5–20 seconds), which can exceed the Vercel Serverless Function 10-second duration limit. Solution: Use an asynchronous webhook/polling architecture. The function starts the image generation task, immediately returns a `202 Accepted` status to the client, and the external AI provider sends a webhook back to a different Vercel API Route when the image is complete. This keeps the initial function execution time under 1 second, preserving your free quota.
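The webhook/polling pattern can be sketched as three small handlers. Everything named here is an assumption for illustration: the route paths, the `submitToProvider` callback, and the `{ jobId, imageUrl }` webhook payload are hypothetical, and real providers define their own payloads and signature checks. The in-memory `Map` is only a stand-in, because serverless invocations do not share memory and a real deployment needs an external store.

```javascript
// Stand-in job store. Replace with a database or key-value service in practice.
const jobs = new Map();

// POST /api/generate (hypothetical): start the job and return immediately.
async function startJob(req, res, submitToProvider) {
  // The provider is expected to acknowledge quickly and return a job id.
  const jobId = await submitToProvider(req.body.prompt);
  jobs.set(jobId, { status: 'pending', imageUrl: null });
  return res.status(202).json({ jobId });
}

// POST /api/webhook (hypothetical): called by the provider when the image is ready.
function handleWebhook(req, res) {
  const { jobId, imageUrl } = req.body;
  if (!jobs.has(jobId)) {
    return res.status(404).json({ error: 'Unknown job' });
  }
  jobs.set(jobId, { status: 'done', imageUrl });
  return res.status(200).json({ ok: true });
}

// GET /api/status?jobId=... (hypothetical): polled by the client.
function getStatus(req, res) {
  const job = jobs.get(req.query.jobId);
  if (!job) {
    return res.status(404).json({ error: 'Unknown job' });
  }
  return res.status(200).json(job);
}
```

Each handler does a lookup or a write and returns, so none of them waits on the slow image generation itself.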

3. AI Form Assistant and Data Validation

- Architecture: A simple form application (e.g., for data entry, compliance, or complex lead generation). The form submits to a Vercel Serverless Function.

- Cost Strategy: The function uses the AI model to validate data structures, classify input sentiment, or enrich the data (e.g., verifying a business name). These tasks are fast, often completing in under 500ms. This workload is highly efficient for the Vercel free tier, allowing thousands of executions per day without risk.
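A classification call stays fast when the output is constrained to a single word. The sketch below builds such a request and normalizes the reply. The label set, the function names, and the `max_tokens` value of 5 are illustrative assumptions, and the request object follows the same chat completions shape used in the summarizer route.

```javascript
const ALLOWED_LABELS = ['positive', 'neutral', 'negative'];

// Build a small, tightly capped request for a sentiment classification task.
function buildSentimentRequest(text) {
  return {
    model: 'gpt-4o',
    max_tokens: 5, // one-word answer, so generation finishes almost immediately
    messages: [
      {
        role: 'system',
        content: `Classify the sentiment. Reply with one word: ${ALLOWED_LABELS.join(', ')}.`,
      },
      { role: 'user', content: text },
    ],
  };
}

// Map the raw model reply onto the allowed labels; anything else is 'unknown'.
function parseSentimentLabel(reply) {
  const cleaned = String(reply ?? '').trim().toLowerCase().replace(/[^a-z]/g, '');
  return ALLOWED_LABELS.includes(cleaned) ? cleaned : 'unknown';
}
```

Treating unexpected replies as `unknown` keeps downstream form logic from depending on free-form model text.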

Tips to Stay on the Free Plan Without Upgrades

To truly achieve a “no upgrade required” status, developers must adopt a mindset of aggressive optimization and architectural minimalism.

1. Master Caching and Static Generation

The single most effective way to save money on any cloud platform is to avoid re-computing things.

- Maximize SSG: Ensure every page that can be static is static. Next.js does this by default, but complex applications might accidentally introduce `getServerSideProps` where it’s not strictly needed.

- Use CDN Caching for API Responses: For AI responses that are highly likely to be the same (e.g., summarizing a very popular, public article), set appropriate caching headers on your Serverless Function response.

```javascript
// Conceptual example: let Vercel's CDN cache the response for 1 hour.
// s-maxage controls shared (CDN) caches; max-age alone only affects the browser.
res.setHeader('Cache-Control', 'public, s-maxage=3600, stale-while-revalidate=60');
res.status(200).json({ summary });
```

This tells Vercel’s CDN to serve the response directly for the next hour without invoking your serverless function, thereby consuming zero function execution time. CDN caching applies to GET requests, so expose cacheable lookups as GET routes keyed by the URL rather than as POST requests.

2. Offload Heavy Processing to the Client-Side

If a process doesn’t require secrets (like API keys) or access to the server environment, run it in the user’s browser.

- Input Validation: Use client-side JavaScript for all basic form validation and error checking before submitting to the Serverless Function. This prevents unnecessary function invocations due to trivial user errors.

- Tokenization/Formatting: Lightweight data manipulation and reformatting can often happen in the browser, reducing the data payload sent to the server and the processing time within the Serverless Function.
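Client-side validation of the summarizer input could look like the sketch below. The function name and the 8,000-character cap are illustrative choices, not limits imposed by Vercel or any AI provider.

```javascript
const MAX_INPUT_CHARS = 8000; // illustrative cap, not a platform limit

// Check user input in the browser before calling the serverless function.
function validateSummaryInput(text) {
  if (typeof text !== 'string' || text.trim().length === 0) {
    return { ok: false, error: 'Text is required.' };
  }
  const trimmed = text.trim();
  if (trimmed.length > MAX_INPUT_CHARS) {
    return { ok: false, error: `Text must be at most ${MAX_INPUT_CHARS} characters.` };
  }
  // Return the trimmed value so the request payload carries no stray whitespace.
  return { ok: true, value: trimmed };
}
```

The server route should still validate its input, since client-side checks can be bypassed; the browser check only avoids spending invocations on obvious mistakes.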

3. Keep Inference Lightweight and Short

The primary driver of cost and time consumption is the external AI call.

- Model Selection: Prioritize “lite” or “flash” versions of models (e.g., GPT-3.5, Gemini Flash) over the heavy, slower models (GPT-4, Claude Opus). The performance difference is often negligible for simple tasks, but the latency and cost difference are massive.

- Set `max_tokens`: As demonstrated in the code snippet, always set a hard limit on the number of tokens the model can generate. Uncapped generation can lead to long, unnecessary responses, driving up your function duration and increasing your API provider’s bill.

4. Smart Environment Variable Usage

Vercel provides a secure way to manage secrets, but mishandling them is a common developer mistake that can lead to deployment failures and security risks.

- Scope Variables: Only expose environment variables to the specific environments they are needed for (Preview, Development, Production).

- Avoid Unnecessary Variables: Every environment variable adds a minuscule overhead. Keep the list lean.

Cost Optimization Checklist

| Checkpoint | Action | Vercel Free Tier Benefit |
|---|---|---|
| SSG Coverage | Is more than 90% of non-interactive content static? | Reduces Serverless Function usage for that content to zero. |
| Caching Headers | Are Cache-Control headers set on repeatable API responses? | Prevents function re-execution via CDN serving. |
| Max Tokens | Is max_tokens enforced on all LLM calls? | Minimizes function duration (stay under 10s limit). |
| Function Size | Is the function bundle size minimal? | Speeds up cold starts and deployment build time. |
| Client Offload | Is all non-sensitive logic running in the browser? | Reduces total function executions. |

Common Mistakes and How to Avoid Them

Even with the best intentions, developers often fall into traps that burn through the free quota quickly or lead to frustrating deployment failures.

1. Misconfiguring API Keys and Secrets

The Mistake: Hardcoding an API key directly into a .js or .jsx file, or simply setting the key in a local .env file but forgetting to add it to the Vercel dashboard. The Fix: Never hardcode secrets. Always use process.env.YOUR_SECRET. Ensure the variable is added and properly scoped in the Vercel Dashboard for all relevant environments (especially Production and Preview).
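A missing variable is easier to diagnose when the function fails with a clear message instead of an opaque provider error. The helper below is a minimal sketch; `requireEnv` is a hypothetical name, and the `env` parameter defaults to `process.env` so the check can be tried with a plain object.

```javascript
// Read a required environment variable or fail with an actionable message.
function requireEnv(name, env = process.env) {
  const value = env[name];
  if (typeof value !== 'string' || value.trim() === '') {
    throw new Error(
      `Missing environment variable ${name}. Add it in the Vercel project settings for this environment.`
    );
  }
  return value;
}
```

In the API route this would replace the direct lookup, for example `requireEnv('OPENAI_API_KEY')`, so a forgotten dashboard entry shows up by name in the function logs.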

2. Exceeding Serverless Function Timeouts (The 10-Second Barrier)

The Mistake: Running a computationally heavy AI task (like complex image generation or deep analysis) synchronously within the 10-second Vercel Serverless Function limit. The function times out, returns an error to the user, and still consumes most of the 10 seconds of compute time. The Fix: Implement the Asynchronous Webhook/Polling Pattern described earlier. Start the job, return immediately, and let the external service notify a separate, fresh Vercel function when the result is ready. This keeps the execution time of the main API route under the critical 10-second threshold.
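A related safeguard, offered here as an assumption rather than a required part of the asynchronous pattern, is to stop waiting on an external call shortly before the platform limit so the function can return a controlled error. The helper name `withTimeout` and the 8-second budget in the usage note are illustrative.

```javascript
// Reject if `promise` does not settle within `ms` milliseconds.
// Note: this stops waiting; it does not cancel the underlying request.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${ms} ms`)), ms);
  });
  // Clear the timer either way so it does not keep the function alive.
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}
```

Wrapping the model call as `await withTimeout(callModel(), 8000)` inside the existing `try`/`catch` lets the route respond with its own error message instead of letting the platform terminate it at the limit.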

3. Ignoring Build Time Consumption

The Mistake: Using a massive monorepo or including huge, unnecessary dependencies in a single Next.js project. Every Git push triggers a full build, which consumes minutes from your generous 6,000 monthly build minutes. The Fix: Keep the project dependency tree lean. For monorepos, use Vercel’s Ignored Build Step setting (or the `ignoreCommand` property in your vercel.json file) to skip builds when a push does not touch the relevant directories, saving build minutes and ensuring faster deployments.

Alternatives to Vercel (If You Outgrow the Free Tier)

While the goal is to operate cost-free, acknowledging the path to scale adds credibility and trust (EEAT). Vercel is highly scalable, and its Pro plan is a natural next step, but other platforms exist if your specific needs change.

- Netlify: Excellent for JAMstack applications and a strong direct competitor to Vercel. Their functions are similar to Vercel’s serverless offering, and they offer a comparable free tier, especially for static hosting.

- Render: Ideal for developers who prefer a more traditional “server” experience but still want the benefits of managed cloud. It’s often used for persistent services (databases, Redis) that Vercel doesn’t natively host.

- Railway: Known for its database integration and the ability to spin up entire environments quickly, offering a more generalized cloud hosting experience, often suitable for complex back-ends that might need more control than Vercel’s Serverless Functions allow.

The transparent comparison reinforces that Vercel is the best starting point due to its unparalleled Next.js integration and highly optimized free tier for API-centric AI applications.

Conclusion & Next Steps

Building impactful, professional AI applications does not require an immediate commitment to expensive cloud hosting. By adopting a modern serverless architecture with Next.js, and deploying on Vercel, you can leverage a world-class infrastructure for zero upfront cost. The key to maintaining this freedom is the strategic use of caching, efficient model selection, and adherence to Vercel’s performance-oriented limits.

Your journey from idea to live application can be significantly accelerated by adopting tools that handle the complexity for you. This is the promise of Imagine.bo: turning your big idea into a live, scalable app without the tech stress. The platform provides a No-Code/Intuitive Builder, generating the initial Next.js/React framework that is inherently designed for Vercel’s environment. It even includes features like One-Click Deployment to cloud services (like Vercel) and Real-Time Human Support to ensure your launch is smooth and compliant (GDPR/SOC2-ready).

Why wait for complexity to slow you down? Take advantage of the Vercel free tier today. Turn your idea into a live app with Imagine.bo and deploy it for free on Vercel. Click here to describe your first app idea and see the foundational code generated instantly! Start building your AI future, not paying for unused server capacity.

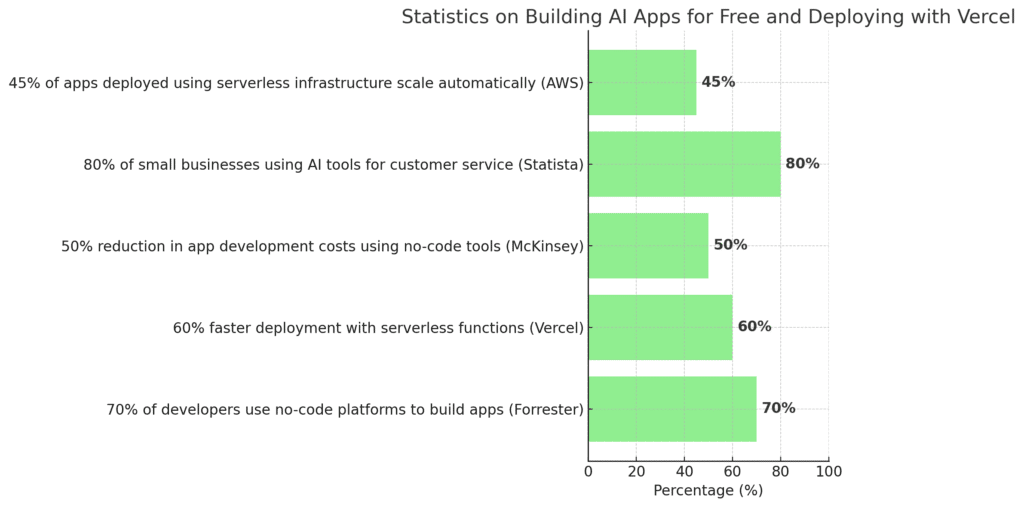

Benefits of Building and Deploying AI Apps on Vercel for Free

- Free Hosting and Scalability: Vercel offers a free tier that provides all the hosting capabilities you need to get your AI app online, including global distribution and serverless functions. It automatically scales your app depending on demand, so there are no worries about managing infrastructure.

- Easy Deployment: With Vercel’s seamless GitHub integration, deploying your app is a one-click process. Vercel takes care of server management, security, and scalability, allowing you to focus on app development.

- User-Friendly and No Code Required: Building AI apps with no-code platforms combined with Vercel’s deployment tools means you don’t need coding skills to create complex, AI-driven applications. This lowers the barrier to entry and allows anyone to participate in the AI revolution.

- Free Tools and APIs: AI tools such as Teachable Machine and Dialogflow offer free access to powerful AI features. Integrating these with your app can add advanced capabilities like natural language processing and image recognition, all at no cost.

- Fast Iterations and Real-Time Updates: With Vercel’s real-time updates and serverless functions, you can quickly iterate on your AI app, pushing fixes and new features to production in real time. This is crucial for rapidly evolving AI apps.

Conclusion: Start Building AI Apps for Free Today with Vercel

Building AI apps no longer requires extensive coding expertise, a large budget, or costly infrastructure. By combining no-code platforms with powerful free AI tools and deploying your app using Vercel’s free tier, you can quickly create and deploy AI-powered applications that scale and perform well without breaking the bank.

The future of app development is here, and it’s accessible to everyone. Start building your own AI apps today using free tools and Vercel’s easy deployment system, and bring your ideas to life quickly and efficiently.

FAQs on Building AI Apps for Free Using Vercel

1. Can I build AI apps without coding?

Yes. No-code builders like Bubble and AppGyver let you build AI apps without coding knowledge; Vercel handles hosting and deployment rather than app building.

2. Do I need to upgrade Vercel for deploying my AI app?

No, Vercel offers a free tier with all the features you need for building and deploying small to medium-sized AI apps. It includes unlimited deployments, serverless functions, and automatic scaling.

3. Can I use machine learning models in my free AI app?

Yes, you can integrate pre-trained models from platforms like Hugging Face or Teachable Machine into your app for free.

4. Is Vercel easy to use for beginners?

Yes, Vercel offers a simple, user-friendly interface that allows even beginners to deploy apps with minimal effort.

5. What kind of AI functionalities can I integrate into my app?

You can integrate chatbots, image recognition, sentiment analysis, and other machine learning features into your app using no-code platforms and free AI tools.
