The “metaverse” might have been a buzzword that cooled off in the headlines, but the technology behind it, Augmented Reality (AR) and Virtual Reality (VR), has been quietly undergoing a massive, AI-fueled transformation.

Until recently, if you wanted to build a digital world, you needed a PhD in C++, a small army of 3D modelers, and a budget that could rival a mid-sized film production. But the gatekeepers are being sidelined. We are entering the era of No-Code Immersive Creation, where the distance between a “thought” and a “rendered world” is shrinking to almost zero. In fact, many creators are finding that they can build an app by describing it rather than writing complex scripts.

Launch Your App Today

Ready to launch? Skip the tech stress. Describe, Build, Launch in three simple steps.

Build

In this guide, we’ll explore how Artificial Intelligence is acting as the “operating system” for the next generation of digital experiences, and how you, regardless of your technical background, can start building today.

Understanding the Synergy: When AI Meets AR/VR

For a long time, AI and AR/VR were treated like two separate tracks in the tech world. AI was about “brains” (data, logic, processing), while AR/VR was about “body” (visuals, hardware, presence). Today, that distinction has vanished. AI is now the core engine that makes immersion possible.

The Shift from Manual to Generative

In traditional development, if you wanted a forest in your VR game, an artist had to manually place every tree, rock, and blade of grass. If you wanted a character to talk to you, a programmer had to write “If/Then” statements for every possible interaction. This evolution is part of a broader shift where no-code AI development is becoming the standard for modern software creation.

AI changes the game through:

- Procedural Content Generation (PCG): You give the AI a set of rules (e.g., “create a medieval village in a rainy climate”), and it generates the entire environment dynamically. This means worlds can be infinite and varied without increasing the artist’s workload.

- Intelligent Behaviors: Instead of following a script, virtual characters (NPCs) use Large Language Models (LLMs) to have real, unscripted conversations with users. They remember past interactions and react with emotional intelligence.

- Real-time Adaptation: AI monitors how you move and react, adjusting the difficulty of a simulation or the lighting of a room to match your personal preferences in milliseconds.
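Under the hood, procedural generation is mostly rules plus a seeded random number generator; AI tools typically sit on top of that to turn a prompt into rules. A minimal Python sketch, assuming a hypothetical `CLIMATE_RULES` table and a flat 2D map. The seed makes the same “world” reproducible, and rejection sampling keeps houses from overlapping:

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Prop:
    kind: str
    x: float
    y: float


# Hypothetical rule table: climate -> roof material and scattered details.
CLIMATE_RULES = {
    "rainy": {"roof": "slate", "extras": ["puddle", "puddle", "mossy_rock"]},
    "dry": {"roof": "thatch", "extras": ["haystack"]},
}


def generate_village(seed: int, n_houses: int, climate: str,
                     size: float = 100.0, min_gap: float = 8.0,
                     max_tries: int = 1000) -> list:
    """Place up to n_houses at random, never closer than min_gap (rejection sampling)."""
    rng = random.Random(seed)  # same seed -> same village every time
    rules = CLIMATE_RULES[climate]
    houses = []
    tries = 0
    while len(houses) < n_houses and tries < max_tries:
        tries += 1
        x, y = rng.uniform(0, size), rng.uniform(0, size)
        # Reject the candidate if it is too close to any house already placed.
        if all(math.hypot(x - h.x, y - h.y) >= min_gap for h in houses):
            houses.append(Prop(f"house_{rules['roof']}", x, y))
    # Scatter climate-specific details anywhere on the map.
    extras = [Prop(kind, rng.uniform(0, size), rng.uniform(0, size))
              for kind in rules["extras"]]
    return houses + extras


village = generate_village(seed=42, n_houses=10, climate="rainy")
```

Changing only the seed yields a different but equally valid village, which is how procedural worlds stay varied without extra hand-placed art.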

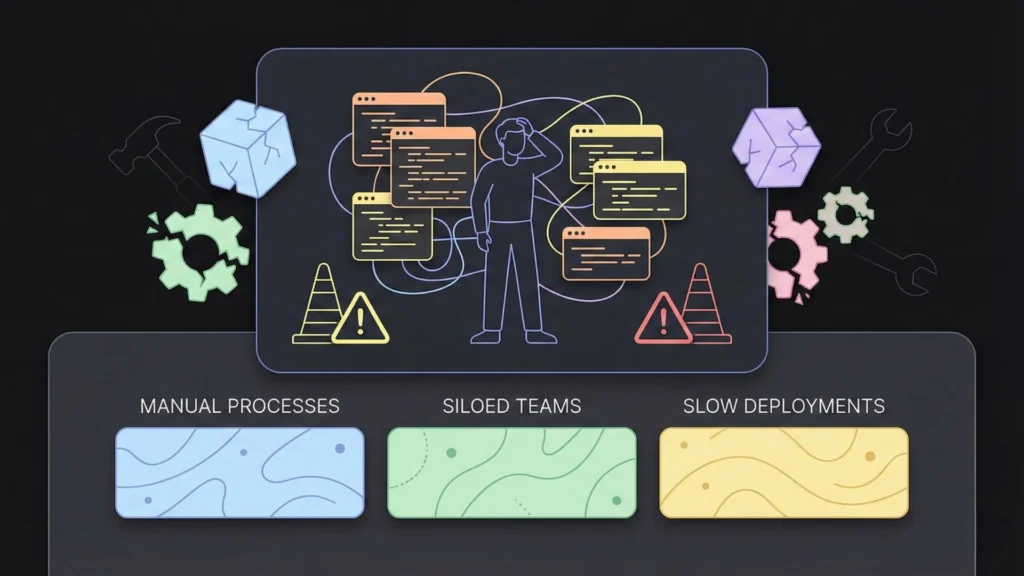

Why Traditional Development Was Broken

If AR and VR are so great, why isn’t every business using them yet? To put it bluntly: it was too hard.

The Barrier Trio: Cost, Time, and Talent

- Technical Complexity: Coding for spatial computing is notoriously difficult. Handling “6 Degrees of Freedom” (6DoF), i.e. movement along three axes plus rotation around them, and ensuring that digital objects stay “pinned” to the real world requires complex math and physics.

- Asset Bottlenecks: Creating a single high-quality 3D model could take a professional artist days. A full world? Months. This bottleneck often led to “placeholder” art that broke immersion.

- Iteration Friction: In traditional dev, making a small change often meant recompiling the entire project and waiting for long render times. This killed the creative flow and made experimentation prohibitively expensive.
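The “pinning” problem reduces to a rigid-body transform: a tracked anchor has a 6DoF pose (a rotation R and a translation t), and every point of a virtual object defined relative to that anchor is placed in the world as R·p + t, recomputed each frame as tracking updates. A dependency-free Python sketch, assuming Z-Y-X (yaw-pitch-roll) Euler angles in radians; real AR frameworks usually expose quaternions or 4×4 matrices and may use a different axis convention:

```python
import math


def rotation_matrix(yaw: float, pitch: float, roll: float) -> list:
    """3x3 rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cy, sy = math.cos(yaw), math.sin(yaw)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cr, sr = math.cos(roll), math.sin(roll)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def anchor_to_world(local_point, anchor_position, anchor_rotation) -> tuple:
    """world = R @ p + t: place an anchor-relative point in world coordinates."""
    return tuple(
        sum(anchor_rotation[i][j] * local_point[j] for j in range(3))
        + anchor_position[i]
        for i in range(3)
    )


# A point 1 m along the anchor's x-axis, with the anchor turned 90 degrees (yaw).
R = rotation_matrix(math.pi / 2, 0.0, 0.0)
world = anchor_to_world((1.0, 0.0, 0.0), (2.0, 0.0, 0.0), R)
```

Here `world` comes out as approximately (2.0, 1.0, 0.0): the rotation swings the offset from the x-axis onto the y-axis before the anchor’s position is added.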

This “barrier to entry” meant that only AAA gaming studios or Fortune 500 companies could afford to play in this space. Founders with brilliant ideas for education, therapy, or retail were often left on the sidelines because they couldn’t afford a $200,000 prototype. Fortunately, there are now no-code tools built to help you launch your startup fast, removing these financial and technical hurdles.

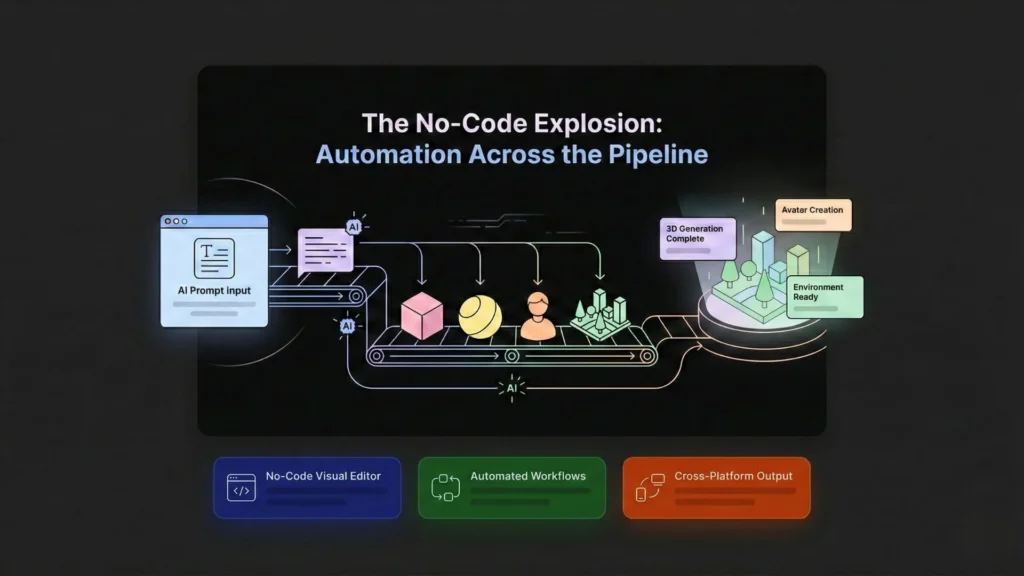

The No-Code Explosion: Automation Across the Pipeline

We are currently witnessing the “Canva-fication” of 3D worlds. AI-powered no-code platforms are automating the most tedious parts of the development pipeline.

Where AI is Doing the Heavy Lifting:

- 3D Modeling and Texturing: AI can now take a simple text prompt and generate a 3D mesh. It can also “hallucinate” high-resolution textures, turning a flat box into a realistic wooden crate with rusted iron hinges.

- Rigging and Animation: AI can now take a video of a human moving and quickly retarget that motion onto a 3D avatar, with no expensive motion-capture suit required. This allows for lifelike movement in even the smallest indie projects.

- Spatial Mapping and Occlusion: AI helps devices understand their surroundings better. It enables “occlusion,” which is the ability for a digital dragon to disappear behind your real-world sofa instead of being drawn on top of it as if the sofa weren’t there.
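At its simplest, occlusion is a per-pixel depth comparison: the virtual pixel is drawn only where the virtual object is closer to the camera than the real surface. The sketch below assumes the device (or an AI depth-estimation model) already supplies a depth map of the real scene in metres; production renderers do this on the GPU with softened edges, but the rule is the same:

```python
def composite(camera, virtual_rgb, virtual_depth, real_depth):
    """Return the final frame, choosing the camera or virtual pixel per position.

    All inputs are equally sized 2D lists. Depths are in metres;
    None in virtual_depth means no virtual content at that pixel.
    """
    frame = []
    for r in range(len(camera)):
        row = []
        for c in range(len(camera[r])):
            vd = virtual_depth[r][c]
            # Draw the virtual pixel only if it sits in front of the real surface.
            if vd is not None and vd < real_depth[r][c]:
                row.append(virtual_rgb[r][c])
            else:
                row.append(camera[r][c])
        frame.append(row)
    return frame


# One row of three pixels: a sofa 1.5 m away in the middle, a wall 4 m away elsewhere,
# and a dragon standing 2 m away across the whole row.
frame = composite(
    camera=[["wall", "sofa", "wall"]],
    virtual_rgb=[["dragon", "dragon", "dragon"]],
    virtual_depth=[[2.0, 2.0, 2.0]],
    real_depth=[[4.0, 1.5, 4.0]],
)
```

The middle pixel stays `"sofa"` because the real sofa is closer than the dragon, which is exactly the “disappearing behind the couch” effect.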

This trend is empowering a new generation of creative entrepreneurs building with AI who are launching products that were once deemed impossible without a technical co-founder. If you have a vision for a spatial app, you can start building your immersive project for free right now using simple conversational prompts.

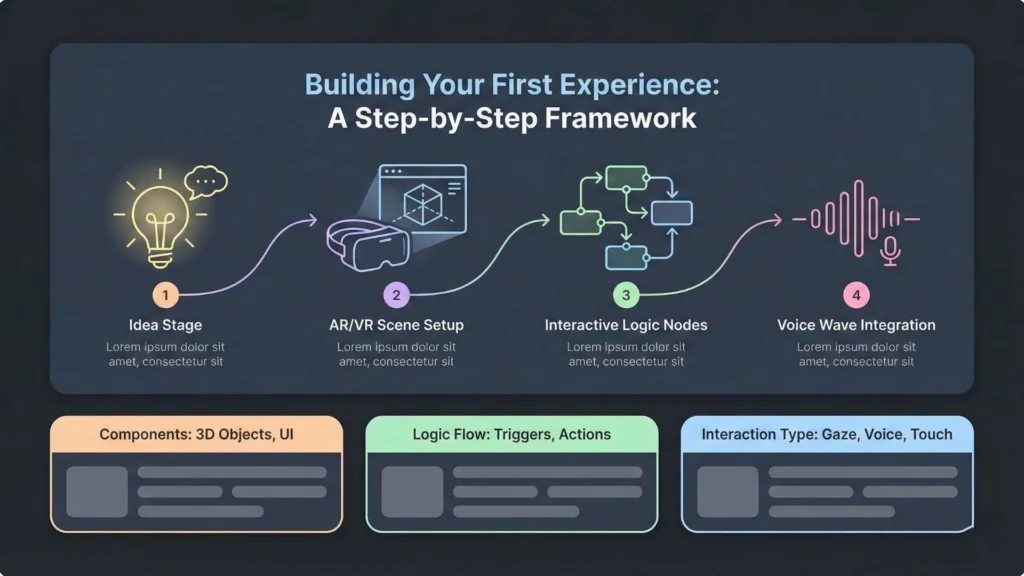

Building Your First Experience: A Step-by-Step Framework

You don’t need to be a developer to launch an AR/VR product, but you do need to be a system designer. Here is how you should approach your build:

Step 1: Define the “Value of Presence”

Presence is the psychological feeling of actually being somewhere. Ask yourself: Why does this need to be in AR or VR?

- Augmented Reality (AR): Best for utility and enhancing the physical world. Think of an app that overlays historical data on a modern city street or a retail app that lets you preview furniture in your home.

- Virtual Reality (VR): Best for total focus and emotional immersion. This is ideal for high-stakes training (like surgery), gaming, or virtual tourism where you want the user to forget their physical surroundings.

Step 2: AI-Driven Asset Sourcing

Instead of hiring a 3D modeler, use the best AI image generators to visualize your concepts and create textures for your environments. You can prompt an AI to create a “360-degree panoramic skybox of a Martian colony,” which instantly provides the background for your VR scene.

Step 3: Layer in the “Brain”

Use no-code logic builders (visual scripting) to define what happens when a user touches an object. These tools use “nodes” and “wires” to create logic flows.

- User grabs the key → Key disappears → Inventory +1 → Door unlocks.
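The node chain above is, in effect, an event graph: each node listens for an event, changes some state, and may fire the next event. A hedged Python illustration of what a visual scripting tool might assemble behind the scenes; the class, event, and state names are hypothetical and not any specific product’s API:

```python
from collections import defaultdict


class LogicGraph:
    """Tiny event graph: each wire connects an event name to an action node."""

    def __init__(self):
        self.wires = defaultdict(list)  # event name -> list of action functions
        self.state = {"key_visible": True, "inventory": 0, "door_locked": True}

    def connect(self, event, action):
        self.wires[event].append(action)

    def emit(self, event):
        # Run every node wired to this event, in the order they were connected.
        for action in self.wires[event]:
            action(self)


def hide_key(graph):
    graph.state["key_visible"] = False


def add_to_inventory(graph):
    graph.state["inventory"] += 1
    graph.emit("inventory_changed")  # one node's output triggers the next


def unlock_door_if_key_held(graph):
    if graph.state["inventory"] >= 1:
        graph.state["door_locked"] = False


graph = LogicGraph()
graph.connect("key_grabbed", hide_key)
graph.connect("key_grabbed", add_to_inventory)
graph.connect("inventory_changed", unlock_door_if_key_held)
graph.emit("key_grabbed")  # simulate the user grabbing the key
```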

Step 4: Add Natural Language

Integrate a voice-AI layer. Allow your users to ask the virtual environment questions. In a training simulation, a student should be able to ask, “How do I fix this valve?” and receive a real-time, AI-generated verbal response that guides them through the process.

Real-World Success Stories

This isn’t just theoretical. No-code AR/VR is already making money and changing lives. From local businesses to global brands, people are finding that they can launch an app without developers by leveraging these intelligent platforms.

Case Study: The Interactive Museum

A local museum wanted to bring its dinosaur exhibit to life but couldn’t afford a $50k developer fee. Using a no-code AR platform, they created “Scan-to-Life” stickers. When a child scans a fossil with their phone, an AI-generated 3D T-Rex appears on top of the bones, roaring and moving based on the child’s proximity.
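“Moving based on proximity” usually boils down to measuring the distance between the phone’s camera and the virtual model each frame and switching animation clips at chosen thresholds. A minimal sketch, assuming positions in metres from the AR session; the thresholds and clip names are hypothetical:

```python
import math

# Hypothetical distance thresholds in metres; a real app would tune these on site.
ROAR_DISTANCE = 1.0
APPROACH_DISTANCE = 3.0


def trex_animation(phone_position, trex_position):
    """Pick an animation clip from the straight-line distance to the phone."""
    distance = math.dist(phone_position, trex_position)
    if distance < ROAR_DISTANCE:
        return "roar"
    if distance < APPROACH_DISTANCE:
        return "walk_toward_viewer"
    return "idle"
```

Because the check is a single distance comparison, it is cheap enough to run every frame. One common refinement is to leave a small buffer between thresholds so the animation does not flicker when a child hovers at a boundary.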

Case Study: Retail Virtual Try-On

Small eyewear brands are now using AR to compete with giants like Warby Parker. By integrating a “Face-Track” AI module, they allow customers to see exactly how frames sit on their faces. This reduces return rates by providing a “try before you buy” experience in a browser window.

Case Study: Safe-Scale Healthcare Training

Hospitals are using VR to train staff on emergency protocols. Instead of expensive physical drills, nurses wear headsets to enter a “Digital Twin” of their actual hospital ward. The AI simulates a chaotic environment with varying patient needs, providing a safe space to fail and learn.

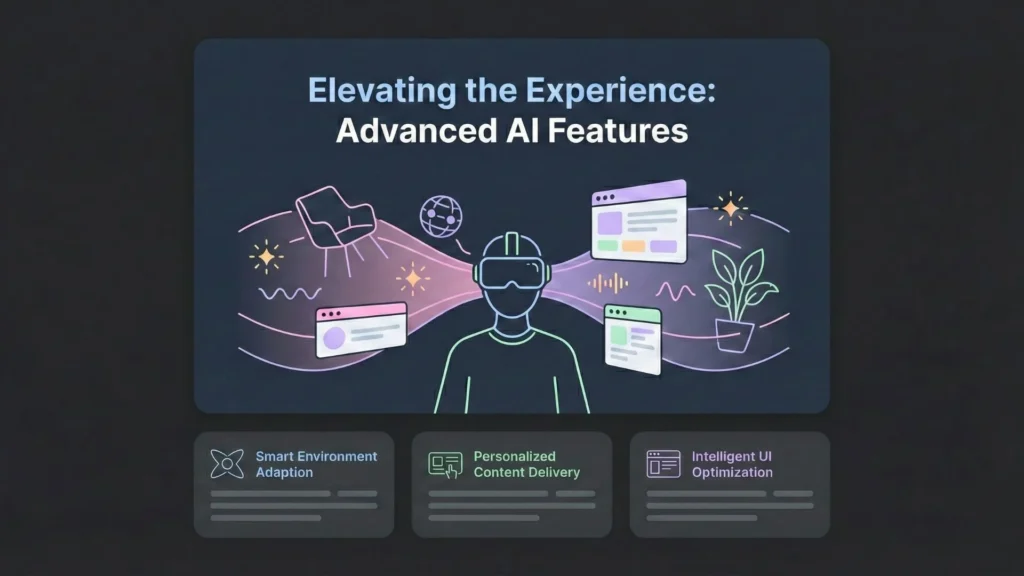

Elevating the Experience: Advanced AI Features

To make your world feel truly “next-gen,” you should look at specific AI integrations that push the boundaries of user interaction.

Real-Time Object Recognition

Object recognition allows AR experiences to “see.” Imagine an AR app for electricians. The AI identifies a specific circuit breaker model through the camera lens and overlays the exact safety instructions onto the physical equipment. This is the “Iron Man” HUD (Heads-Up Display) brought to life. Such complexity can now be managed by following an AI mobile app development guide that focuses on spatial features.
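Once a recognition model returns a label and a confidence score, the overlay step is largely a lookup with a safety threshold. A sketch under stated assumptions: the detection tuple format, the `SAFETY_NOTES` table, and the 0.8 cut-off are all hypothetical, and the instruction text is a placeholder:

```python
# Hypothetical lookup from recognised equipment label to overlay text.
# The text is a placeholder; real content would come from the manufacturer.
SAFETY_NOTES = {
    "breaker_model_a": "Placeholder: safety steps for breaker model A.",
}
MIN_CONFIDENCE = 0.8  # below this, show nothing rather than risk the wrong instructions


def overlays_for(detections):
    """detections: list of (label, confidence, bounding_box) from a recognition model."""
    overlays = []
    for label, confidence, box in detections:
        note = SAFETY_NOTES.get(label)
        if note is not None and confidence >= MIN_CONFIDENCE:
            overlays.append({"box": box, "text": note})
    return overlays
```

Refusing to overlay anything on a low-confidence match is a deliberate choice here: for safety instructions, showing nothing is better than showing the wrong thing.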

Adaptive Environments and Personalization

Machine learning allows the world to learn from the user. If a user in a VR meditation app has a high heart rate (detected via smartwatch integration), the AI can automatically dim the virtual lights, slow the background music, and change the visual setting from a bright beach to a calm forest.
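The meditation example can be expressed as a small rule over recent sensor readings. This sketch assumes heart-rate samples arrive in beats per minute from a wearable and that a per-user resting baseline is known; the preset values and the 20 bpm margin are illustrative assumptions. Averaging several readings keeps one noisy sample from flipping the scene:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneSettings:
    light_level: float  # 0.0 (dark) to 1.0 (bright)
    music_tempo: int    # beats per minute of the background track
    environment: str


DEFAULT = SceneSettings(light_level=1.0, music_tempo=90, environment="beach")
CALM = SceneSettings(light_level=0.4, music_tempo=60, environment="forest")


def adapt_scene(recent_bpm, baseline_bpm, margin=20):
    """Return CALM when the average of recent readings exceeds baseline + margin."""
    if not recent_bpm:
        return DEFAULT
    average = sum(recent_bpm) / len(recent_bpm)
    return CALM if average > baseline_bpm + margin else DEFAULT
```

A learned model could replace the fixed margin, but the output would still be a scene preset the renderer can apply.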

The Death of the Traditional Menu

Natural Language Processing (NLP) is replacing buttons. Instead of fumbling with controllers to find a “Settings” menu, users simply talk to the world. “Make it sunset,” or “Show me the internal engine components.” This makes technology invisible and the experience more human.
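Production systems use speech recognition plus a language model to map free-form speech to actions, but the output is typically the same kind of structured command. As a deliberately simplified stand-in, this sketch swaps the language model for regular-expression matching; the patterns and command names are hypothetical:

```python
import re

# Hypothetical intent table: pattern -> (scene action, argument).
INTENTS = [
    (re.compile(r"\bsunset\b", re.IGNORECASE), ("set_time_of_day", "sunset")),
    (re.compile(r"\bsunrise\b", re.IGNORECASE), ("set_time_of_day", "sunrise")),
    (re.compile(r"\bshow\b.*\bengine\b", re.IGNORECASE), ("toggle_layer", "engine_internals")),
]


def parse_command(utterance: str):
    """Return the first matching (action, argument) pair, or None if nothing matched."""
    for pattern, command in INTENTS:
        if pattern.search(utterance):
            return command
    return None
```

Swapping the regex table for a model call would change how intents are recognized, not what the rest of the app receives: a small, predictable command tuple.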

The Future: Sensory Integration and Haptics

Where are we going? The next frontier is Sensory AI. We are moving beyond just sight and sound to engage the full human sensory suite.

- Haptics and Physical Feedback: AI-driven haptic vests and gloves use high-frequency vibrations to simulate the “feel” of different textures, the kickback of a tool, or even the sensation of rain on your skin.

- Smell and Taste Simulation: While still in its infancy, “digital scent” technology uses AI to mix base chemicals to create specific odors, adding a profound new layer to virtual cooking or travel experiences.

- Ethical and Responsible Design: As immersion deepens, the industry is shifting focus toward protecting users. This means building guardrails around data privacy (tracking gaze patterns) and ensuring that virtual environments are inclusive and free from algorithmic bias.

For those looking to stay ahead of the curve, it is essential to understand the future of app development as it moves toward deep sensory integration and total digital immersion.

Bridging the Gap with Imagine.bo

While there are many tools that help you build a “cool demo,” the real challenge for founders is turning that demo into a business. This is where Imagine.bo enters the conversation, offering non-technical founders a path to building products that can succeed in a competitive market.

From Idea to Production-Ready

Many no-code tools are “sandboxes”: they are fun for prototyping but difficult to scale. Imagine.bo is built for the entrepreneur who needs a production-grade solution.

- Plain English Interface: You don’t need to know Python or C#. You describe your business logic in plain English, and the platform translates that into functional code.

- Unified Stack: It handles the complex interplay between the frontend (what the user sees), the backend (the logic), and the database (where info is stored).

- Scalability: Imagine.bo uses real engineering standards. Your application is designed to perform reliably whether you have 10 users or 10,000.

Validating Your AR/VR Vision

Because development time can be reduced by up to 90%, you can use Imagine.bo to test your market assumptions. Instead of spending six months building, you can launch a “Minimum Viable Product” (MVP) in days. Many are using this to turn ideas into apps and get real user feedback before investing heavily. If you’re ready to move from a concept to a live product, you can launch your production-ready application today.

Overcoming the “Uncanny Valley” in AI Environments

One of the biggest risks in building immersive worlds is the “Uncanny Valley”: the point where digital humans or environments look almost real, but just “off” enough to be creepy. AI is helping creators skip over this valley.

- Neural Rendering: Instead of traditional light calculations, AI uses neural networks to predict how light should bounce off a surface, creating photorealistic shadows and reflections instantly.

- Procedural Soundscapes: AI can generate “spatial audio” that changes based on the materials in your virtual room. Sound will echo off a virtual concrete wall but be absorbed by virtual carpet, just as it would in real life.
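The material-dependent echo can be approximated with absorption coefficients: each bounce off a surface keeps a fraction (1 - α) of the sound energy, where α is that material’s absorption coefficient. A simplified sketch that ignores frequency dependence and room geometry; the coefficient values are illustrative assumptions, not measured data:

```python
import math

# Illustrative absorption coefficients (fraction of energy absorbed per bounce).
# Assumed values for this example; real tables vary by frequency and product.
ABSORPTION = {"concrete": 0.02, "carpet": 0.30}


def reflected_level_db(material: str, bounces: int) -> float:
    """Energy left after `bounces` reflections, in dB relative to the direct sound."""
    remaining = (1.0 - ABSORPTION[material]) ** bounces
    return 10.0 * math.log10(remaining)
```

With these values, three bounces off concrete keep about 94% of the energy (under 0.3 dB of loss), while three bounces off carpet keep about 34% (roughly 4.6 dB quieter), which is why the carpeted room sounds “dead.”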

By automating these high-fidelity details, no-code builders can achieve a level of polish that was previously reserved for multi-million-dollar studios.

The Business Case for Immersive No-Code

Why should a founder care about this now? Because the ROI (Return on Investment) has finally turned positive for small teams.

- Reduced Training Costs: Companies using VR training report a 40% reduction in training time compared to classroom learning.

- Higher Conversion in Retail: AR “View in Room” features increase the likelihood of purchase by up to 11 times.

- Intellectual Property Creation: By building your own niche AR/VR tool, you are creating a valuable asset that can be sold, licensed, or used to dominate a local market.

The era of waiting for “the right time” to enter spatial computing is over. The tools are here, the AI is ready, and the code is optional.

Final Thoughts

The wall between “creatives” and “developers” is falling. We are moving into a world where your ability to imagine is the only real constraint. AI has taken over the heavy lifting of geometry and code, and no-code platforms have provided the canvas.

As the industry evolves, those who embrace these tools will be the ones defining the next generation of the internet. If you are ready to take the leap, you can build an AI app in 2025 with more ease than ever before.
