The modern Security Operations Center (SOC) is trapped in a paradox. We have more sophisticated tools than ever before (EDR, NDR, SIEM, SOAR), yet security teams have never felt more overwhelmed. The “glitch in the matrix” isn’t a lack of data; it’s the sheer volume of it.

If you walk into a traditional SOC today, you won’t see analysts hunting sophisticated threats like they do in the movies. Instead, you’ll see brilliant human beings clicking through endless browser tabs, copy-pasting IP addresses into reputation checkers, and manually updating tickets. This is the “Manual Tax” of cybersecurity. It’s expensive, it’s exhausting, and it’s the reason why major breaches still happen despite million-dollar security stacks.

In this guide, we’re going to explore how the intersection of Generative AI and No-Code Automation is finally breaking this cycle and how modern platforms are making “Production-Grade AI” accessible to the people on the front lines.

The Invisible Crisis in Modern Security Operations

The Myth of the “Alert”

We often talk about “alert fatigue” as if it’s just a minor annoyance. It isn’t. It’s a systemic failure. When a dashboard glows red with 5,000 warnings, and 4,995 of them are noise, the human brain naturally begins to tune out. Analysts stop looking for threats and start looking for reasons to close the ticket. This repetitive cycle is exactly why many organizations are beginning to automate business tasks with AI tools that handle the low-level data entry clogging up the workflow. When the noise floor is high, the “signal” of a real breach becomes almost impossible to detect until it’s too late. The cost of this noise is measured not just in time, but in the increased probability of a catastrophic oversight.

Why Skill Isn’t the Problem

There is a common misconception that cybersecurity failures happen because of a lack of expertise. The reality? Most SOC analysts are overqualified for the work they do daily. They are experts in deep packet analysis and forensics, yet they spend hours manually checking if an employee is on vacation before resetting a “suspicious” password.

When we force smart people to do manual work that should be automated, we don’t just lose time; we lose the people. Burnout is at an all-time high because the work has become a digital assembly line rather than a strategic hunt. We are currently using human brains as “connective tissue” between disconnected software APIs, which is a massive waste of human capital.

Why Traditional Automation Failed Us

For the last decade, we’ve been promised that Security Orchestration, Automation, and Response (SOAR) would save us. While it was a step in the right direction, it hit two massive walls that prevented true scalability.

1. The Engineering Bottleneck

Traditional automation tools are often “low-code” in name only. To build a truly effective playbook that connects your firewall to your identity provider, you usually need a dedicated developer who knows Python or complex API structures.

Small to mid-sized teams simply don’t have these resources. If it takes three weeks to write a script for a threat that is happening now, the automation is useless. This is why many founders are now looking for a no-code AI app builder that lets them build logic without a background in software engineering. The inability to iterate in real time makes traditional automation reactive rather than proactive.

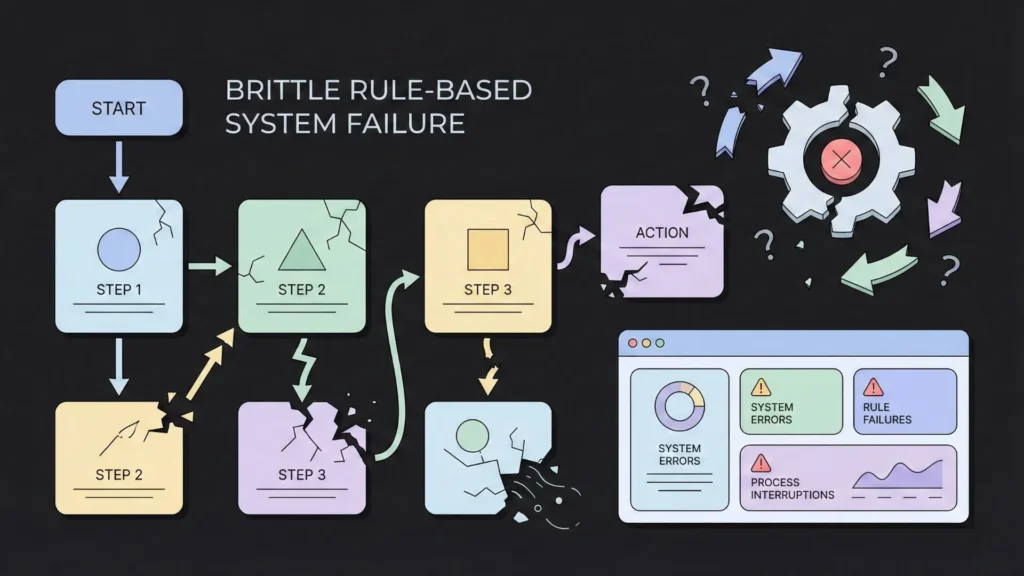

2. The “If-This-Then-That” Trap

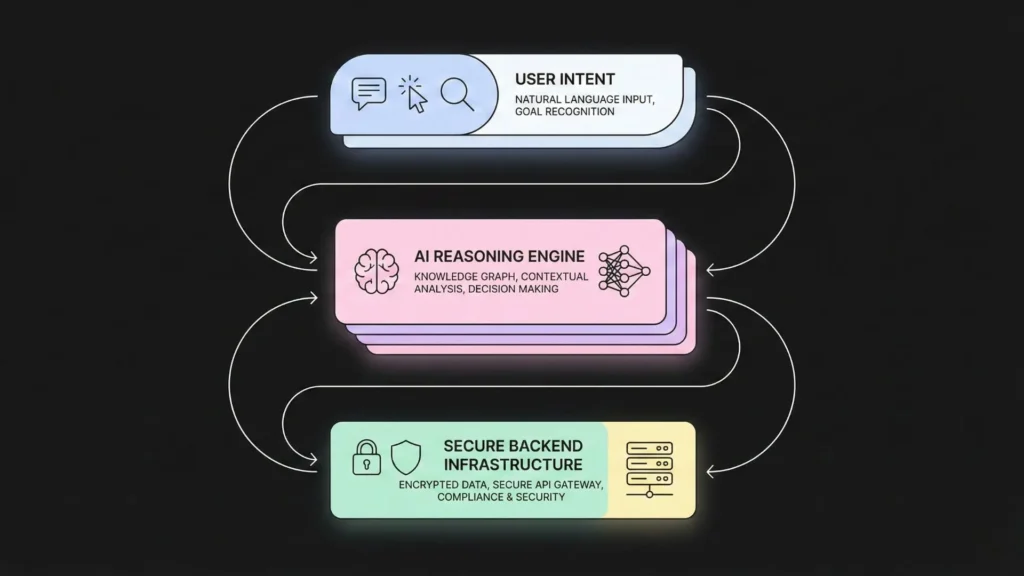

Standard automation is rigid. It follows a flowchart: If [Condition A] happens, then do [Action B]. But cyber threats are fluid. An attacker doesn’t follow a flowchart; they pivot. A rigid rule-based system breaks the moment a variable changes. These systems don’t understand context. They don’t know that a login from a new IP is “Safe” for a traveling CEO but “Critical” for a server admin who never leaves their home office. Without a reasoning engine, automation is just a faster way to make the same mistakes.
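The difference between a rigid rule and a context-aware check can be sketched in a few lines of Python. This is only an illustration: the field names (`role`, `new_ip`, `is_traveling`) and the severity labels are hypothetical, and a real reasoning engine would weigh far more signals than these.

```python
from dataclasses import dataclass


@dataclass
class LoginEvent:
    user: str
    role: str           # hypothetical role label, e.g. "ceo" or "server_admin"
    new_ip: bool        # True if this source IP has not been seen for the user
    is_traveling: bool  # hypothetical flag from a travel or HR calendar


def rigid_rule(event: LoginEvent) -> str:
    """If-this-then-that: every login from a new IP gets the same verdict."""
    return "critical" if event.new_ip else "safe"


def context_aware(event: LoginEvent) -> str:
    """Same trigger, but the verdict depends on who the user is."""
    if not event.new_ip:
        return "safe"
    if event.is_traveling:
        return "safe"      # a new IP is expected while on the road
    if event.role == "server_admin":
        return "critical"  # an admin who never travels should not show up on a new IP
    return "review"        # everything else goes to a human


ceo = LoginEvent("alice", "ceo", new_ip=True, is_traveling=True)
admin = LoginEvent("bob", "server_admin", new_ip=True, is_traveling=False)

print(rigid_rule(ceo), context_aware(ceo))      # critical safe
print(rigid_rule(admin), context_aware(admin))  # critical critical
```

The rigid rule raises the same alarm for both users; only the second function separates the traveling CEO from the admin who never leaves the home office.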

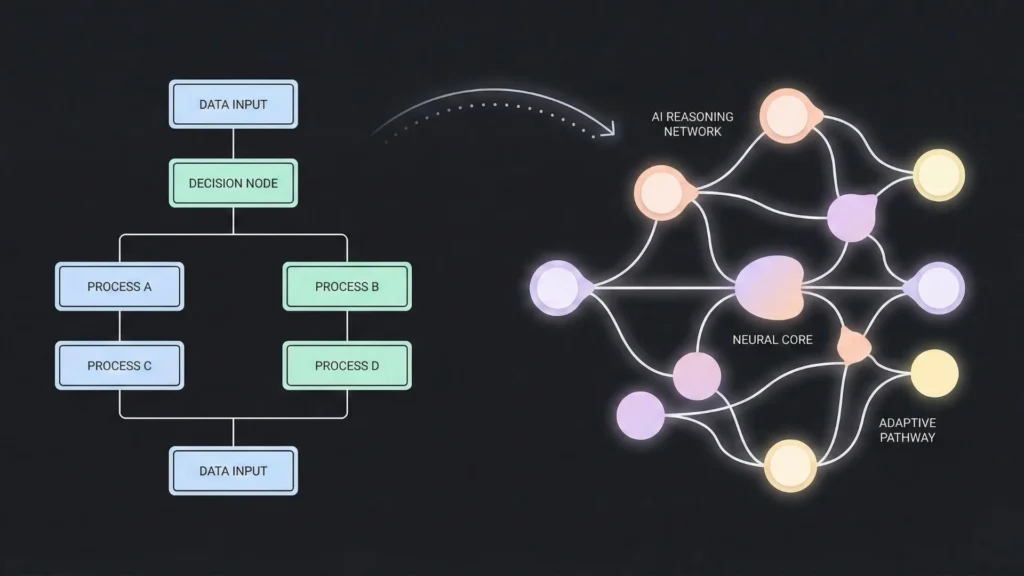

The AI Evolution: From Rules to Reasoning

This is where the conversation changes. We are moving away from “Hard-Coded Logic” and toward “AI Reasoning.” AI-powered security is not just about faster automation; it is about smarter decisions.

Understanding Intent and Context

Unlike a script, an AI-powered system can ingest “unstructured” data. It can read a threat intelligence report, look at your internal logs, and reason through the risk. For instance, an AI doesn’t just see a “Failed Login.” It sees that the login was attempted on a legacy system, with a username that is no longer in the HR directory, from an IP address that matches a known Tor exit node.
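To make the failed-login example concrete, here is a minimal sketch of the context-gathering step that would feed such a system. The three lookup sets are hard-coded placeholders (assumptions for illustration); in practice they would come from an asset inventory, an HR system, and a threat intelligence feed, and the reasoning over the findings would be done by the AI rather than by this function.

```python
from typing import List

# Hypothetical reference data, hard-coded so the sketch is self-contained.
LEGACY_HOSTS = {"fin-legacy-01"}
HR_DIRECTORY = {"alice", "bob"}    # usernames of current employees
TOR_EXIT_NODES = {"203.0.113.7"}   # placeholder IP from a documentation range


def describe_failed_login(host: str, username: str, source_ip: str) -> List[str]:
    """Collect the context that turns a bare 'Failed Login' into a story."""
    findings = []
    if host in LEGACY_HOSTS:
        findings.append("target is a legacy system")
    if username not in HR_DIRECTORY:
        findings.append("username is not in the HR directory")
    if source_ip in TOR_EXIT_NODES:
        findings.append("source IP matches a known Tor exit node")
    return findings


findings = describe_failed_login("fin-legacy-01", "mallory", "203.0.113.7")
print(f"{len(findings)} of 3 risk signals present:")
for finding in findings:
    print(" -", finding)
```

Any one of these signals is easy to dismiss on its own; it is the combination that makes the event worth an analyst's attention.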

The ability to build these complex logic flows is a hallmark of the no-code AI development movement, which seeks to put the power of software creation into the hands of subject matter experts rather than just programmers. By allowing the AI to “think” through the steps of an investigation, we move from linear scripts to adaptive defense.

Reducing the Noise Floor

The goal of AI in cybersecurity isn’t to “handle more alerts.” If you just automate the processing of junk alerts, you’re just making the junk move faster. The goal is to eliminate the noise. AI acts as a high-fidelity filter. It suppresses the 90% of alerts that are statistically irrelevant, allowing the human analyst to focus on the three things that actually matter each day. This selective attention is what separates a world-class security posture from an average one.
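As a rough sketch of the filtering idea, assume each alert already carries a `risk_score` between 0 and 1 produced by some upstream model. Both the field name and the 0.8 threshold are assumptions made for this example, not values from any particular product.

```python
from typing import Dict, List


def filter_alerts(alerts: List[Dict], threshold: float = 0.8) -> List[Dict]:
    """Keep only alerts at or above the threshold, highest risk first."""
    kept = [a for a in alerts if a["risk_score"] >= threshold]
    return sorted(kept, key=lambda a: a["risk_score"], reverse=True)


alerts = [
    {"id": "A-1", "risk_score": 0.05},
    {"id": "A-2", "risk_score": 0.93},
    {"id": "A-3", "risk_score": 0.40},
    {"id": "A-4", "risk_score": 0.81},
    {"id": "A-5", "risk_score": 0.10},
]

queue = filter_alerts(alerts)
suppressed = len(alerts) - len(queue)
print([a["id"] for a in queue], f"({suppressed} suppressed)")
```

The point is that the suppressed alerts never reach the analyst's queue at all, rather than being processed faster.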

No-Code: The Democratization of Defense

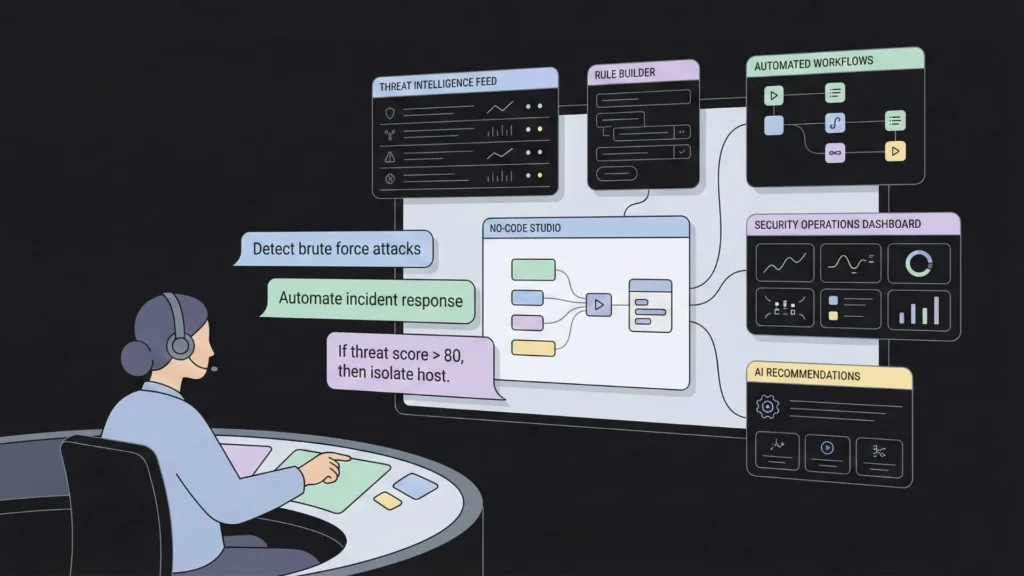

If AI is the “brain,” then No-Code is the “nervous system.” No-code development allows the people who actually understand the threats (the analysts) to build the tools they need without asking for permission from the IT or Dev departments.

The Speed of Defense

In cybersecurity, speed is the only metric that truly matters. MTTD (Mean Time to Detect) and MTTR (Mean Time to Respond) define the success of a security program. When a new zero-day vulnerability is announced, a security team using a no-code platform can build a remediation workflow in hours.

This speed is why many teams are interested in learning how to build an AI-powered tool in a short period. In the gap between a threat emerging and a patch being deployed, a custom-built automation can be the difference between a “close call” and a catastrophic breach. No-code allows defense to move at the speed of the threat.
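MTTD and MTTR, the two metrics mentioned above, are simple averages once incident timestamps are recorded. The sketch below assumes one common definition (detection time measured from occurrence, response time measured from detection); organizations define these differently, and the incident data here is invented for illustration.

```python
from datetime import datetime
from statistics import mean

# Invented example incidents; real data would come from a ticketing system.
incidents = [
    {"occurred": datetime(2025, 1, 6, 9, 0),
     "detected": datetime(2025, 1, 6, 9, 30),
     "resolved": datetime(2025, 1, 6, 11, 30)},
    {"occurred": datetime(2025, 1, 7, 14, 0),
     "detected": datetime(2025, 1, 7, 15, 30),
     "resolved": datetime(2025, 1, 7, 16, 30)},
]


def minutes_between(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 60


def mttd(records) -> float:
    """Mean Time to Detect: average of (detected - occurred), in minutes."""
    return mean(minutes_between(r["occurred"], r["detected"]) for r in records)


def mttr(records) -> float:
    """Mean Time to Respond: average of (resolved - detected), in minutes."""
    return mean(minutes_between(r["detected"], r["resolved"]) for r in records)


print(f"MTTD: {mttd(incidents):.0f} min, MTTR: {mttr(incidents):.0f} min")
# MTTD: 60 min, MTTR: 90 min
```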

Imagine.bo: Where Sophistication Meets Simplicity

While many no-code tools are built for simple tasks like moving rows in a spreadsheet, Imagine.bo is a platform designed for Enterprise-Grade AI Reasoning. It functions as an AI-driven no-code app and workflow builder that can be applied to complex domains, including cybersecurity operations.

How it Works: “Describe, Don’t Program”

The magic of Imagine.bo lies in its ability to translate human intent into machine-grade logic. You don’t “drag and drop” 500 boxes. You describe the workflow in plain English. For example, you might describe a system that monitors logs for permission changes and reverts them if they don’t match an approved ticket.
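To show what the permission-change description implies in terms of logic, here is a hand-written Python sketch. It is not output from Imagine.bo; the `PermissionChange` type, the ticket IDs, and the `revert` callback are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Set


@dataclass
class PermissionChange:
    user: str
    resource: str
    new_role: str
    ticket_id: Optional[str]  # ticket cited by whoever made the change, if any


def enforce_change_policy(
    change: PermissionChange,
    approved_tickets: Set[str],
    revert: Callable[[PermissionChange], None],
) -> str:
    """Allow a change backed by an approved ticket; revert anything else."""
    if change.ticket_id is not None and change.ticket_id in approved_tickets:
        return "allowed"
    revert(change)  # in a real system this would call the identity provider
    return "reverted"


approved = {"CHG-1001"}
reverted = []  # stands in for the real revert action

ok = PermissionChange("alice", "billing-db", "admin", ticket_id="CHG-1001")
bad = PermissionChange("bob", "billing-db", "admin", ticket_id=None)

print(enforce_change_policy(ok, approved, reverted.append))   # allowed
print(enforce_change_policy(bad, approved, reverted.append))  # reverted
```

Passing the revert action in as a callback keeps the policy check separate from whichever system actually holds the permissions.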

Because the platform is so versatile, many users find it helpful to follow an AI app development guide to understand how to structure these prompts for maximum reliability and security. If you are ready to put these concepts to work, you can start building your custom security workflow immediately by simply describing your requirements.

Why This Approach Works for Security:

- Professional Standards: Imagine.bo generates logic that follows real engineering standards. In a security environment, where a “crash” could mean a blind spot, reliability is non-negotiable.

- Agile Adaptation: Threats evolve daily. You can tweak your “app” as easily as editing a sentence, ensuring you aren’t locked into a vendor’s rigid roadmap.

- Compliance-Ready: Security isn’t just about stopping hackers; it’s about proving you stopped them. Imagine.bo allows for the creation of audit-ready logs that keep compliance officers happy.
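As an illustration of what “audit-ready” can mean in practice, one common technique is a hash-chained log, where each entry includes the hash of the previous one so that later edits are detectable. The sketch below is a generic example of that technique; the source does not say how Imagine.bo implements its logs, and the field names are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log: list, timestamp: str, actor: str, action: str) -> dict:
    """Append an entry whose hash covers its content and the previous hash."""
    body = {
        "timestamp": timestamp,
        "actor": actor,
        "action": action,
        "prev_hash": log[-1]["hash"] if log else GENESIS,
    }
    entry = {**body, "hash": _digest(body)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute every hash and check that the chain is unbroken."""
    prev_hash = GENESIS
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "2025-01-06T09:30:00Z", "workflow", "isolated host web-01")
append_entry(audit_log, "2025-01-06T09:31:00Z", "workflow", "opened ticket")
print(verify(audit_log))  # True

audit_log[0]["action"] = "nothing happened"  # simulate tampering
print(verify(audit_log))  # False
```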

Practical Use Cases for Security Teams

To understand the power of this approach, let’s look at three specific ways you can deploy AI-driven no-code today to protect your digital assets.

1. Automated Alert Triage & Enrichment

An analyst often spends 15 minutes per alert just gathering data: checking IP locations, reputation scores, and user history. You can build an app that “pre-investigates” every alert by hitting external APIs and checking internal directories automatically.

By the time an analyst opens a ticket, they see a full “Investigation Summary” already written by the AI. This is a great example of how AI is redefining app development by shifting the focus from manual data collection to high-level strategic analysis. It transforms the analyst from a data-gatherer into a high-level decision-maker.
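The “pre-investigation” step can be sketched as a set of pluggable lookups whose results are stitched into one summary. The lookup functions below are stubs that return canned values (assumptions for this example); a real workflow would call geolocation, reputation, and directory services, and an AI model would write the narrative.

```python
from typing import Callable, Dict

Enricher = Callable[[dict], str]


def pre_investigate(alert: dict, enrichers: Dict[str, Enricher]) -> str:
    """Run every lookup and assemble a plain-text investigation summary."""
    lines = [f"Investigation Summary for alert {alert['id']}"]
    for name, enrich in enrichers.items():
        try:
            lines.append(f"- {name}: {enrich(alert)}")
        except Exception as exc:  # one failed lookup should not block the rest
            lines.append(f"- {name}: lookup failed ({exc})")
    return "\n".join(lines)


# Stub lookups standing in for external APIs and internal directories.
enrichers = {
    "IP location": lambda alert: "NL (Amsterdam)",
    "Reputation score": lambda alert: "12/100 (poor)",
    "User history": lambda alert: f"{alert['user']}: no prior logins from this IP",
}

alert = {"id": "A-2", "user": "alice", "source_ip": "203.0.113.7"}
summary = pre_investigate(alert, enrichers)
print(summary)
```

Catching lookup errors per enricher means the analyst still gets a partial summary when one external service is down.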

2. Incident Response “War Room” Automation

During a breach, communication often breaks down. People are scattered across Slack, email, and Zoom. A no-code workflow can trigger the moment a “Critical” incident is flagged, automatically creating a dedicated Slack channel, inviting the on-call team, and pulling technical data into a central dashboard.

This gets a new response protocol up and running faster and keeps the team focused 100% on the fire and 0% on the administrative logistics of the response. Poor coordination is the silent killer during a crisis; automation fixes it.
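The war-room trigger described above might look something like the following. To avoid guessing at any vendor's API, the sketch talks to a hypothetical `ChatClient` interface and runs against an in-memory fake; a real Slack or Teams client would need to be adapted to it.

```python
from typing import List, Optional, Protocol


class ChatClient(Protocol):
    """Hypothetical interface, not a real vendor SDK."""
    def create_channel(self, name: str) -> str: ...
    def invite(self, channel: str, users: List[str]) -> None: ...
    def post(self, channel: str, text: str) -> None: ...


class FakeChat:
    """In-memory stand-in so the sketch runs without network access."""
    def __init__(self) -> None:
        self.channels = {}

    def create_channel(self, name: str) -> str:
        self.channels[name] = {"members": [], "messages": []}
        return name

    def invite(self, channel: str, users: List[str]) -> None:
        self.channels[channel]["members"].extend(users)

    def post(self, channel: str, text: str) -> None:
        self.channels[channel]["messages"].append(text)


def open_war_room(incident: dict, chat: ChatClient, on_call: List[str]) -> Optional[str]:
    """Create a channel, invite the on-call team, and post the key facts."""
    if incident["severity"] != "critical":
        return None  # only critical incidents get a war room
    channel = chat.create_channel(f"inc-{incident['id']}")
    chat.invite(channel, on_call)
    chat.post(channel, f"{incident['title']} | affected host: {incident['host']}")
    return channel


chat = FakeChat()
incident = {"id": 1042, "severity": "critical",
            "title": "Ransomware detected", "host": "fs-01"}
room = open_war_room(incident, chat, on_call=["alice", "bob"])
print(room, chat.channels[room])
```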

3. Internal Security Dashboards

Design custom dashboards that unify logs, alerts, and performance metrics without relying on multiple disconnected tools. Most security tools have terrible UI/UX, making it hard to find what you need.

By building your own interface, you ensure the most critical data is always front and center. This approach helps teams avoid critical mistakes because the interface is tailor-made for the specific needs of your organization’s infrastructure. Visibility is the foundation of defense.

Deep Dive: The Architecture of AI-Powered No-Code

To truly appreciate the power of platforms like Imagine.bo, we must look at what happens “behind the curtain.” Traditional no-code often creates “shadow IT”: unreliable apps that lack security. AI-powered no-code is the opposite.

Generating Clean Backend Logic

When you describe a workflow to an AI, it isn’t just creating a surface-level visual representation. It is architecting a backend. It generates the database schemas, the API endpoints, and the security protocols necessary to handle sensitive data. This means your security tool isn’t just a prototype; it’s a production-grade asset that can handle thousands of requests per second.

Cloud-Native Deployment

Security automation must be resilient. Systems built via AI no-code are typically deployed on cloud-native architectures (like AWS or Google Cloud). This ensures that even if your local network is under a DDoS attack, your security automation tools remain online and functional. This high availability is critical for businesses that cannot afford a single second of downtime.

Data Sovereignty and Privacy

In cybersecurity, where you store your data is just as important as how you protect it. Modern no-code platforms allow you to define exactly where your data lives. This is vital for meeting GDPR, CCPA, or HIPAA requirements. You can automate your security without ever sending sensitive logs to a third-party black box that you don’t control.

The Future is “System-Centric,” Not “Tool-Centric”

We have spent twenty years buying “tools.” We have a tool for email, a tool for the cloud, and a tool for the endpoint. The problem is that these tools don’t talk to each other. The future of cybersecurity isn’t a new “blinky-light” box in the server room; it is a Unified Logic Layer.

By using AI-powered no-code platforms, we are essentially building a “Custom Security Operating System” tailored to our specific organization. We are moving from a world where we adapt our processes to our tools, to a world where our tools adapt to our processes. This shift is part of the broader no-code revolution that is making complex software architecture accessible to everyone. We are no longer limited by what a vendor thinks we need; we are only limited by our own operational imagination.

Final Thoughts: It’s About Human Empowerment

The most common fear regarding AI is that it will replace the human analyst. The reality is the opposite. AI is here to rescue the analyst. By removing the “digital manual labor,” we allow security professionals to return to what they are best at: Critical Thinking and Strategy.

Platforms like Imagine.bo are the bridge. They take the immense power of Enterprise AI and put the steering wheel in the hands of the security team. You don’t need to be a software engineer to build world-class security systems anymore; you just need to know what a “good” security process looks like.

If you’re ready to start your journey, you can build your AI app in 2025 and join the thousands of professionals who are reclaiming their time and focus through intelligent automation. To begin your first project, you can access the no-code builder here and turn your security ideas into production-ready systems today. The future of security operations is not more alerts; it is better systems.
