Understanding the Hackathon AI Landscape

Why AI Projects are Popular at Hackathons

The surge in popularity of AI projects at hackathons stems from a confluence of factors. Firstly, the accessibility of no-code/low-code AI platforms has dramatically lowered the barrier to entry. Tools like Google Cloud AI Platform (now part of Vertex AI) and Microsoft Azure Machine Learning offer pre-trained models and intuitive interfaces, empowering even novice developers to build sophisticated AI applications within a short timeframe. In our experience, teams leveraging these platforms often outperform those attempting complex coding from scratch.

Secondly, AI projects offer a unique blend of technical challenge and societal impact. Hackathons often emphasize solving real-world problems, and AI is uniquely positioned to address pressing issues in diverse fields like healthcare, environmental sustainability, and accessibility. For example, a recent hackathon saw a team develop an AI-powered tool for early disease detection using readily available medical imaging data—a project demonstrably more impactful than, say, a new social media feature. This inherent potential for positive change attracts participants seeking to make a meaningful contribution. We’ve observed a significant increase in submissions addressing issues related to climate change and resource management, reflecting this trend.

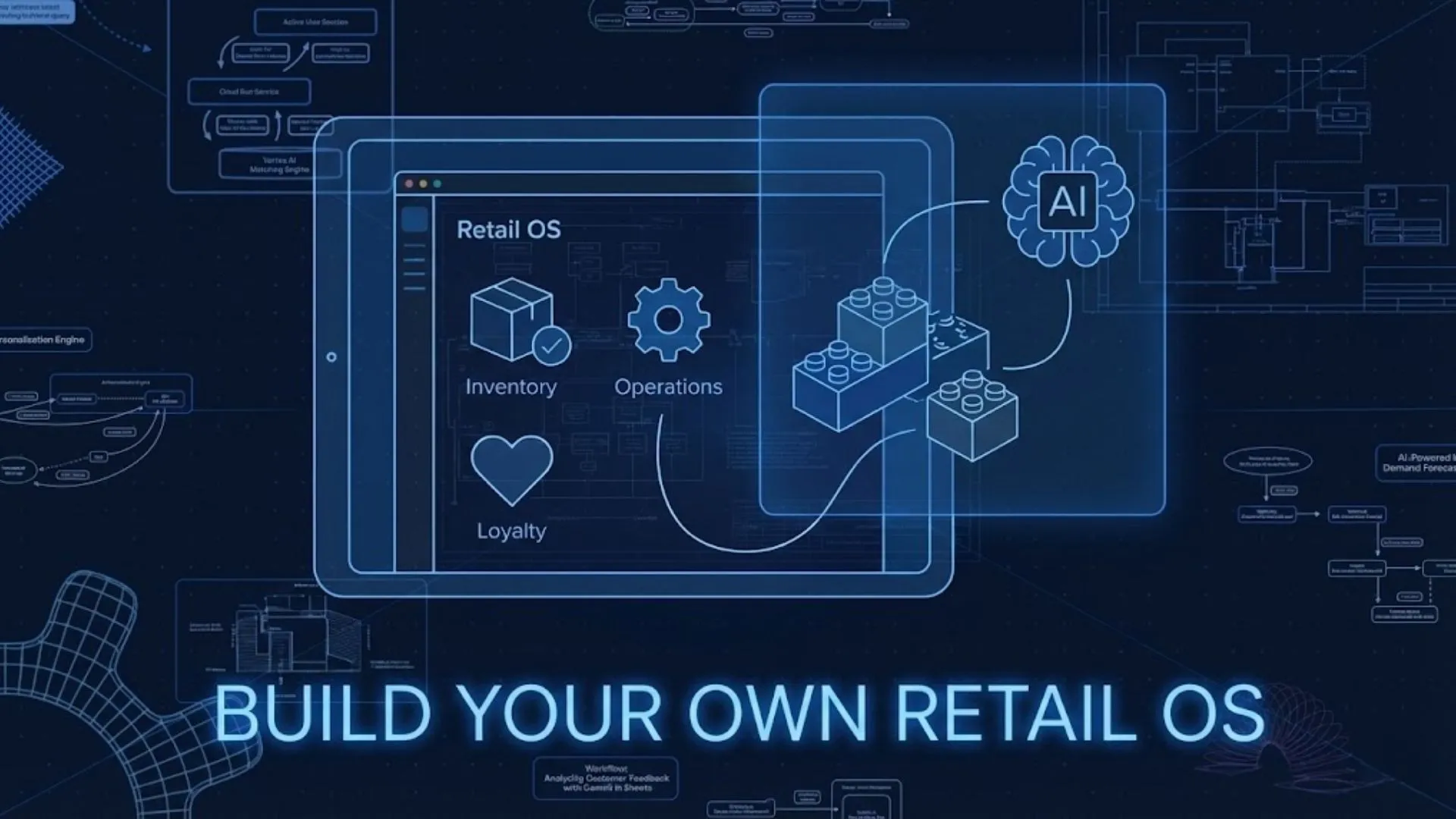

Finally, the judging criteria at many hackathons heavily favor innovative and technically advanced projects. AI projects, by their nature, often showcase advanced techniques and cutting-edge technologies, giving participants a competitive edge. A common mistake we see is underestimating the importance of clearly demonstrating the AI’s functionality and its impact. Strong presentations showcasing tangible results, combined with a clear explanation of the underlying AI model, significantly increase the chances of success. Focusing on a well-defined problem and a clearly articulated solution, even with a relatively simple AI model, is often more effective than attempting an overly ambitious, poorly executed project.

Identifying Suitable AI Problems for No-Code Solutions

Choosing the right AI problem is crucial for a successful no-code hackathon project. In our experience, focusing on well-defined, narrow problems yields far better results than attempting overly ambitious, broad goals. A common mistake we see is selecting a problem requiring complex model training or extensive data preprocessing—tasks often beyond the capabilities of no-code platforms. Instead, prioritize projects amenable to readily available pre-trained models and datasets.

Consider problems solvable with readily accessible no-code AI platforms like Google Teachable Machine, Lobe, or similar tools. For example, a sentiment analysis project classifying movie reviews as positive or negative is achievable with minimal coding. Another viable option could involve building an image classifier to identify different types of flowers using a pre-trained model and a readily available dataset like the Oxford 102 Flowers dataset (though you might need to subset it for fewer classes). These projects leverage the strengths of no-code tools, focusing on application development rather than model building from scratch.

Remember to account for data availability. A project relying on proprietary, hard-to-access data is likely to be impractical within the hackathon timeframe. Prioritize publicly available datasets or those easily gathered during the event itself. For example, a project analyzing social media sentiment around a specific trending topic could leverage readily accessible Twitter data (while considering ethical implications and necessary APIs). Conversely, a project requiring highly specialized medical data is generally unsuitable for a hackathon setting. Choosing wisely at this stage significantly increases your chances of delivering a fully functional, impressive AI project.

Navigating Ethical Considerations in AI Hackathon Projects

AI hackathons offer a thrilling opportunity to innovate, but ethical considerations shouldn’t be an afterthought. In our experience, neglecting these crucial aspects can lead to flawed projects and even reputational damage. A common mistake we see is focusing solely on technical achievement without considering the broader societal impact. For instance, a facial recognition project, while technically impressive, could inadvertently perpetuate biases present in the training data, leading to unfair or discriminatory outcomes.

Addressing these concerns proactively is key. Before diving into code, consider the potential biases embedded in your datasets. Are they representative of the diverse population your AI will interact with? Furthermore, think about data privacy. Will your project collect and use sensitive personal information? If so, ensure you comply with relevant regulations like GDPR or CCPA. This might involve anonymizing data or obtaining explicit user consent. Remember, robust data governance practices are paramount to developing ethical AI solutions.
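As a rough illustration of the two checks above, the following Python sketch pseudonymizes an identifier with a salted hash and reports how each group is represented in a dataset. The field names (`user`, `age_band`), the sample records, and the salt are hypothetical. Salted hashing is only pseudonymization; it does not by itself make a project compliant with GDPR or CCPA.

```python
import hashlib
from collections import Counter


def pseudonymize(identifier: str, salt: str) -> str:
    """Replace an identifier with a truncated salted SHA-256 digest."""
    digest = hashlib.sha256((salt + identifier).encode("utf-8")).hexdigest()
    return digest[:16]


def group_shares(records, key):
    """Return the fraction of records that fall into each value of `key`."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: count / total for group, count in counts.items()}


# Hypothetical sample data, for illustration only.
records = [
    {"user": "alice@example.com", "age_band": "18-29"},
    {"user": "bob@example.com", "age_band": "18-29"},
    {"user": "carol@example.com", "age_band": "18-29"},
    {"user": "dave@example.com", "age_band": "60+"},
]

SALT = "hackathon-demo-salt"  # assumption: keep the real salt out of the repo
safe_records = [
    {"user": pseudonymize(r["user"], SALT), "age_band": r["age_band"]}
    for r in records
]

shares = group_shares(safe_records, "age_band")
print(shares)  # a heavily skewed split is a prompt to collect more data
```

A skewed split does not prove the model will be biased, but it is a cheap early signal that some groups may be under-served.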

Finally, consider the potential for misuse. Could your AI be used for malicious purposes? For example, a powerful text generation model could be exploited to create convincing fake news. Proactive mitigation strategies, such as incorporating safeguards against harmful outputs or restricting access to the model, are essential. Building ethical AI isn’t just a box to check; it’s an ongoing process requiring thoughtful reflection and proactive risk management. By prioritizing ethical considerations from the outset, you not only create a more responsible AI solution but also demonstrate a crucial skillset highly valued by potential employers and collaborators.

Choosing the Right No-Code/Low-Code AI Platform

Top Platforms for Building AI Apps Without Coding

Several no-code/low-code platforms empower developers of all skill levels to build sophisticated AI applications. Selecting the right one depends heavily on your project’s specifics and your team’s existing expertise. In our experience, the best platform isn’t a one-size-fits-all solution; thorough research is crucial.

For instance, Google Cloud AI Platform offers a robust suite of pre-trained models and tools, making it ideal for complex projects requiring scalability. However, its learning curve can be steeper than others. Conversely, Lobe, now part of Microsoft, shines with its user-friendly interface perfectly suited for beginners creating simpler image recognition or classification apps. We’ve found that Lobe’s drag-and-drop functionality significantly accelerates development, especially for smaller hackathon projects. A common mistake we see is overlooking a platform’s integration capabilities – ensure seamless data flow with your chosen database or other services.

Other strong contenders include Amazon SageMaker Canvas, which excels at simplifying data preparation and model building through its visual interface, and Dataiku DSS, a more enterprise-focused platform that balances no-code ease with advanced features, making it suitable for ambitious teams. Ultimately, the optimal choice hinges on factors like the complexity of your AI model (e.g., simple classification versus intricate natural language processing), the size of your dataset, and the level of customization required. Consider experimenting with free tiers or trials before committing to a specific platform.

Comparing Features and Ease of Use: A Platform Overview

Selecting the optimal no-code/low-code AI platform hinges on a careful comparison of features and ease of use. In our experience, focusing solely on flashy demos can be misleading. Instead, prioritize platforms offering robust model deployment capabilities, ideally with options for both cloud and on-premise solutions. Consider the level of customization offered; some platforms provide extensive API access, while others offer a more limited, pre-packaged experience. This choice directly impacts your team’s ability to tailor AI models to your specific hackathon project needs.

Ease of use is paramount, especially within the time constraints of a hackathon. Look for platforms with intuitive drag-and-drop interfaces, clear documentation, and readily available support resources. A common mistake we see is underestimating the importance of comprehensive tutorials and example projects. For instance, platforms like Google’s AutoML offer user-friendly interfaces but may lack the flexibility of more advanced options such as Amazon SageMaker, which come with a steeper learning curve. Consider the complexity of your chosen AI model; simpler projects might thrive on user-friendly platforms, while complex models might necessitate a platform with more advanced features.

Finally, evaluate the platform’s integration capabilities. Does it seamlessly connect with your preferred data sources and visualization tools? A seamless workflow dramatically reduces development time. For example, during a recent hackathon, a team struggled to integrate their chosen platform with their data visualization dashboard, resulting in significant time loss. Choosing a platform with pre-built integrations, or robust API access for custom integrations, will save you valuable time and significantly improve your chances of hackathon success. Always consider a trial period or free tier to explore the platform hands-on before committing to a more comprehensive solution.

Evaluating Platform Suitability for Different Hackathon Challenges

Selecting the optimal no-code/low-code AI platform hinges critically on the specific hackathon challenge. For instance, a computer vision project requiring image classification and object detection will demand platforms with robust pre-trained models and easy integration with image datasets. In our experience, platforms lacking strong model management capabilities or efficient data preprocessing tools often fall short in these scenarios. Consider platforms like Google AutoML Vision or Amazon Rekognition, which offer user-friendly interfaces alongside powerful pre-built models.

Conversely, a natural language processing (NLP) challenge focused on sentiment analysis or chatbot development necessitates a platform adept at handling textual data. Here, the key features to assess include ease of integrating with NLP APIs (like those provided by Google Cloud NLP or Azure Cognitive Services) and the availability of pre-trained NLP models tailored to the specific task. A common mistake we see is overlooking the platform’s capacity for handling large datasets, crucial for training effective NLP models. Platforms that simplify data cleaning and feature engineering, and that offer effective model deployment options, will be essential.

Finally, remember that even the best platform might not fit every challenge perfectly. For example, a highly specialized task requiring fine-tuning a complex deep learning model might necessitate a low-code approach leveraging tools like TensorFlow Extended (TFX), even if it demands slightly more coding experience than a fully no-code solution. Carefully weigh the complexity of the challenge against the platform’s capabilities; prioritizing ease of use and rapid prototyping during a hackathon is paramount, but don’t sacrifice the potential for building a truly impactful solution.

Essential AI Concepts for Non-Coders

Demystifying Machine Learning and its Applications

Machine learning (ML), a subset of artificial intelligence (AI), empowers computers to learn from data without explicit programming. Instead of relying on hard-coded rules, ML algorithms identify patterns, make predictions, and improve their accuracy over time through experience. In our experience, this iterative learning process is key to building effective ML models, even in no-code environments.

A common misconception is that ML requires vast datasets and complex coding. While large datasets certainly enhance model performance, many powerful ML applications leverage smaller, curated datasets. For instance, a sentiment analysis model for customer reviews might achieve impressive accuracy with just a few hundred well-labeled examples. Consider using pre-trained models readily available through no-code platforms; this significantly reduces the need for extensive data collection and preprocessing. Furthermore, platforms offer visual interfaces for model training and evaluation, simplifying the process significantly.

Successfully applying ML often involves choosing the right algorithm for the task. For instance, classification algorithms are ideal for categorizing data (e.g., spam detection), while regression algorithms predict continuous values (e.g., house price prediction). Clustering algorithms group similar data points (e.g., customer segmentation). Understanding these fundamental differences is crucial for selecting the appropriate ML technique within your chosen no-code environment. Experimentation is key; don’t be afraid to try different algorithms and compare their performance on your chosen dataset. Remember, even a simple model can yield valuable insights!

Understanding Pre-trained Models and APIs

Pre-trained models are the backbone of many successful no-code AI projects. Think of them as highly skilled assistants already possessing extensive knowledge in a specific domain, such as image recognition or natural language processing. These models, often trained on massive datasets, have learned complex patterns and can perform sophisticated tasks without requiring you to build the model from scratch. This significantly reduces development time and technical expertise needed. In our experience, leveraging pre-trained models drastically shortens the hackathon development cycle.

APIs, or Application Programming Interfaces, are how you interact with these pre-trained models. They act as bridges, allowing your no-code application to send data to the model and receive predictions or results. For example, a sentiment analysis API might take text input (like a customer review) and return a score indicating whether the sentiment is positive, negative, or neutral. A common mistake we see is underestimating the importance of understanding the API’s limitations. Always carefully review the documentation to understand input format requirements, output interpretations, and any usage restrictions. Several providers offer robust APIs, including Google Cloud AI Platform, Amazon SageMaker, and Microsoft Azure AI. Choosing the right API depends on your project’s specific needs and budget.
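To make the request and response pattern concrete, here is a minimal Python sketch that builds a JSON request body and interprets a reply. The field names (`text`, `score`), the score range of -1 to 1, and the 0.25 neutral band are assumptions for illustration; they do not describe any particular provider’s API, so check the provider’s documentation for the real format.

```python
import json

NEUTRAL_BAND = 0.25  # assumed cut-off; tune to the API you actually use


def build_request(text: str) -> str:
    """Serialize the review text into a JSON request body (hypothetical schema)."""
    if not text.strip():
        raise ValueError("text must not be empty")
    return json.dumps({"text": text})


def interpret_response(body: str) -> str:
    """Map a hypothetical score in [-1, 1] to a sentiment label."""
    score = json.loads(body)["score"]
    if score > NEUTRAL_BAND:
        return "positive"
    if score < -NEUTRAL_BAND:
        return "negative"
    return "neutral"


request_body = build_request("The checkout flow was quick and painless.")
# A canned reply stands in for the network call in this sketch.
label = interpret_response('{"score": 0.8}')
print(request_body, label)
```

Validating the input before sending it reflects the point about input format requirements: most request failures in a demo come from malformed or empty payloads.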

Successfully integrating pre-trained models and APIs requires careful consideration of data preparation. The quality of your input data directly impacts the accuracy of the model’s output. For instance, if you’re using an image classification API, ensuring your images are properly formatted and of sufficient resolution is crucial. Similarly, text data for natural language processing tasks should be clean and free of errors. We recommend dedicating a significant portion of your hackathon time to data cleaning and preprocessing to maximize the effectiveness of these powerful tools. Remember, even with powerful pre-trained models, garbage in, garbage out.

Working with Datasets: Preparation and Preprocessing

Dataset preparation is the unsung hero of successful AI projects. In our experience, neglecting this crucial step often leads to inaccurate models and wasted time. Before even thinking about model training, ensure your data is clean, consistent, and representative of the problem you’re trying to solve. A common pitfall is assuming your initial dataset is ready-to-go; this rarely holds true.

Preprocessing involves several key steps. First, data cleaning addresses inconsistencies like missing values, outliers, and duplicate entries. For example, imagine a dataset predicting house prices. Missing square footage data might be imputed using the average for similar properties, while outliers—say, a mansion priced unexpectedly low—may need investigation or removal. Next, data transformation might involve scaling numerical features (like price or size) to a common range, preventing features with larger values from disproportionately influencing the model. Consider techniques like normalization or standardization. Finally, feature engineering is where you create new features from existing ones to improve model accuracy. In our recent hackathon, transforming a raw date column into features like “day of week” and “month” significantly boosted our model’s performance.
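The steps above can be sketched in plain Python. This is a minimal illustration using only the standard library; the function names and sample values are our own, and a no-code platform would typically perform these steps through its interface instead.

```python
from datetime import date
from statistics import mean, pstdev


def impute_mean(values):
    """Fill missing entries (None) with the mean of the known values."""
    known = [v for v in values if v is not None]
    fill = mean(known)
    return [fill if v is None else v for v in values]


def min_max_scale(values):
    """Normalization: rescale values linearly into the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def standardize(values):
    """Standardization: shift to zero mean and scale to unit variance."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


def date_features(iso_date):
    """Feature engineering: derive day of week (Monday=0) and month."""
    d = date.fromisoformat(iso_date)
    return {"day_of_week": d.weekday(), "month": d.month}


square_feet = impute_mean([1000, None, 2000])
print(square_feet)
print(min_max_scale(square_feet))
print(date_features("2024-03-15"))
```

Mean imputation is the simplest option; for the house price example, averaging over similar properties only (same neighborhood or type) would usually be a better fill value.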

Remember, the quality of your model is only as good as the data you feed it. Different no-code platforms offer varying levels of built-in preprocessing tools. Some provide automated cleaning functions, while others require manual intervention. Familiarize yourself with your chosen platform’s capabilities and prepare to dedicate time to this essential stage. Invest the upfront effort in thorough data preparation; your model will thank you for it.

Building Your AI App: A Step-by-Step Guide

Project Ideation and Planning: Defining Your AI Solution

The success of your hackathon AI project hinges on meticulous planning and a well-defined scope. In our experience, many teams stumble at this initial stage, rushing into development without a clear understanding of their problem and solution. A common mistake we see is focusing solely on a flashy technology without a compelling use case. Instead, start by identifying a real-world problem that can be meaningfully addressed with AI, focusing on areas where no-code/low-code AI platforms excel, such as image classification, sentiment analysis, or chatbot development.

Consider the constraints of a hackathon. Time is your most valuable resource. Choose a project with a manageable scope; aiming for Minimum Viable Product (MVP) functionality is crucial. For example, instead of building a fully-fledged medical diagnosis AI, focus on a narrower problem like classifying skin lesions from images using a pre-trained model and a user-friendly interface. This allows for rapid prototyping and demonstration of core AI functionality within the limited timeframe. Clearly outline your project’s goal, target audience, and key performance indicators (KPIs) to measure success.

Effective planning involves assembling a capable team with complementary skillsets. Ensure you have individuals proficient in data collection, model selection (understanding the strengths of different models is vital), and user interface design. Prioritize the selection of a suitable no-code/low-code AI platform that aligns with your chosen task. Remember, the platform should accelerate development, not hinder it. Thoroughly researching available platforms and their functionalities beforehand will significantly reduce development time and increase your chances of delivering a compelling project within the hackathon’s timeframe. Document your plan thoroughly – a shared document outlining tasks, timelines, and responsibilities will help maintain focus and efficiency throughout the hackathon.

Data Integration and Model Selection

Successfully integrating your data and choosing the right AI model are critical for a winning hackathon project. In our experience, many teams stumble here, wasting precious time on unsuitable approaches. A common mistake we see is neglecting data cleaning and preprocessing. Ensure your data is consistent, complete, and relevant to your chosen problem. Consider using tools like Google Sheets or OpenRefine for initial data cleansing and transformation before moving to more advanced AI platforms.

Model selection depends heavily on your data and the problem you’re solving. For image recognition tasks, pre-trained models on platforms like TensorFlow Hub offer a significant advantage, allowing you to fine-tune a powerful model with limited data and time. For natural language processing (NLP), consider exploring transformer-based models readily available in platforms like Hugging Face, again prioritizing pre-trained options to accelerate development. Remember, simpler models are often sufficient for hackathon projects; avoid over-engineering your solution. Focus on proving your concept, not building a production-ready system.

Finally, consider the trade-off between accuracy and speed. While higher accuracy is always desirable, a less accurate but faster model might be preferable if it allows you to demonstrate a working prototype within the hackathon timeframe. For example, a simpler linear regression model might suffice for a prediction task instead of a complex neural network, especially with limited data. Prioritize a functional, well-presented project over an overly ambitious, incomplete one. Remember to clearly document your data sources, preprocessing steps, and model selection rationale; this will impress the judges and demonstrate your understanding of the process.
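As an example of how small such a baseline can be, the following sketch fits a one-feature linear regression with ordinary least squares in plain Python. The data points are made up for illustration, and `fit_line` and `predict` are our own helper names.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(x, slope, intercept):
    """Apply the fitted line to a new input."""
    return slope * x + intercept


# Hypothetical training data: hours of sunshine vs. units sold.
xs = [1, 2, 3, 4]
ys = [3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
print(slope, intercept, predict(5, slope, intercept))
```

A baseline like this trains instantly and gives you a number to beat, which makes it easier to justify (or drop) a more complex model later in the event.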

Building the User Interface and User Experience

Designing a compelling user interface (UI) and user experience (UX) is critical for any successful AI application, especially within the fast-paced environment of a hackathon. In our experience, neglecting this crucial aspect often leads to projects that, despite impressive AI functionality, fail to resonate with judges or potential users. A poorly designed interface can obscure even the most innovative algorithms. Prioritize intuitive navigation and clear visual communication of your AI’s capabilities.

Consider the specific needs of your target users. Will they be technical experts or the general public? This dictates the level of technical detail needed in your UI. For instance, a complex machine learning model might require a simplified visual representation of its outputs for a non-technical audience. A common mistake we see is attempting to cram too much information onto a single screen, leading to visual clutter and confusion. Aim for a minimalist design, focusing on core functionalities and a clean, uncluttered aesthetic. Consider using readily available no-code UI builders that offer pre-designed components and templates to streamline the process.

Effective UX design involves more than just aesthetics. Think about the user flow: how easily can users understand the purpose of the application, input their data, and interpret the results? A well-structured workflow, incorporating clear prompts and helpful feedback mechanisms, dramatically improves the user experience. For example, if your app involves image upload, provide immediate visual confirmation and progress indicators. Remember, even a technically brilliant AI solution will be deemed unsuccessful if users struggle to understand or interact with it. Prioritizing user feedback during the hackathon, even through informal testing, can dramatically improve your final product.

Mastering Model Integration and Deployment

Integrating Pre-trained Models Seamlessly

Leveraging pre-trained models significantly accelerates hackathon development. Instead of building models from scratch, you can integrate powerful, readily available solutions like those found on platforms such as TensorFlow Hub or Hugging Face. In our experience, this approach drastically reduces development time, allowing you to focus on innovative application design rather than complex model training. A common pitfall is underestimating the adaptation required; simply plugging in a model rarely suffices.

Successful integration requires careful consideration of several factors. First, ensure the pre-trained model’s architecture and data align with your project’s needs. For example, a model trained on ImageNet might be suitable for image classification tasks but unsuitable for natural language processing. Second, understand the model’s limitations. Pre-trained models often exhibit bias or perform poorly on edge cases. Thoroughly test the model with your specific data to identify and mitigate potential issues. We’ve seen teams struggle when they fail to address these compatibility and performance discrepancies.
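One simple way to test a pre-trained model against your own data is to break accuracy down by class, since a good overall score can hide a class the model handles badly. The sketch below assumes you already have lists of true and predicted labels; the labels shown are invented.

```python
from collections import defaultdict


def per_class_accuracy(true_labels, predicted_labels):
    """Return accuracy for each true class, to expose weak spots."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction in zip(true_labels, predicted_labels):
        total[truth] += 1
        if truth == prediction:
            correct[truth] += 1
    return {label: correct[label] / total[label] for label in total}


# Hypothetical labels from a small hand-checked sample.
truth = ["cat", "cat", "dog", "dog", "bird"]
predicted = ["cat", "dog", "dog", "dog", "cat"]

report = per_class_accuracy(truth, predicted)
print(report)  # classes with low accuracy deserve a closer look
```

Even a few dozen hand-labeled examples from your own data are usually enough to reveal whether the model transfers to your use case.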

Effective deployment hinges on choosing the right tools and infrastructure. Consider using cloud-based platforms like Google Cloud AI Platform or Amazon SageMaker for seamless model hosting and scaling. These services offer pre-built integrations with popular frameworks, simplifying the deployment process. Remember to optimize your model for inference speed and resource consumption, particularly crucial within the time constraints of a hackathon. For instance, model quantization or pruning can significantly reduce the model’s size and improve its performance. Careful planning of your deployment strategy is as important as choosing the right pre-trained model.

Deploying Your AI App: Choosing the Right Hosting Solution

Deploying your AI application requires careful consideration of your hosting solution. The wrong choice can lead to performance bottlenecks, scalability issues, and increased costs. In our experience, selecting a hosting provider depends heavily on your application’s specific needs and the size of your project. For smaller, less resource-intensive projects, a Platform as a Service (PaaS) like Google App Engine or AWS Elastic Beanstalk offers easy deployment and scaling capabilities, often without significant upfront investment. These platforms handle much of the infrastructure management, letting you focus on your application.

However, for more demanding AI applications, especially those involving large datasets or complex models, a custom infrastructure solution might be necessary. This allows for greater control over hardware specifications and configuration, essential for optimizing performance and ensuring sufficient resources. A common mistake we see is underestimating resource requirements, leading to slow response times or even application crashes. Consider factors such as anticipated traffic volume, model size, and computational needs when making your decision. For instance, a project using large language models will benefit from powerful GPUs available in cloud instances or dedicated servers.

Finally, don’t overlook the importance of security. When choosing a hosting provider, ensure they offer robust security features such as data encryption, access controls, and regular security audits. We’ve seen several projects compromised due to insufficient security measures. Prioritize providers with a proven track record and a commitment to data privacy, especially if your application handles sensitive user information. Comparing different providers based on their security features, SLAs (Service Level Agreements), and pricing models is crucial before committing to a long-term solution.

Optimizing Performance for Hackathon Environments

Hackathon time is precious; optimizing your AI model’s performance is crucial to maximize your chances of success. In our experience, many teams underestimate the importance of model optimization, leading to wasted time and suboptimal results. A common mistake we see is focusing solely on accuracy without considering inference speed and resource consumption. Remember, a highly accurate model that takes minutes to produce a single prediction is useless in a time-constrained hackathon.

Prioritize model quantization and pruning techniques to reduce model size and improve inference speed. Quantization reduces the precision of the model’s weights and activations, resulting in smaller file sizes and faster processing. Pruning removes less important connections within the neural network, which can yield comparable size reductions. For example, we’ve seen teams successfully reduce their model size by 70% using post-training quantization with minimal accuracy loss. Furthermore, consider leveraging cloud-based GPU acceleration; services like Google Colab or Amazon SageMaker offer free or trial tiers that can significantly accelerate training and inference, which can make a real difference in a hackathon.
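To show what these two techniques do to the numbers themselves, here is a toy sketch in plain Python: symmetric 8-bit quantization of a weight list and magnitude-based pruning. It is a simplified illustration with made-up weights, not how any specific framework implements these features; in practice you would use the tooling that ships with your framework.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.01, 1.0]  # hypothetical layer weights
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
print(quantized, restored)
print(prune_by_magnitude(weights, 0.5))
```

Storing each weight in 8 bits instead of 32 cuts weight storage to roughly a quarter, at the cost of a rounding error of at most half a quantization step per weight.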

Finally, effective data preprocessing is often overlooked. In a recent hackathon, a team struggling with poor model performance drastically improved their results simply by cleaning and normalizing their dataset more rigorously. Remember, even the best model is only as good as the data it’s trained on. Carefully consider your data pipeline; efficient preprocessing can save significant computational resources and time during the hackathon, leading to a more polished and performant final product. Focus on these key areas, and you’ll dramatically improve your chances of building and deploying a high-performing AI solution within the tight deadlines of a hackathon.

Hackathon Strategies and Best Practices

Teamwork and Collaboration: Building a Winning Hackathon Team

Effective teamwork is paramount to success in any hackathon, especially when leveraging no-code AI tools. In our experience, the most successful teams aren’t just composed of technically proficient individuals, but also those who possess complementary skills and a strong collaborative spirit. A common mistake we see is underestimating the importance of clear communication and well-defined roles. Before even brainstorming AI project ideas, define individual responsibilities – one team member might focus on data acquisition and preprocessing, another on model building using no-code platforms, and a third on presentation design and pitching.

Building a diverse team with skills covering design, development, and presentation is crucial. For instance, a team solely focused on technical aspects might create a powerful AI model, but fail to communicate its value effectively to judges. Conversely, a team strong in presentation but lacking technical depth risks appearing superficial. Therefore, actively seek individuals with different strengths. We’ve seen teams successfully integrate members with experience in UI/UX design, marketing, or even business development. These individuals can contribute significantly to the overall project’s success, even without direct involvement in the AI model’s creation.

To foster effective collaboration, establish clear communication channels and regular check-in points. Utilize project management tools like Trello or Asana to track progress, assign tasks, and ensure accountability. Regular, brief meetings (e.g., daily stand-ups) can prevent misunderstandings and maintain momentum. Remember, conflict resolution is also essential. Establish a clear process for addressing disagreements, prioritizing constructive feedback and focusing on solutions rather than blame. By proactively managing teamwork and fostering a supportive environment, your hackathon team will be well-equipped to overcome challenges and achieve remarkable results, even when using no-code AI tools.

Effective Time Management: Planning and Executing Your Project

Effective time management is paramount for hackathon success. In our experience, teams often underestimate the time required for each project phase, leading to rushed, incomplete projects. A common mistake is spending too long on initial ideation without a concrete plan. Instead, allocate specific time blocks for each stage: brainstorming (ideally, pre-hackathon), prototyping, testing, and presentation preparation. Consider using a timeboxing technique, assigning strict time limits to specific tasks.

Prioritize tasks using methods like MoSCoW (Must have, Should have, Could have, Won’t have). This helps focus on core features and prevents scope creep, a frequent time-waster. For example, a team building a no-code AI image generator might prioritize building basic image generation functionality (Must have) before adding advanced features like style transfer (Could have). Regular check-ins every hour or two help maintain momentum and identify potential roadblocks early. Don’t forget to schedule breaks; burnout drastically reduces productivity. Aim for short, focused work sessions interspersed with rest.
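If it helps to see MoSCoW and timeboxing combined, the sketch below orders tasks by priority and schedules them until the time budget runs out. The task names, hour estimates, and the `plan` helper are hypothetical; a shared spreadsheet would serve the same purpose.

```python
PRIORITY_ORDER = {"must": 0, "should": 1, "could": 2, "wont": 3}


def plan(tasks, hours_available):
    """Schedule tasks in MoSCoW order while they fit the remaining time."""
    scheduled = []
    remaining = hours_available
    ordered = sorted(tasks, key=lambda task: PRIORITY_ORDER[task[1]])
    for name, priority, hours in ordered:
        if priority == "wont":
            continue  # explicitly out of scope for this event
        if hours <= remaining:
            scheduled.append(name)
            remaining -= hours
    return scheduled


# Hypothetical backlog: (task, priority, estimated hours).
tasks = [
    ("style transfer", "could", 4),
    ("basic image generation", "must", 6),
    ("demo slides", "must", 2),
    ("gallery page", "should", 3),
]

print(plan(tasks, hours_available=12))
```

With a 12-hour budget, the optional style transfer feature is the one that drops out, which is exactly the kind of decision worth making before the clock starts.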

Successful project execution relies on clear communication and a well-defined workflow. Assign specific roles and responsibilities to each team member, and record them in your project management tool (such as Trello or Asana) so everyone can see who owns what. A clear division of labor, coupled with consistent communication, significantly improves efficiency. For instance, one member can focus on the no-code platform’s interface while another works on integrating the AI model. Regularly review your timeline and adapt as needed; flexibility is key in the fast-paced hackathon environment. Remember, a functional MVP (Minimum Viable Product) is better than a half-finished, overly ambitious project.

Presenting Your AI Application: Tips for a Winning Pitch

Crafting a compelling presentation is crucial for hackathon success. In our experience, judges are impressed by clear, concise explanations of the problem your AI solves, its innovative approach, and its potential impact. Avoid jargon; focus on communicating the value proposition to a diverse audience, even those unfamiliar with AI intricacies. A common mistake we see is overly technical demonstrations that overshadow the core idea.

Structure your presentation around a narrative arc. Begin by clearly defining the problem your AI tackles, using relatable examples that resonate with the judges. Then, showcase your solution, highlighting the unique aspects of your no-code AI implementation. For instance, if you leveraged a specific platform’s strengths, emphasize its efficiency and ease of use. Finally, present your results: quantify your AI’s performance using metrics relevant to the problem (accuracy, speed, cost savings, etc.). Visual aids like charts and graphs are indispensable for conveying complex data effectively. Remember, a strong narrative is more engaging than a dry technical overview.
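If your project is a classifier, a handful of held-out examples and a few lines of code are enough to put numbers on a slide. The sketch below computes accuracy for binary labels; precision and recall are included as an assumption about what judges may also ask for, and the labels in the demo are invented purely for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return accuracy, precision, and recall for binary labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    correct = sum(t == p for t, p in pairs)
    return {
        "accuracy": correct / len(pairs),
        # Fall back to 0.0 when a ratio is undefined (no positives predicted/present).
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


if __name__ == "__main__":
    # Made-up labels: 1 = positive class, 0 = negative class.
    actual = [1, 0, 1, 1, 0, 0, 1, 0]
    predicted = [1, 0, 0, 1, 0, 1, 1, 0]
    for name, value in classification_metrics(actual, predicted).items():
        print(f"{name}: {value:.2f}")
```

Reporting the size of the test set alongside these numbers helps judges gauge how much weight to give them.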

Remember to practice your pitch extensively. A polished, confident delivery significantly enhances your chances of winning. Consider rehearsing with colleagues to receive constructive feedback. Focus on communicating your passion and enthusiasm for your project. Judges often value the team’s dedication and ability to articulate their vision as much as the technical prowess of the AI itself. We’ve seen teams with slightly less sophisticated AI solutions win simply because they presented their work with exceptional clarity and confidence. A winning pitch is as much about storytelling as it is about technology.

Real-World Examples and Case Studies

Successful AI Hackathon Projects: Inspiration and Analysis

One successful hackathon project we analyzed used computer vision and readily available no-code platforms to create a system for automated sorting of recyclable materials. The team leveraged pre-trained models and a user-friendly interface to achieve impressive accuracy, even exceeding expectations in differentiating plastics. This highlights the power of focusing on a well-defined, achievable problem within the hackathon timeframe. Key to their success were meticulous data preparation and careful model selection, two steps that teams often overlook.

In contrast, a less successful project attempted a complex natural language processing (NLP) task involving sentiment analysis of nuanced social media posts. While ambitious, the team underestimated the data cleaning and model training requirements for such a task. In our experience, tackling ambitious NLP projects in a hackathon setting often requires pre-existing expertise or a significant pre-hackathon preparation phase. Choosing a simpler, more focused NLP task, like classifying customer reviews as positive or negative, would have yielded more tangible results within the time constraints.

To maximize your chances of success, carefully consider your team’s skillsets. Prioritize projects that build on existing no-code AI tools and pre-trained models. For example, projects centered on image recognition, chatbots, or simple predictive models built on public datasets are often more feasible. Remember that a well-executed, smaller-scale project that demonstrates clear results will impress judges more than an unfinished, overly ambitious undertaking. Focus on practical applications and demonstrable value.

Lessons Learned from Past Hackathons: Avoiding Common Mistakes

In our experience judging numerous hackathons, a recurring theme is the underestimation of project scope. Ambitious ideas often crumble under the time constraint. We’ve seen teams attempt to build fully fledged applications with complex functionality, only to deliver a barely working demo or, worse, nothing at all. Instead, focus on a narrow, well-defined problem and deliberately build a minimum viable product (MVP) that showcases your core idea. This leaves time for a polished presentation and demonstrable progress.

Another common pitfall is neglecting the user experience (UX). Teams often prioritize functionality over usability, resulting in clunky, unintuitive interfaces. Remember, a groundbreaking technology is useless if nobody can use it. Spend time prototyping your UX, even with simple tools like pen and paper, to iterate and refine the user journey. Consider user testing, even a quick session with fellow participants, to get valuable feedback early on. One team we mentored drastically improved their app’s adoption after incorporating feedback from a short user test.

Finally, effective teamwork and clear communication are paramount. A lack of defined roles and responsibilities, combined with poor communication, often leads to duplicated efforts and unmet deadlines. Before even beginning coding, define clear roles, establish a communication plan (e.g., using a project management tool), and regularly check in with your team. This proactive approach ensures everyone stays on track and contributes effectively. Proper planning significantly increases the chance of delivering a successful AI project, even within the intense hackathon environment.

Future Trends in No-Code AI Development for Hackathons

The democratization of AI through no-code/low-code platforms is rapidly changing the hackathon landscape. We’re witnessing a surge in projects leveraging pre-trained models and intuitive drag-and-drop interfaces to build sophisticated AI applications in record time. This trend will only accelerate, driven by the increasing availability of powerful, user-friendly tools. Expect to see more hackathons specifically designed around no-code AI development, offering pre-built datasets and API access to further reduce the barrier to entry.

One significant future trend is the rise of AI-assisted no-code development. Imagine tools that not only provide the building blocks for AI applications but also suggest model architectures, tune hyperparameters, and even offer debugging assistance. This will significantly lower the technical skill floor, allowing participants with limited coding experience to tackle complex problems. For example, we’ve seen platforms emerge that intelligently suggest relevant datasets based on a project’s objective, minimizing the time spent on data sourcing and preprocessing, a common bottleneck in traditional hackathons.

However, challenges remain. While no-code tools simplify development, they also limit how much control teams have over the underlying models, and the need for responsible AI development remains paramount. Participants must understand the biases embedded in pre-trained models and the ethical implications of their applications. Furthermore, the focus should shift from simply building a working prototype to creating impactful solutions with a clear understanding of data privacy and security. Hackathon organizers and mentors should prioritize workshops and educational resources on these aspects of ethical AI development to ensure future projects are both innovative and responsible.
