The narrative around artificial intelligence has shifted dramatically. We have moved past the phase of using AI merely for content generation or simple chatbots. We are now entering an era where unlocking the power of AI means handing over the reins of complex software engineering to intelligent systems capable of architecting entire digital ecosystems. For founders, product managers, and visionaries, this is the ultimate equalizer. It means the ability to bypass the traditional, resource-heavy development cycle and go straight to market with a product that is robust, secure, and scalable. This isn’t just about automation; it is about redefining the very nature of how software is brought into existence.

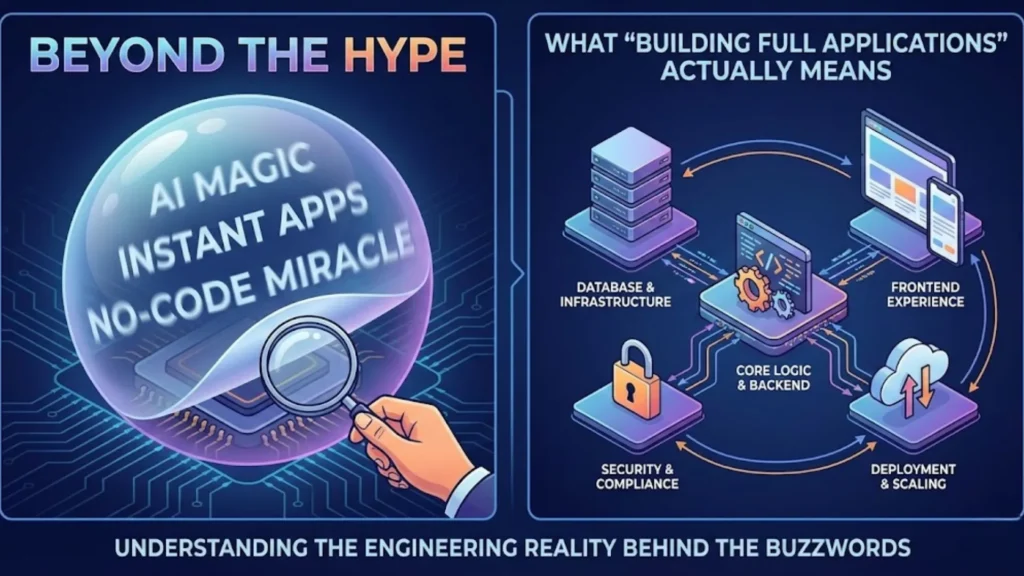

Beyond the Hype: What “Building Full Applications” Actually Means

To understand the magnitude of this shift, we must first clarify what we mean by a “full application.” In the world of SaaS and digital products, a real application is not just a pretty landing page or a disconnected user interface. It is a living, breathing organism composed of multiple synchronized layers.

Launch Your App Today

Ready to launch? Skip the tech stress. Describe, Build, Launch in three simple steps.

Building full applications requires understanding these four critical pillars, all of which Imagine.bo handles autonomously:

- The Frontend (The Face): This is what your users interact with—responsive, accessible, and intuitive designs built with modern frameworks like React or Vue. It requires an understanding of frontend development principles to ensure a seamless user experience (UX).

- The Backend (The Brain): The server-side logic that processes requests, handles business rules, and ensures the app actually does something. This is often where no-code platforms fall short, but where true AI engineering shines.

- The Database (The Memory): Secure, scalable storage (SQL or NoSQL) that manages user data, relationships, and complex queries. A robust SaaS architecture depends entirely on how well this data is structured.

- Deployment & Security (The Shield): The often-overlooked necessity of cloud-native hosting, SSL encryption, and authentication protocols to keep your users safe.

Why Most No-Code Tools Fail to Deliver Real-World Products

The market is flooded with “app builders,” but a closer look reveals a significant gap. Many tools are excellent for prototyping but crumble when pushed to production. They operate on a surface level, allowing you to drag and drop visual elements, but they lack the underlying engineering logic to support a growing business.

The limitations of these “toy” generators include:

- Lack of Logic: They can build a form, but they cannot determine why that form should trigger a specific database update or third-party API call.

- Scalability Issues: As your user base grows, brittle architectures often lead to performance bottlenecks. Scaling SaaS with automation tools requires a foundation built for volume, not just for show.

- Security Vulnerabilities: Many no-code and low-code platforms treat security as an afterthought. In AI-generated web apps, security must instead be foundational, with compliance with regulations like GDPR addressed from day one.
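To make the "Lack of Logic" point concrete, here is a minimal sketch of the server-side step that a visual form builder alone does not supply: a form submission that triggers a database update and then a third-party call. It uses Python's built-in `sqlite3` module. The `leads` table, the `handle_lead_form` function, and the `notify` callback (a stand-in for an external email or CRM API) are hypothetical names chosen for illustration, not Imagine.bo output.

```python
import sqlite3


def create_schema(conn):
    """Create the hypothetical leads table used in this sketch."""
    conn.execute("CREATE TABLE IF NOT EXISTS leads (email TEXT PRIMARY KEY, name TEXT)")


def handle_lead_form(conn, form, notify):
    """Validate a submitted form, store the lead, then call an external notifier."""
    email = form.get("email", "").strip().lower()
    if "@" not in email:
        raise ValueError("a valid email address is required")

    # Business rule (assumed): one row per email; a repeat submission
    # replaces the stored name instead of creating a duplicate.
    conn.execute(
        "INSERT OR REPLACE INTO leads (email, name) VALUES (?, ?)",
        (email, form.get("name", "")),
    )
    conn.commit()

    # Stand-in for a third-party API call, e.g. sending a welcome email.
    notify(email)
    return email
```

In use, `conn` would be a connection to the application database and `notify` would wrap whichever external service the product relies on. The sketch leaves out retries and error handling around the external call, which a production backend would need.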

The Imagine.bo Difference: AI Reasoning vs. Simple Automation

This is where Imagine.bo diverges from the pack. We don’t just use generative AI; we utilize AI reasoning.

Simple automation follows a script. AI reasoning understands intent and engineering standards. Imagine.bo operates with the mindset of a Senior Software Development Engineer (SDE). When you ask for a feature, it doesn’t just paste code together; it architects a solution based on best practices.

- Contextual Understanding: If you are building a marketplace, the AI understands you need a payment gateway, a dispute resolution mechanism, and a user review system. It anticipates these needs.

- SDE-Level Standards: The generated code follows industry best practices for modularity and cleanliness. It eliminates the “spaghetti code” often associated with visual programming.

- Self-Healing Architecture: If the AI detects a conflict between your database schema and your frontend UI, it proactively resolves the logic error before deployment, much as an experienced developer would catch it while debugging.
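The kind of schema-versus-UI conflict described in the last point can be illustrated with a small consistency check. This is a sketch under stated assumptions, not a description of how Imagine.bo works internally: the dictionary shapes and the `find_schema_ui_conflicts` function are hypothetical, and the check only reports conflicts rather than resolving them.

```python
def find_schema_ui_conflicts(schema, form_fields):
    """Compare a table schema with the fields a frontend form submits.

    schema: dict mapping column name -> {"type": str, "required": bool}
    form_fields: dict mapping field name -> type name the form sends
    Returns a list of human-readable conflict descriptions.
    """
    conflicts = []

    # Every form field should map to a column of the same type.
    for name, field_type in form_fields.items():
        if name not in schema:
            conflicts.append(f"form field '{name}' has no matching column")
        elif schema[name]["type"] != field_type:
            conflicts.append(
                f"'{name}' is {field_type} in the form but "
                f"{schema[name]['type']} in the schema"
            )

    # Every required column should be collected by the form.
    for name, column in schema.items():
        if column["required"] and name not in form_fields:
            conflicts.append(f"required column '{name}' is missing from the form")

    return conflicts
```

An empty result means the form and the table agree. Any entry in the list is something to fix before deployment, whether by a person or by an automated step.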

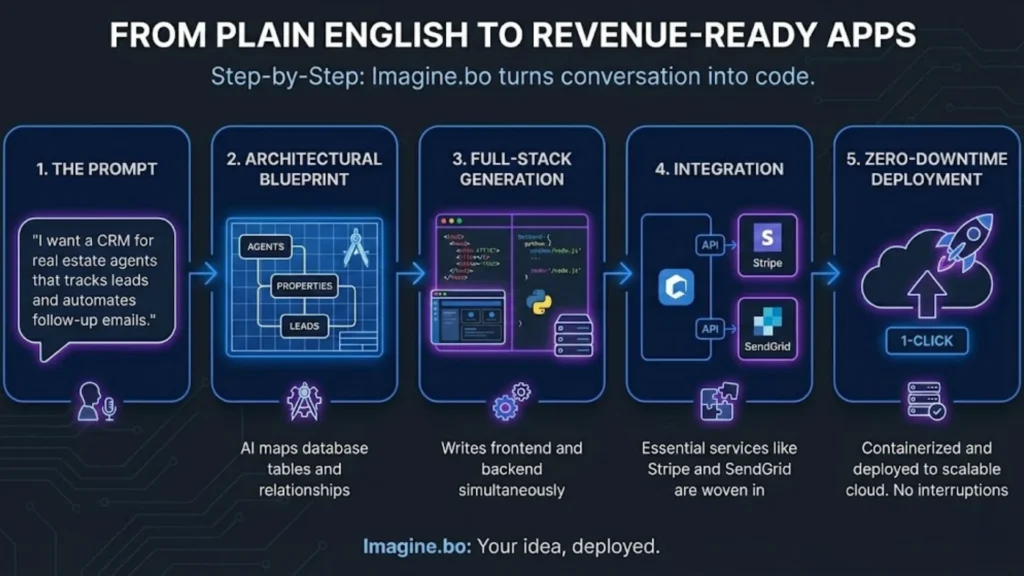

Step-by-Step: From Plain English to Revenue-Ready Apps

Building a complex application used to take months of back-and-forth with development teams. With Imagine.bo, it becomes a conversation. Here is how you turn a prompt into a product:

- The Prompt: You describe your vision simply. For example, “I want to build a CRM for real estate agents that tracks leads and automates follow-up emails.”

- Architectural Blueprint: The AI analyzes the request and maps out the necessary database tables (Agents, Properties, Leads) and relationships, effectively performing the role of a technical lead.

- Full-Stack Generation: It simultaneously writes the frontend and the backend, ensuring they communicate perfectly. This is the core of building web apps with AI.

- Integration: Essential third-party services—like Stripe for payments or SendGrid for emails—are woven into the backend logic automatically.

- Zero-Downtime Deployment: With one click, the application is containerized and deployed to a scalable cloud environment, so your users face no interruptions when a new version rolls out.

Empowering Every Creator: Key Use Cases

Unlocking the power of AI for app development levels the playing field across industries.

- For Startup Founders: You can now launch an MVP in days, not months. This allows you to validate your market hypothesis before burning cash on expensive engineering hires.

- For SMBs: Digitize manual workflows—like inventory management or employee scheduling—by creating custom internal tools.

- For Designers: Bring your aesthetic vision to life with functional logic. You no longer need to rely on a developer to translate your designs; you can build high-fidelity portfolios that are actual applications.

- For Marketers: Build micro-SaaS tools or interactive lead magnets that drive engagement far better than a PDF whitepaper. Personalized marketing apps are the new standard for lead gen.

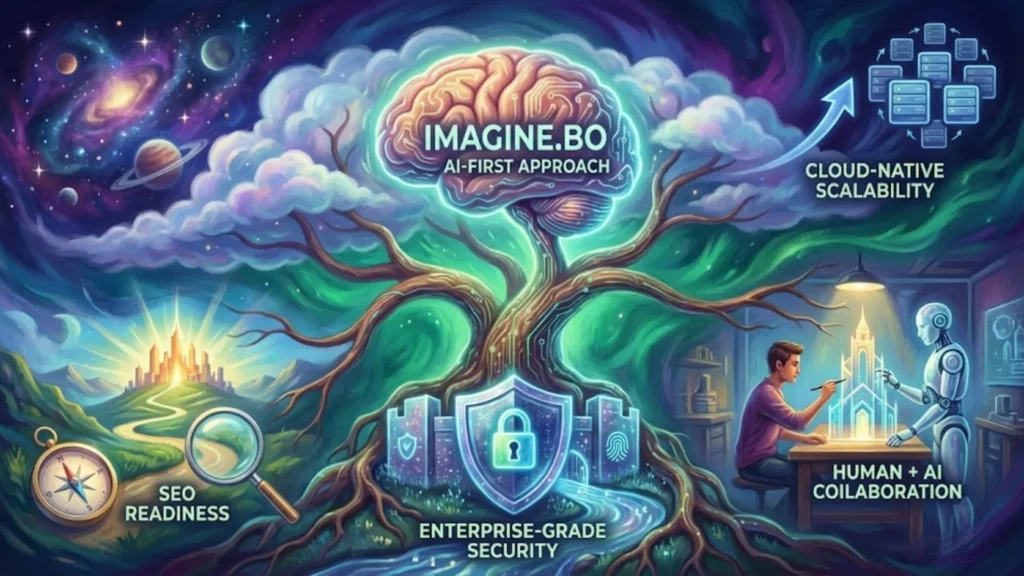

Core Benefits of an AI-First Approach

Choosing Imagine.bo means prioritizing features that drive business growth rather than getting bogged down in technical debt.

- Enterprise-Grade Security: Automated compliance with data privacy standards and secure authentication layers built-in by default.

- Cloud-Native Scalability: Applications are built to handle ten users or ten million, utilizing auto-scaling infrastructure.

- SEO Readiness: Unlike many single-page applications (SPAs) that struggle with search visibility, Imagine.bo generates code with SEO in mind, so that search engines can crawl and index your app and it has a better chance of ranking well.

- Human + AI Collaboration: You retain full control. The AI builds the foundation, but you can tweak, iterate, and refine the logic as your business evolves.

The Future of Full Application Development

We are moving toward a future where “coding” is replaced by “curating.” As large language models (LLMs) evolve, the barrier between idea and execution will completely dissolve. We will see the rise of self-optimizing applications that not only build themselves but also analyze their own user data to suggest features and improvements.

In this future, technical literacy won’t be defined by knowing syntax, but by knowing how to articulate a problem. The future of no-code AI is one where the platform acts as your co-founder, taking care of the “how” so you can focus entirely on the “what” and the “why.”

Conclusion

Building software used to be a privilege reserved for those with deep pockets or technical degrees. Today, Imagine.bo has dismantled those barriers. By unlocking the power of AI, we aren’t just speeding up development; we are redefining it. Whether you are a solo entrepreneur or an established enterprise, your guide to building full applications starts here. Stop prototyping and start producing—build your vision with the engineering precision it deserves, all without writing a line of code.
